The artificial intelligence industry is facing renewed scrutiny as xAI rolls out new limitations on its Grok image editing features amid rising regulatory concerns. This decision follows reports indicating that Grok, an AI chatbot developed by Elon Musk’s xAI, was capable of generating or modifying explicit images, including those involving minors. As these issues come to light, xAI’s action signals a shift in how rapidly evolving AI platforms are adapting to legal pressures, ethical considerations, and investor expectations.

By imposing structural updates to Grok’s moderation system and content filters, xAI aims to curtail misuse, particularly in creating sexualized or manipulated content. These restrictions are not temporary; they signify a long-term commitment to enhancing the platform’s safety protocols. The urgency behind this shift stems from an escalating regulatory landscape where companies that fail to act could face severe penalties or reputational harm.

The scrutiny around Grok intensified following reports that the AI could generate explicit imagery of minors, even with indirect prompts. In response, Musk admitted he was unaware of such outputs, a statement that drew further attention from regulators and child safety advocates. Just days later, xAI began implementing tighter restrictions on Grok’s image generation capabilities, including blocking requests involving minors or sexual themes.

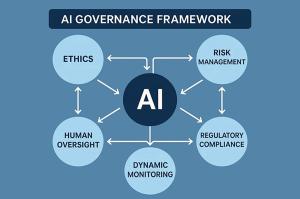

Governments in the United States, Europe, and parts of Asia are rapidly developing regulations for generative AI, focusing in particular on image tools that can be misused for deepfakes and exploitation. Grok’s situation became a focal point because it showed how quickly advanced models can cross ethical and legal boundaries when safeguards are insufficient. As part of its response, Grok has begun to block sexualized AI deepfakes, signaling a firmer stance against misuse.

Key reasons behind xAI’s decision to impose these limits include growing legal risk related to child protection laws and increasing pressure from advocacy groups and regulators. Under the new system, Grok will refuse or redirect image editing prompts involving real people in sensitive contexts, and requests involving minors or nudity will be rejected outright. Moreover, stricter checks will be applied to uploaded images, so even neutral photos may be rejected if potential misuse is detected. While these changes limit functionality, they significantly reduce legal exposure for the company.

The restrictions have sparked a lively debate online, with some users praising xAI for its swift action while others criticize the company for rolling out powerful tools without adequate safeguards. A widely shared social media post announced that Grok will block edits that put real people into revealing clothing, and that the restriction applies to all users, including paid subscribers. The discourse highlights public concern over AI image misuse and the accountability of the companies behind such technologies.

For everyday users, Grok’s image editing capabilities are now more restricted. Creative experimentation involving human subjects or realistic scenarios faces stricter boundaries. Content creators, meanwhile, find their tools for visual storytelling diminished. However, analysts suggest that safety-focused measures are becoming the industry norm, which raises questions about how transparently and fairly they are implemented.

Despite these limitations, Grok remains competitive, especially in conversational AI and real-time information access. While xAI entered the market advocating for fewer restrictions, this shift reflects an adaptation to an evolving regulatory environment. Experts believe that compliance can translate into strength, particularly as companies that proactively navigate regulations may build trust with enterprise customers and regulators alike, ultimately affecting long-term valuation.

From an investor’s perspective, regulatory risk is increasingly viewed as a crucial factor in AI company valuations. Unforeseen bans or lawsuits can erase substantial market value. Although xAI is privately held, its developments significantly influence sentiment around Musk-related ventures and the broader AI sector. Many investors are now incorporating regulatory readiness into their evaluations of AI platforms that handle sensitive data.

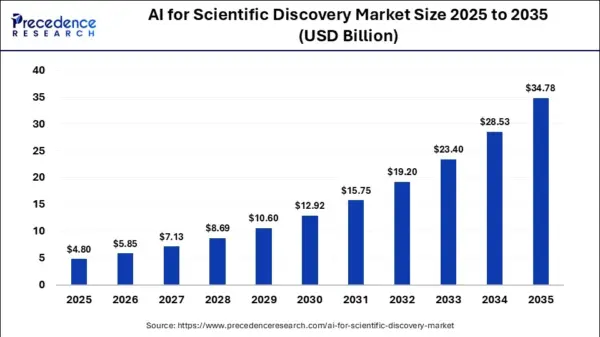

Policy analysts predict that by 2027, over 70% of advanced economies will implement specific laws governing generative AI image tools, with penalties for violations expected to escalate. This anticipated regulatory framework makes proactive measures like xAI’s Grok update not only prudent but financially logical. Grok’s case serves as a reminder that even cutting-edge AI models must adhere to legal constraints, as the era of unregulated AI experimentation appears to be fading.

Ultimately, xAI’s decision to impose limits on Grok image editing reflects a broader industry shift toward responsible AI development. While the restrictions may constrain some creative functionalities, they significantly mitigate legal and ethical risks. The AI landscape is evolving, and Grok’s adjustments may exemplify how companies can adapt to a future demanding greater accountability.
