ELAXIR, an initiative aimed at enhancing understanding of artificial intelligence (AI) in healthcare, has launched a unique educational tool designed for healthcare professionals, patients, and AI developers. The project, spearheaded by Dr. Raquel Iniesta, Reader in Statistical Learning for Precision Medicine at the Institute of Psychiatry, Psychology & Neuroscience at King’s College London, seeks to foster discussions around ethical AI usage in healthcare settings. This initiative comes as the integration of AI into healthcare continues to grow, with applications ranging from analyzing electronic health records to facilitating remote patient monitoring.

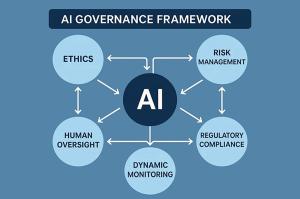

The need for ethical considerations in AI implementation has never been more pressing. Concepts such as patient data privacy, algorithmic bias, and the necessity of human oversight are increasingly recognized as critical to building trust in AI technologies. The ELAXIR project, which received funding from RAI UK, UKRI, and the NIHR Maudsley BRC, aims to empower patients and healthcare stakeholders through a comprehensive understanding of these principles.

Central to the ELAXIR initiative is the “patient-extended” collaborative model, proposed by Iniesta, which emphasizes the importance of patients understanding both AI systems and their own rights. This model prioritizes collaboration among developers, clinicians, and patients to ensure that AI technologies are implemented safely and ethically. In doing so, it promotes a patient-centric approach to AI in healthcare, ensuring patients are equipped to make informed decisions regarding their care.

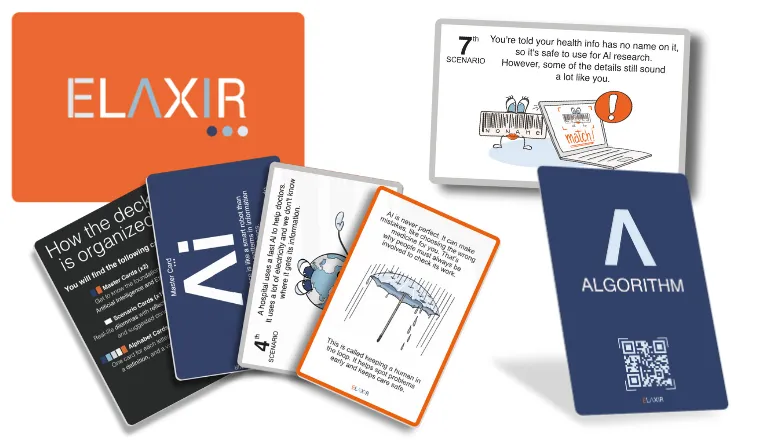

The ELAXIR tool itself consists of a deck of cards divided into three key components. First, the Master Cards explain foundational concepts such as “AI” and “Ethics.” Second, the Scenario Cards present 13 thought-provoking scenarios co-designed by clinicians, developers, and patients. These scenarios are intended to prompt discussion about the implications of AI in healthcare delivery. For example, they ask whether AI should make treatment decisions without patient understanding, and how to resolve conflicts between AI recommendations and physician advice.

The third component of the deck is the Ethical AI Dictionary, which provides a comprehensive A to Z of key terms related to AI and ethics, linking them directly to the corresponding scenarios. This resource aims to make complex concepts accessible to a diverse audience, including patients, clinicians, and even children as young as ten. Supporting the cards is a dedicated website, elaxircards.com, which offers additional resources, articles, podcasts, and research on ethical AI in healthcare.

Since October 2023, Dr. Iniesta has further developed the “patient-extended” model to promote education among all stakeholders in the healthcare sector. This initiative aims to prevent the dehumanization of patient care in the context of AI integration while fostering empowerment within a patient-centered healthcare system. The model includes a ‘five-facts’ approach, providing a straightforward, evidence-based framework for understanding accountability in ethical AI, aligned with guidance from the World Health Organization and existing policies like the EU AI Act and GDPR.

The ELAXIR initiative marks a significant step toward making ethical AI practices standard in healthcare, while also addressing the complexities surrounding its implementation. As AI technologies continue to evolve and permeate various aspects of patient care, the importance of such educational resources will only increase. The collaborative model and its associated tools may play a critical role in shaping how AI is utilized in healthcare, ensuring that it serves as a valuable asset while upholding the rights and dignity of patients.
