Artificial intelligence systems are becoming increasingly conversational and autonomous, yet a significant hurdle remains: memory. While large language models (LLMs) can generate fluent responses and engage in complex reasoning, many still struggle to retain information across interactions. A recent study published in Transactions on Machine Learning Research, titled “The AI Hippocampus: How Far Are We From Human Memory?”, emphasizes that stronger memory capabilities are needed for AI to be reliable and useful over the long term.

The study argues that memory is not merely an adjunct to intelligence but its foundation. In humans, memory enables learning from experience, long-term planning, contextual comprehension, and personal continuity. Most AI systems, by contrast, operate as stateless predictors: they respond to each prompt without retaining a consistent understanding of past interactions or evolving objectives. This limitation may hold AI applications back as they evolve from static question-answering systems into interactive agents, tutors, healthcare assistants, and more.

For tasks that require personalized engagement, such as tutoring or healthcare support, the ability to store, retrieve, and update information over time is crucial. Without a robust memory system, AI risks becoming unreliable or misleading in real-world scenarios. The authors assert that addressing memory is essential not only for performance but also for safety, alignment, and user trust.

Drawing on neuroscience, the paper highlights complementary learning systems theory, which distinguishes between fast-learning episodic memory, supported by the hippocampus, and slower, consolidated knowledge in the neocortex. This analogy provides the foundation for the study’s main contribution: a unified taxonomy of the memory mechanisms used in modern AI systems.
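To make the fast/slow distinction concrete, here is a minimal Python sketch, assuming a toy setting in which each “memory” is a single number keyed by a string. The class `ComplementaryMemory` and its methods `observe`, `consolidate`, and `recall` are hypothetical names for illustration, not anything taken from the paper: new experiences land in an episodic buffer in one shot, while a semantic store changes only gradually when those episodes are replayed during consolidation.

```python
from dataclasses import dataclass, field


@dataclass
class ComplementaryMemory:
    """Toy model of complementary learning systems (hypothetical design).

    episodic: fast, one-shot storage of individual experiences
    semantic: slow knowledge that changes only during consolidation
    """
    learning_rate: float = 0.1  # step size of the slow learner (assumed value)
    episodic: list = field(default_factory=list)
    semantic: dict = field(default_factory=dict)

    def observe(self, key: str, value: float) -> None:
        # Fast path: store the raw experience immediately, with no averaging.
        self.episodic.append((key, value))

    def consolidate(self) -> None:
        # Slow path: replay each stored episode, nudge the semantic estimate
        # toward it by a fraction of the difference, then empty the buffer.
        for key, value in self.episodic:
            old = self.semantic.get(key, value)  # first sighting initializes the estimate
            self.semantic[key] = old + self.learning_rate * (value - old)
        self.episodic.clear()

    def recall(self, key: str):
        # Prefer the most recent unconsolidated episode; otherwise fall back
        # to consolidated knowledge (None if the key was never seen).
        for k, v in reversed(self.episodic):
            if k == key:
                return v
        return self.semantic.get(key)


mem = ComplementaryMemory(learning_rate=0.5)
mem.observe("temperature", 10.0)
mem.consolidate()                  # semantic estimate starts at 10.0
mem.observe("temperature", 20.0)
print(mem.recall("temperature"))   # 20.0, from the fast episodic buffer
mem.consolidate()
print(mem.recall("temperature"))   # 15.0, the slowly updated semantic value
```

The small `learning_rate` is what makes the semantic store “slow”: a single surprising episode shifts consolidated knowledge only partway, while the episodic buffer still returns the exact recent observation until it is consolidated.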

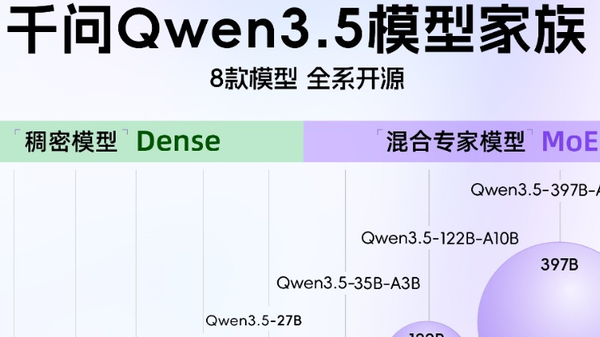

Three Memory Paradigms in AI

The research categorizes existing studies into three major memory paradigms: implicit memory, explicit memory, and agentic memory. Each of these paradigms serves a unique purpose in how AI systems manage and utilize information, and each comes with its own advantages and drawbacks.

Implicit memory is knowledge encoded in a model’s parameters during training, encompassing factual data, linguistic patterns, commonsense reasoning, and associative relationships. The survey shows that transformer models can store a substantial amount of knowledge internally and retrieve it through their attention and feed-forward layers. This form of memory has clear limitations, however: updating or removing specific knowledge often requires costly retraining or intricate editing techniques. Implicit memory is also subject to interference, in which learning new information disrupts existing knowledge, and its capacity is limited. Together, these constraints make it impractical for continual learning or real-time adaptation.
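Interference is easy to reproduce at toy scale. The sketch below is a deliberately tiny illustration, not a description of how transformers actually store knowledge: a single shared parameter `w` is fit by gradient descent to one “fact” (y = 2x) and then to a conflicting one (y = -x). Because both facts live in the same parameter, learning the second overwrites the first. The helper names `train` and `loss` are hypothetical.

```python
def train(w: float, data: list, lr: float = 0.1, epochs: int = 200) -> float:
    # Plain stochastic gradient descent on squared error for the model y = w * x.
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2 * (w * x - y) * x
    return w


def loss(w: float, data: list) -> float:
    # Mean squared error of the model y = w * x on a dataset.
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


task_a = [(1.0, 2.0), (2.0, 4.0)]    # "fact" A: y = 2x
task_b = [(1.0, -1.0), (2.0, -2.0)]  # conflicting "fact" B: y = -x

w = train(0.0, task_a)
loss_a_before = loss(w, task_a)      # close to 0: fact A has been learned

w = train(w, task_b)
loss_a_after = loss(w, task_a)       # large: learning B overwrote A

print(f"loss on A after learning A: {loss_a_before:.4f}")
print(f"loss on A after learning B: {loss_a_after:.4f}")
```

Real models have vastly more parameters than one, so interference is usually partial rather than total, but the toy shows the basic tension: gradient updates for new data move weights that old knowledge also depends on.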

Explicit memory aims to overcome these limitations by externalizing knowledge into retrievable storage such as documents, vector databases, or knowledge graphs. The study discusses retrieval-augmented generation (RAG) frameworks, which let models query external memory during inference, improving accuracy and scalability. Explicit memory offers greater flexibility and interpretability, but it also introduces new challenges, such as retrieval errors and computational overhead, which require balancing relevance, efficiency, and robustness.
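The retrieve-then-generate loop can be sketched in a few lines of Python. This is a minimal sketch under simplifying assumptions: bag-of-words term counts stand in for learned embeddings, a Python list stands in for a vector database, and the names `ExplicitMemory`, `embed`, and `build_prompt` are hypothetical. The sketch stops at building the augmented prompt; sending it to an actual LLM is out of scope.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Stand-in for a learned embedding: bag-of-words term counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(count * b[term] for term, count in a.items())
    norm = math.sqrt(sum(c * c for c in a.values())) * math.sqrt(sum(c * c for c in b.values()))
    return dot / norm if norm else 0.0


class ExplicitMemory:
    """Hypothetical external memory: a list of (text, vector) pairs searched by similarity."""

    def __init__(self) -> None:
        self.docs: list = []

    def add(self, text: str) -> None:
        # Writing to explicit memory needs no retraining: just store the text and its vector.
        self.docs.append((text, embed(text)))

    def retrieve(self, query: str, k: int = 2) -> list:
        # Score every stored document against the query and keep the top k.
        q = embed(query)
        ranked = sorted(self.docs, key=lambda doc: cosine(q, doc[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(memory: ExplicitMemory, question: str, k: int = 2) -> str:
    # Retrieved passages are placed ahead of the question as context for the model.
    context = "\n".join(f"- {doc}" for doc in memory.retrieve(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"


mem = ExplicitMemory()
mem.add("the patient is allergic to penicillin")
mem.add("the patient prefers morning appointments")
mem.add("paris is the capital of france")
print(build_prompt(mem, "which drug is the patient allergic to", k=1))
```

Because knowledge lives outside the model, adding a fact is a call to `add` rather than a retraining run. The trade-offs the study mentions appear here too: if the similarity function ranks the wrong passage first, the model receives misleading context, and scoring every stored document on each query is the kind of overhead that real vector databases reduce with approximate nearest-neighbor indexes.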

The final paradigm, agentic memory, represents a shift toward persistent memory within autonomous AI agents. It enables systems to maintain an internal state across interactions, supporting long-term planning, goal tracking, and self-consistency, capabilities that are crucial for applications such as personal assistants and robotics. The study likens agentic memory to the executive functions of the human prefrontal cortex: it lets AI agents coordinate implicit and explicit memory while adapting their strategies over time.
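Persistence across interactions is the key difference from a stateless predictor. The sketch below assumes the simplest possible design, a JSON file on disk, to show an agent’s goals and notes surviving from one session to the next. `AgentState` and its fields are hypothetical and do not describe any particular agent framework.

```python
import json
import tempfile
from dataclasses import asdict, dataclass, field
from pathlib import Path


@dataclass
class AgentState:
    """Hypothetical persistent state an agent carries from session to session."""
    goals: dict = field(default_factory=dict)  # goal text -> "open" or "done"
    notes: list = field(default_factory=list)  # facts learned in past interactions

    def add_goal(self, goal: str) -> None:
        self.goals.setdefault(goal, "open")

    def complete_goal(self, goal: str) -> None:
        if goal in self.goals:
            self.goals[goal] = "done"

    def open_goals(self) -> list:
        return [g for g, status in self.goals.items() if status == "open"]

    def save(self, path: Path) -> None:
        # Writing state to disk lets the next session resume where this one ended.
        path.write_text(json.dumps(asdict(self)))

    @classmethod
    def load(cls, path: Path) -> "AgentState":
        if not path.exists():
            return cls()  # first session: start with empty memory
        return cls(**json.loads(path.read_text()))


with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "agent_state.json"

    # Session 1: the agent learns a preference and finishes one of two goals.
    state = AgentState()
    state.add_goal("book flight")
    state.add_goal("reserve hotel")
    state.notes.append("user prefers aisle seats")
    state.complete_goal("book flight")
    state.save(path)

    # Session 2: a fresh object reloads the saved state.
    restored = AgentState.load(path)
    print(restored.open_goals())  # ['reserve hotel']
    print(restored.notes)         # ['user prefers aisle seats']
```

Real agent systems store richer state and must decide what to summarize or forget; this sketch shows only the basic cycle of loading state at the start of a session, updating it as goals progress, and saving it before the session ends.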

The survey also extends its discussion to multimodal models, which integrate inputs such as vision and audio and support capabilities such as spatial reasoning. With the rise of robotics and other real-world applications, memory must span multiple sensory and action modalities. The study illustrates how multimodal memory supports tasks such as visual grounding and embodied learning, which robots need in order to navigate and interact with their environments effectively.

Memory also plays a pivotal role in multi-agent systems, where shared and individual memory structures facilitate coordination and collaboration. In these settings, memory functions as both a cognitive and a social capability, underpinning communication and collective intelligence among agents.

Despite the challenges identified, including limited memory capacity and difficulty ensuring factual consistency, the study presents memory as a promising avenue for advancing AI’s capabilities. The authors argue that improvements in memory design, integration, and governance will be crucial as the field works toward more human-like AI systems. Rather than depending solely on larger models or more data, the future of AI may hinge on how well these systems manage and use memory.
