As artificial intelligence (AI) systems increasingly assume decision-making roles across sectors such as fraud detection, trading, and cybersecurity, the traditional model of “human-in-the-loop” oversight is rapidly becoming obsolete. Experts argue that the concept, once seen as a prudent safeguard, now fails to keep pace with the speed and scale at which AI operates.

The speed at which AI systems can make decisions has escalated dramatically; millions of transactions can be evaluated per hour by a single fraud model, while recommendation engines influence billions of interactions daily. In today’s environment, relying on humans to oversee every AI decision is a comforting but impractical fiction. Traditional oversight practices, often manual and periodic, are proving inadequate for the continuous and dynamic nature of modern AI systems.

Human oversight is already faltering in real-world applications. Flash crashes in financial markets and runaway digital ad spending illustrate how quickly failures can cascade. In many cases, human review is too slow or too fragmented to intervene in time, so failures are explained only after the damage is done. This highlights the urgent need for a new approach to governance that can operate at the pace of automated systems.

The issue is compounded by the inherently nondeterministic behavior of AI and its capacity for virtually limitless output. Current research into AI governance frameworks suggests that automated oversight mechanisms must be developed to match the rapid evolution of AI systems. As the complexity of these systems grows, there is a pressing need for AI to assume supervisory roles over other AI, effectively creating an “AI governs AI” framework.

Moving Toward AI Oversight

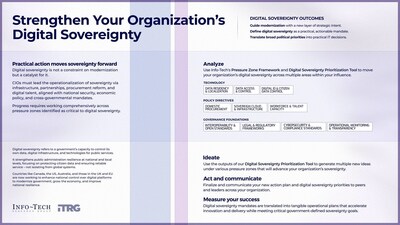

This shift does not imply the removal of human oversight; rather, it reallocates responsibilities to where both humans and AI can add the most value. Effective governance requires a layered model with clear separations of authority. AI systems should not monitor themselves; governance must be independent, with rules and thresholds defined by humans. Actions taken by AI must be logged, inspectable, and reversible, ensuring accountability remains intact.
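The requirements in this paragraph (independent governance, human-defined thresholds, and actions that are logged, inspectable, and reversible) can be illustrated with a minimal sketch. All names here (`Policy`, `Governor`, `max_risk_score`) are hypothetical and chosen for illustration; they do not come from any particular governance product or from the frameworks discussed in this article.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Policy:
    """Human-defined rule (hypothetical): the highest risk score allowed."""
    max_risk_score: float


@dataclass
class LoggedAction:
    """One inspectable audit-log entry, including how to undo the action."""
    description: str
    risk_score: float
    allowed: bool
    undo: Callable[[], None]
    reverted: bool = False


@dataclass
class Governor:
    """Independent overseer: it never makes decisions, it only checks them."""
    policy: Policy
    log: List[LoggedAction] = field(default_factory=list)

    def review(self, description: str, risk_score: float,
               apply: Callable[[], None], undo: Callable[[], None]) -> bool:
        # Apply the action only if it stays within the human-set threshold.
        allowed = risk_score <= self.policy.max_risk_score
        if allowed:
            apply()
        # Every proposal is logged, whether or not it was allowed.
        self.log.append(LoggedAction(description, risk_score, allowed, undo))
        return allowed

    def revert(self, index: int) -> None:
        # Undo a previously applied action; blocked actions have nothing to undo.
        entry = self.log[index]
        if entry.allowed and not entry.reverted:
            entry.undo()
            entry.reverted = True


# Example: a fraud model proposes account blocks; the governor checks each one.
blocked = set()
governor = Governor(Policy(max_risk_score=0.8))
governor.review("block account A", 0.3,
                lambda: blocked.add("A"), lambda: blocked.discard("A"))
governor.review("block account B", 0.95,
                lambda: blocked.add("B"), lambda: blocked.discard("B"))
governor.revert(0)  # a human or a higher-level policy rolls back the first action
```

The point of the design is separation: the model that proposes an action never evaluates its own proposal, and the threshold lives in a policy object that only humans set.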

The NIST AI Risk Management Framework calls for ongoing monitoring and risk management throughout the AI lifecycle, which at the scale described here is practical only with automated monitoring and policy enforcement. This has led to the emergence of AI observability tools that use AI to continuously monitor the performance and behavior of AI systems, alerting humans to potential risks in real time. In this model, AI monitors AI under human-defined constraints, resembling how internal audits and security operations function at scale.
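As a rough illustration of continuous monitoring under human-defined constraints, the sketch below swaps in a simple rolling-rate check for the AI-based observability tools the article describes. The class name `RateMonitor` and its parameters (`baseline_rate`, `tolerance`, `window`) are assumptions made for this example, not part of the NIST framework or any real tool.

```python
from collections import deque


class RateMonitor:
    """Watches a stream of model decisions and signals when the flag rate drifts."""

    def __init__(self, baseline_rate: float, tolerance: float, window: int):
        self.baseline_rate = baseline_rate  # expected share of flagged decisions
        self.tolerance = tolerance          # human-defined allowed deviation
        self.recent = deque(maxlen=window)  # sliding window of recent decisions

    def observe(self, flagged: bool) -> bool:
        """Record one decision; return True if a human should be alerted."""
        self.recent.append(1 if flagged else 0)
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet to judge
        rate = sum(self.recent) / len(self.recent)
        return abs(rate - self.baseline_rate) > self.tolerance


# Example: a fraud model that normally flags about 10% of transactions.
monitor = RateMonitor(baseline_rate=0.1, tolerance=0.25, window=10)
normal_alerts = [monitor.observe(i == 0) for i in range(10)]  # 1 of 10 flagged
surge_alerts = [monitor.observe(True) for _ in range(10)]     # every one flagged
```

Here the normal traffic produces no alerts, while the surge eventually pushes the rolling rate past the tolerance and triggers one. A production monitor would track many more signals, but the division of labor is the same: software watches every decision, and humans set the baseline and tolerance.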

With this architectural shift, human roles transition from direct oversight to strategic design. Leaders are tasked with establishing operating standards, defining constraints, and designing pathways for escalation and accountability in case of failures. This level of abstraction enables humans to govern AI effectively, thereby enhancing decision-making and security outcomes.

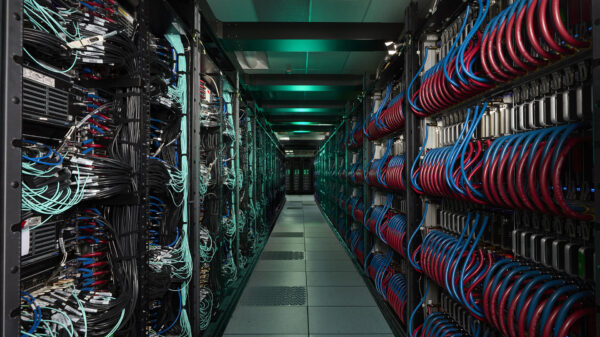

For technology leaders, implementing an AI-governs-AI framework is becoming a necessity. This involves designing a comprehensive oversight architecture that includes continuous monitoring, risk identification, and anomaly detection across AI systems. Clear autonomy boundaries must be established to delineate when AI can act independently and when human intervention is required. Auditable visibility and telemetry are also crucial for maintaining oversight of agentic workflows.
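An autonomy boundary of the kind described here could be expressed as a small routing rule with telemetry attached. This is a minimal sketch under stated assumptions: the two criteria (`min_confidence`, `max_impact_usd`) and all names are hypothetical, and a real deployment would choose its own criteria.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class AutonomyBoundary:
    """Human-defined limits (hypothetical) on when the AI may act alone."""
    min_confidence: float
    max_impact_usd: float


telemetry: List[Dict[str, object]] = []  # auditable record of every routing decision


def route(decision_id: str, confidence: float, impact_usd: float,
          boundary: AutonomyBoundary) -> Route:
    # Act autonomously only when confidence is high AND potential impact is low.
    within = (confidence >= boundary.min_confidence
              and impact_usd <= boundary.max_impact_usd)
    chosen = Route.AUTONOMOUS if within else Route.HUMAN_REVIEW
    telemetry.append({
        "decision_id": decision_id,
        "confidence": confidence,
        "impact_usd": impact_usd,
        "route": chosen.value,
    })
    return chosen


boundary = AutonomyBoundary(min_confidence=0.9, max_impact_usd=1_000.0)
small_refund = route("txn-1", confidence=0.97, impact_usd=40.0, boundary=boundary)
large_transfer = route("txn-2", confidence=0.97, impact_usd=50_000.0, boundary=boundary)
```

In this example the small refund is handled autonomously, while the large transfer is escalated to a human despite the model's high confidence, and both decisions leave a telemetry record that can be audited later.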

Investment in AI-native governance tools is essential, as legacy systems are insufficient for managing the complexities of modern AI. Leaders must also prioritize upskilling their teams to understand the objectives of AI governance, which extend beyond ethics to encompass observability and system-level risks.

The notion that a human supervisor can effectively monitor every AI system is increasingly unrealistic. As AI operates at a scale and speed that exceed human capability, the path forward involves embracing a governance model where AI assumes routine oversight, allowing humans to focus on setting standards and owning outcomes. Technology leaders must ask whether they have built an enterprise-wide oversight stack that matches the power and complexity of the AI systems they deploy.
