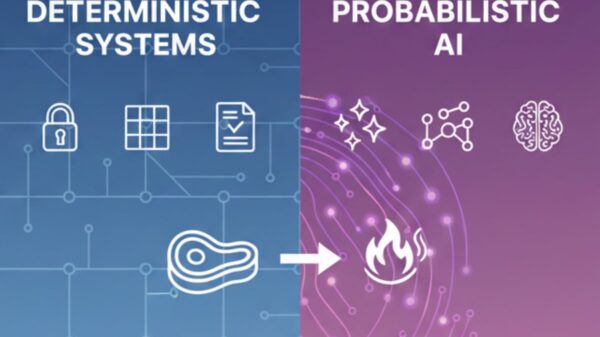

The European Telecommunications Standards Institute (ETSI) has introduced a new standard, ETSI EN 304 223, which establishes baseline cybersecurity requirements for artificial intelligence (AI) systems. Approved by national standards bodies, it is the first globally applicable European Standard (EN) focused specifically on securing AI technologies, and its implications extend beyond European markets.

The standard addresses unique security risks associated with AI that are not present in traditional software systems. Threats such as data poisoning, indirect prompt injection, and vulnerabilities tied to complex data management necessitate the development of specialized defenses. ETSI EN 304 223 integrates established cybersecurity practices with targeted measures that cater to the distinctive characteristics of AI models and systems.

Adopting a full lifecycle perspective, the ETSI framework delineates thirteen principles spanning secure design, development, deployment, maintenance, and end-of-life considerations. This holistic approach aligns with internationally recognized AI lifecycle models, thereby enhancing interoperability and promoting consistent implementation across existing regulatory and technical ecosystems.

ETSI EN 304 223 is intended for a broad spectrum of organizations within the AI supply chain, including vendors, integrators, and operators. It encompasses systems based on deep neural networks, including generative AI, thereby addressing a wide array of applications and industries. The standard’s introduction is timely, as the proliferation of AI technologies raises significant concerns regarding security and ethical use.

As organizations increasingly rely on AI systems, robust cybersecurity measures become essential. Managing AI systems, especially those built on large datasets and complex algorithms, raises the stakes for confidentiality and integrity. The new standard underscores the importance of proactive risk mitigation.

Further guidance is anticipated through ETSI Technical Report 104 159, which will specifically focus on the risks associated with generative AI, including deepfakes, misinformation, confidentiality concerns, and intellectual property protection. This forthcoming report aims to provide additional resources and clarity for organizations navigating the evolving landscape of AI security.

As the global AI landscape continues to evolve, the introduction of ETSI EN 304 223 represents a significant step forward in addressing cybersecurity challenges in this domain. By setting clear standards and principles, ETSI aims to foster a more secure environment for the deployment of AI technologies, paving the way for broader adoption and trust in innovative solutions.
