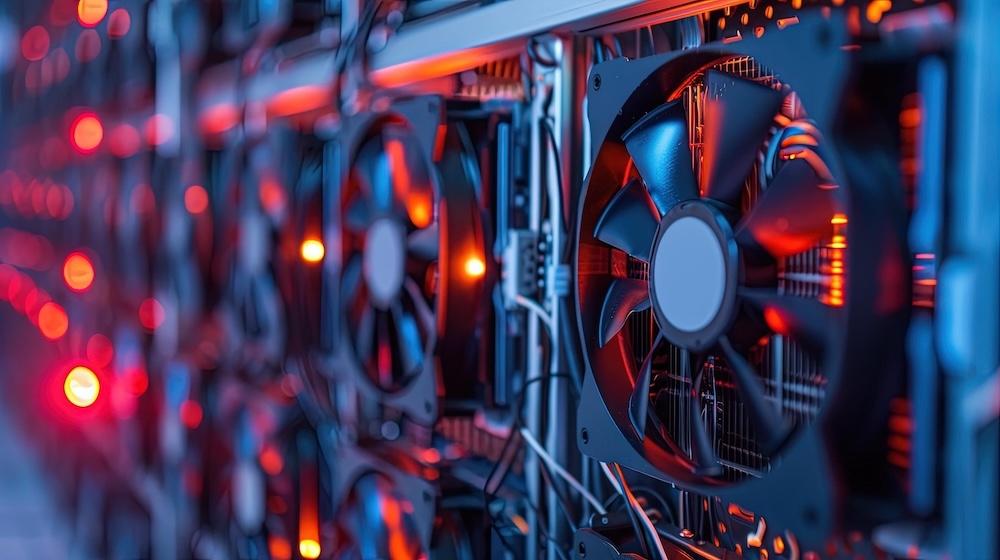

DigitalOcean’s latest production deployment points to a shift in how cloud providers compete in the GPU market. Rather than competing on hardware specifications alone, providers increasingly emphasize delivered performance: throughput, latency, and cost. DigitalOcean recently showcased its optimization work through a deployment with Character.ai that doubled throughput and halved per-token cost compared to standard GPU configurations, thanks to enhancements made on **AMD Instinct** GPUs.

The deployment was tailored to manage over a billion daily queries for Character.ai, targeting latency-sensitive conversational workloads that demand consistent response times even under extreme concurrency. Traditional cloud models typically provision GPU capacity while leaving the optimization process to customers. However, DigitalOcean has taken a proactive approach by integrating hardware-aware scheduling into its inference runtime tuning, thereby achieving performance enhancements that generic infrastructure configurations often overlook.

Technical Architecture Drives Economic Impact

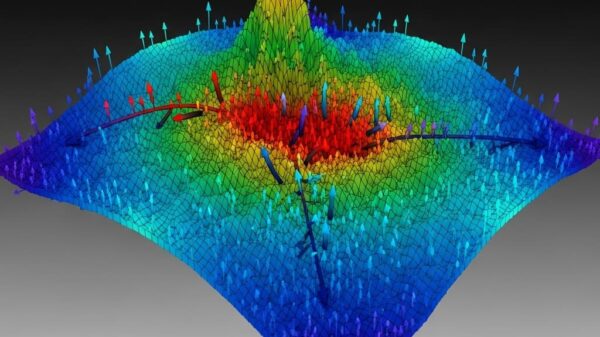

A technical deep dive published by DigitalOcean reveals that these performance gains stemmed from coordinated optimizations across multiple layers of the technology stack. Engineers from DigitalOcean collaborated with teams from Character.ai and **AMD** to configure the **AMD Instinct MI300X** and **MI325X** GPUs for the **Qwen 235-billion-parameter** mixture-of-experts model. This model strategically activates only 22 billion parameters for each inference request, distributing computation across eight selected experts from a pool of 128.
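The routing behavior described here can be illustrated with a minimal Python sketch. It assumes a softmax gate over the selected experts' scores, which is a common mixture-of-experts design but is not confirmed by the source for this model. The names `route_token` and `expert_load` and the random gate scores are hypothetical; only the 128-expert pool, the eight selected experts, and the 22-of-235-billion parameter figures come from the article.

```python
import math
import random

NUM_EXPERTS = 128  # expert pool size stated in the article
TOP_K = 8          # experts selected per request, per the article

def route_token(gate_logits, k=TOP_K):
    """Return (expert_ids, weights) for the k highest-scoring experts."""
    top = sorted(range(len(gate_logits)),
                 key=lambda i: gate_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    peak = max(gate_logits[i] for i in top)
    exps = [math.exp(gate_logits[i] - peak) for i in top]
    total = sum(exps)
    return top, [e / total for e in exps]

def expert_load(num_tokens, seed=0):
    """Count how many tokens land on each expert under random gate scores."""
    rng = random.Random(seed)
    load = [0] * NUM_EXPERTS
    for _ in range(num_tokens):
        logits = [rng.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
        expert_ids, _ = route_token(logits)
        for i in expert_ids:
            load[i] += 1
    return load

# Share of parameters active per request: 22B of 235B, roughly 9.4%.
active_fraction = 22 / 235
```

With random scores the per-expert load comes out roughly even. A learned gate can send far more tokens to some experts than others, which is the kind of load imbalance that dynamic routing can produce.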

This mixture-of-experts architecture introduces unique challenges, particularly in achieving computational efficiency while managing dynamic routing. Such routing can lead to load imbalances and communication overhead that generic GPU deployments struggle to handle. To address these issues, the optimization team adjusted the parallelization strategy to balance data and tensor parallelism, ultimately splitting each eight-GPU server into two data-parallel replicas, each using four-way tensor and expert parallelism. That choice directly influenced the economic outcomes.
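As a rough illustration of that layout, the sketch below partitions one eight-GPU server into two replicas of four GPUs each. It is a sketch under stated assumptions, not DigitalOcean's scheduler: the helper names `build_replicas` and `assign_request` are hypothetical, contiguous GPU indices are assumed to share the fastest links, and the round-robin request policy is a placeholder.

```python
def build_replicas(num_gpus=8, tp_size=4):
    """Split GPU indices 0..num_gpus-1 into replicas of tp_size GPUs each."""
    if tp_size <= 0 or num_gpus % tp_size != 0:
        raise ValueError("num_gpus must be a positive multiple of tp_size")
    return [list(range(start, start + tp_size))
            for start in range(0, num_gpus, tp_size)]

def assign_request(request_id, replicas):
    """Pick a replica for a request (hypothetical round-robin policy)."""
    return request_id % len(replicas)

# One eight-GPU server, four-way tensor/expert parallelism per replica.
replicas = build_replicas()
# replicas == [[0, 1, 2, 3], [4, 5, 6, 7]]
```

Each replica holds a full copy of the model sharded four ways, so the two replicas serve requests independently and only the four GPUs inside a replica need to exchange activations.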

By reducing tensor parallelism from eight-way to four-way, each GPU was able to perform more local computation, thereby decreasing communication overhead while meeting the latency requirements for initial token generation and sustained output. Additionally, the team applied **FP8 quantization**, which reduced the memory footprint and bandwidth needs without sacrificing accuracy.
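The trade-off can be made concrete with some back-of-the-envelope arithmetic. The sketch below rests on several assumptions not stated in the source: a 16-bit baseline (2 bytes per parameter), every weight quantized to 1 byte under FP8, even sharding across GPUs, and a ring all-reduce for tensor-parallel communication. It ignores KV cache, activations, and any layers left unquantized, and the function names are hypothetical.

```python
TOTAL_PARAMS = 235e9  # total parameter count from the article

def weight_gb(num_params, bytes_per_param):
    """Weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

def per_gpu_weight_gb(num_params, bytes_per_param, shard_ways):
    """Weights held by each GPU when sharded evenly shard_ways ways."""
    return weight_gb(num_params, bytes_per_param) / shard_ways

def ring_allreduce_factor(tp_size):
    """Data each GPU sends in a ring all-reduce, as a multiple of the payload."""
    return 2 * (tp_size - 1) / tp_size

sixteen_bit_total = weight_gb(TOTAL_PARAMS, 2)  # 470.0 GB
fp8_total = weight_gb(TOTAL_PARAMS, 1)          # 235.0 GB

# FP8 weights per GPU: eight-way vs four-way sharding.
fp8_per_gpu_tp8 = per_gpu_weight_gb(TOTAL_PARAMS, 1, 8)  # 29.375 GB
fp8_per_gpu_tp4 = per_gpu_weight_gb(TOTAL_PARAMS, 1, 4)  # 58.75 GB

# Per-GPU all-reduce traffic relative to payload size.
traffic_tp8 = ring_allreduce_factor(8)  # 1.75
traffic_tp4 = ring_allreduce_factor(4)  # 1.5
```

Under these assumptions, four-way sharding doubles the weights each GPU must hold, which is where the smaller FP8 footprint helps, while each synchronization step involves fewer participants and less traffic per GPU.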

Character.ai’s deployment managed to uphold strict latency targets, with p90 time-to-first-token and time-per-output-token remaining within defined thresholds, even as request throughput doubled. This equilibrium between latency and throughput underscores the central challenge in production inference, which requires systems to accommodate many concurrent users without compromising individual response times.
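For readers unfamiliar with percentile-based latency targets, a minimal Python sketch of a p90 check follows. The article does not publish the actual thresholds, so the limits passed to `meets_slo` are placeholders, and both function names are hypothetical. The percentile uses the nearest-rank definition; monitoring systems often use interpolated variants instead.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    if not samples:
        raise ValueError("samples must be non-empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]

def meets_slo(ttft_ms, tpot_ms, ttft_limit_ms, tpot_limit_ms):
    """True if p90 time-to-first-token and p90 time-per-output-token are within limits."""
    return (percentile(ttft_ms, 90) <= ttft_limit_ms
            and percentile(tpot_ms, 90) <= tpot_limit_ms)
```

A p90 target tolerates a slow tail: if nine of ten requests return their first token in 100 ms and one takes 900 ms, the p90 is still 100 ms. That is why throughput tuning has to be checked against the percentile rather than the average.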

DigitalOcean credits its success largely to co-optimizations across the stack involving Character.ai and AMD, including enhancements to **ROCm**, **vLLM**, and AMD’s **AITER**, a library designed for high-performance AI operators and kernels specifically for AMD Instinct GPUs. AMD has focused on overcoming software stack limitations that have historically hindered enterprise adoption by investing in ROCm and optimizing vLLM with AITER. These efforts have led to kernel improvements, efficient FP8 execution paths, and topology-aware GPU allocation that aligns workload requirements with hardware capabilities.

The **MI300X** and **MI325X** accelerators offer technical advantages beyond mere pricing; the MI325X provides 256 gigabytes of high-bandwidth memory, significantly outpacing the 141 gigabytes found in competing platforms, and boasts 1.3 times higher memory bandwidth. This capacity is particularly beneficial for inference workloads that require processing large context windows or running memory-intensive mixture-of-experts models, reducing the necessity for model sharding across multiple accelerators.
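A quick calculation shows why per-accelerator memory affects sharding. The sketch below assumes roughly 235 GB of FP8 weights (1 byte per parameter for the 235-billion-parameter model, an assumption rather than a figure from the source) and a hypothetical `reserved_fraction` of memory set aside for KV cache and activations. The 256 GB and 141 GB capacities are the figures quoted in the article.

```python
import math

def min_accelerators(weights_gb, hbm_gb, reserved_fraction=0.0):
    """Fewest accelerators whose usable memory can hold the model weights."""
    if not 0 <= reserved_fraction < 1:
        raise ValueError("reserved_fraction must be in [0, 1)")
    usable_gb = hbm_gb * (1 - reserved_fraction)
    return math.ceil(weights_gb / usable_gb)

FP8_WEIGHTS_GB = 235  # assumed: 235B parameters at 1 byte each

# No headroom reserved: weights only.
large_mem = min_accelerators(FP8_WEIGHTS_GB, 256)  # 1
small_mem = min_accelerators(FP8_WEIGHTS_GB, 141)  # 2

# Reserving 30% of memory for KV cache and activations (hypothetical value).
large_mem_reserved = min_accelerators(FP8_WEIGHTS_GB, 256, 0.3)  # 2
small_mem_reserved = min_accelerators(FP8_WEIGHTS_GB, 141, 0.3)  # 3
```

These counts are lower bounds on memory alone. Production deployments also shard for throughput and latency reasons, so the number of accelerators actually used can be higher.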

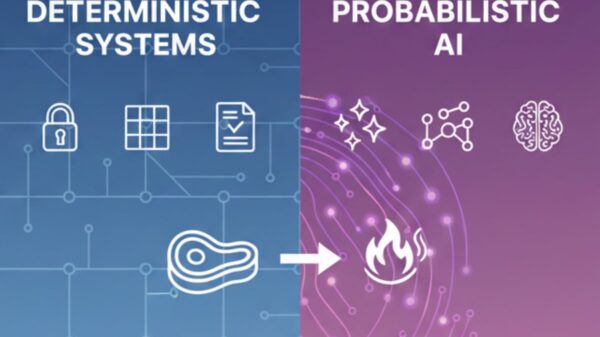

As cloud providers face economic pressures to diversify beyond single-vendor GPU strategies, DigitalOcean’s deployment illustrates that alternative accelerators can deliver performance on par with established solutions when paired with platform-level optimization. This potential shift may influence procurement decisions as enterprises seek cost-effective solutions for inference infrastructure.

Ultimately, the successful implementation of these technologies reveals that GPU selection alone does not dictate inference performance. DigitalOcean employed a comprehensive strategy involving optimizations across multiple system layers. The platform utilized **DigitalOcean Kubernetes** for orchestration, employing topology-aware scheduling to position GPU workloads to minimize communication latency. The team also cached model weights on network file storage, which reduced model loading time by 10-15%.

These infrastructure choices compound, and they improve scalability during traffic spikes. Topology-aware placement minimizes inter-GPU communication overhead during distributed inference, while hardware-aware scheduling assigns each workload to the accelerators best suited to it. Collectively, these optimizations resulted in a twofold increase in throughput and a 91 percent improvement compared to unoptimized configurations.

This approach stands in stark contrast to cloud platforms that offer GPU availability without integrated optimization. While hyperscale providers maintain vast compute catalogs, they typically leave performance tuning to customers. DigitalOcean’s strategy is aimed at its more than 640,000 digital-native enterprise customers, who value operational simplicity over configuration flexibility, and it positions inference optimization as a managed service rather than a do-it-yourself endeavor.

The implications of this deployment extend beyond the immediate results. As organizations evaluate inference platforms, they must consider not only GPU specifications but also the integrated optimization capabilities that ultimately dictate production performance. This AMD accelerator validation—showing production-grade performance across diverse hardware—serves to mitigate procurement risks as enterprises seek alternatives to concentrated GPU markets. In this evolving landscape, platform providers that prioritize optimization tools and customer-specific tuning are likely to differentiate themselves by focusing on outcomes rather than purely on infrastructure specs or pricing.
