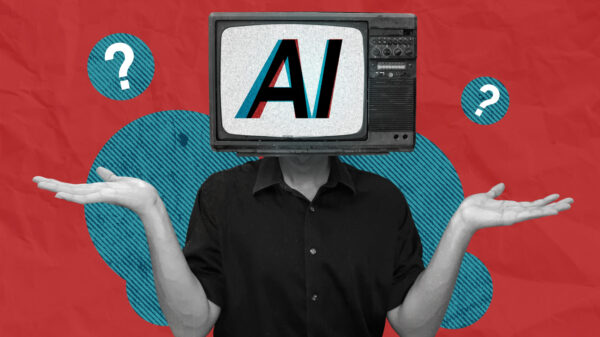

Artificial intelligence (AI) is swiftly becoming an integral component of higher education. Students increasingly use AI tools for purposes that range from productive to potentially problematic, often without clear faculty guidance. This shift changes the pivotal question: it is no longer whether AI should be incorporated into academic courses, but how it can be used effectively to improve learning outcomes.

One educator recently designed an introductory marketing project that employed AI as a collaborative thinking partner, aiming to scaffold creativity, strategic reasoning, and sustainability-focused decision-making. The project was intended not to replace student effort but to amplify students’ thinking, freeing them to engage in higher-level reasoning.

Before integrating AI into assignments, faculty members are encouraged to ask: “What do I want students to learn?” This foundational question aligns with frameworks like TPACK (Technological, Pedagogical and Content Knowledge), emphasizing that technology should serve educational objectives. The inquiry should not be about “How can I use AI?” but rather “Where can AI genuinely enhance student engagement with content and skills?” In the context of the marketing project, the focus was on fostering creativity and strategic thinking through AI collaboration.

To frame AI as a partner rather than an answer engine, the instructor required students to interact with AI iteratively and to refine its suggestions using their own judgment. This approach encouraged students to engage in a dialogue with the AI, emulating the collaborative dynamics they would encounter in professional settings. To support this process, a short instructional video outlined effective prompt engineering strategies. Students learned to define the AI’s role, provide context, request specific outputs, and refine prompts based on the AI’s responses. This skill set mirrors essential competencies in strategic communication and problem-solving.
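The prompt habits described above (define a role, give context, request a specific output, then refine) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `PromptSpec`, `build_prompt`, and `refine` are hypothetical names, the example wording is invented, and no AI service is called. It is not the instructor's actual material.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PromptSpec:
    """Hypothetical container for the three parts of a structured prompt."""
    role: str      # who the AI should act as
    context: str   # scenario details the AI should work within
    request: str   # the specific output being asked for


def build_prompt(spec: PromptSpec) -> str:
    """Assemble the parts into one prompt string, one labeled line per part."""
    return "\n".join([
        f"Role: {spec.role}",
        f"Context: {spec.context}",
        f"Request: {spec.request}",
    ])


def refine(spec: PromptSpec, note: str) -> PromptSpec:
    """Return a new spec whose request carries the student's revision note."""
    return replace(spec, request=f"{spec.request} Revision: {note}")


# Illustrative values only; the article does not give the actual scenario text.
first = PromptSpec(
    role="You are a marketing strategist.",
    context="A fictional company with stated sustainability goals.",
    request="Suggest three key messages for the target audience.",
)
second = refine(first, "Make the messages more specific to the target market.")

print(build_prompt(first))
print()
print(build_prompt(second))
```

Keeping each version of the prompt as a separate value, rather than overwriting it, leaves a record of what was asked and how it changed, which is the kind of trail a later reflection can draw on.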

Another critical aspect of the project was the use of a rich, purposeful scenario that detailed a fictional company’s mission, values, target market, and sustainability goals. This scenario not only anchored the AI’s responses but also ensured that the student outputs were relevant and tailored to specific business needs. It obliged students to make strategic decisions within constraints, a key competency in marketing practice. Using the scenario and their AI interactions, students produced several deliverables, including an executive summary, target audience analysis, marketing objectives, strategies and channels, key messaging, and reflections on their processes.

The reflection component proved particularly enlightening, allowing students to articulate how AI influenced their thought processes, where it enhanced their work, and where human judgment remained essential. These reflections illuminated the distinction between genuine learning and superficial technology use, reinforcing the notion that AI serves to support, not supplant, human decision-making.

As AI becomes more prevalent, traditional assessment methods face scrutiny, especially when students can generate adequate essays with ease. This reality compels faculty to rethink how students demonstrate learning. Projects, reflections, and iterative tasks can assess skills that are less likely to be outsourced entirely to AI, such as creativity and strategic thinking. Updating assessment rubrics so that they explicitly name the skills being assessed is essential, especially as working with AI becomes integral to the learning process. In this project, the instructor discovered a misalignment between pedagogical intentions and assessment design: only one student followed the structured process for prompt creation. This finding will inform iterative improvements for future classes.

Ultimately, while the final deliverables showcased students’ creative and strategic thinking, the reflection process provided deeper insight into their learning. By analyzing their interactions with AI (what they asked, how they refined prompts, and which suggestions they accepted), students were able to slow down and make their reasoning visible. This reflective practice not only distinguished authentic learning from superficial engagement but also emphasized the importance of human judgment in an AI-driven landscape.

The emergence of AI in education presents both challenges and opportunities. When used uncritically, AI can undermine learning; however, when employed thoughtfully, it can enhance engagement in complex and strategic tasks. Moving forward, the focus for educators should be on the intentional alignment of AI applications with learning objectives, authentic skills, and meaningful assessments. When these elements converge, AI can transform from a potential threat to academic integrity into a powerful catalyst for deeper learning.
