Mumbai: India’s push for digital public infrastructure is generating significant attention as the country contemplates incorporating artificial intelligence (AI) into governance. This evolution necessitates a robust public foundation designed with inclusivity in mind, particularly for citizens who do not fit the “ideal user” mold. Advocates are calling for an “Inclusivity Stack,” a framework comprising common standards, foundational components, datasets, audit methodologies, and procurement frameworks aimed at ensuring that digital services are inclusive and assistive-first.

This proposed framework is not merely a matter of charity or specialized requests; it represents a fundamental aspect of digital dignity. The right to access public technology for individuals with disabilities is crucial in affirming their citizenship within a digital state. India’s legal frameworks, including the Rights of Persons with Disabilities (RPwD) Act, 2016, emphasize principles of dignity, non-discrimination, and accessibility. This legislation establishes accessibility as a foundational component of governance, rather than a mere optional addition.

Despite these legal foundations, the design of daily digital services often assumes a “normal” citizen with stable connectivity, perfect vision, and high literacy levels. Such assumptions can lead to significant exclusion, manifesting in abandoned applications, repeated office visits, and increased dependence on intermediaries. As AI systems evolve, there is a risk they may exacerbate existing disparities by penalizing edge cases, unless inclusive governance practices are adopted that prioritize equitable access.

The costs of neglecting inclusivity in digital design can be profound. Many systems are engineered with basic defaults that ignore the needs of assistive technology users. For example, rate limits and timeouts might assume users can maintain continuous attention, while authentication methods such as CAPTCHAs can be inaccessible to those with visual impairments. As a result, systems optimized for efficiency may inadvertently exclude many individuals from accessing essential services.

Inclusive AI governance, therefore, cannot be reduced to merely making interfaces accessible. While accessibility is necessary, it is not sufficient. The real challenge lies in ensuring that workflows accommodate diverse user experiences. Public services should reflect the complexity of human interaction, acknowledging that deviations from “normal” usage are legitimate expressions of diversity rather than user errors.

India already maintains guidelines for government websites that align with global accessibility standards, including WCAG 2.1 Level AA. These guidelines should serve as the baseline for service design in the AI era, rather than the final destination. The proposed Inclusivity Stack bears resemblance to India’s earlier digital initiatives, providing a public-interest framework that not only facilitates desired behaviors but also minimizes adverse outcomes.

The stack should consist of three key layers. The first is an experience layer featuring certified, reusable user interface components that work seamlessly with assistive technologies. The second is a governance layer that incorporates inclusion audits into AI impact assessments. The third is a layer of shared datasets and public models designed from the perspective of disabled and neurodivergent users.

To achieve these goals, policymakers must reimagine procurement as a powerful tool for promoting inclusion. The government is one of the largest purchasers of digital systems, so its procurement norms can shape industry standards. By mandating compliance with existing accessibility standards, the government can encourage vendors to prioritize inclusion from the outset, thus avoiding retrofitting under pressure.

Moreover, procurement checklists should explicitly address the diverse needs of assistive technology users. Users may rely on patterns or workflows that appear anomalous to standard risk assessment engines. Recognizing and protecting these patterns legally will be essential in fostering an environment where inclusion is a state objective.

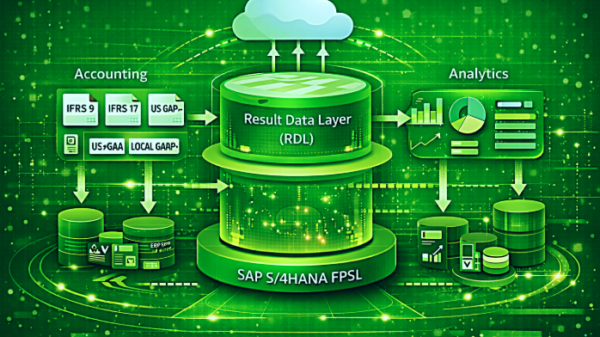

Inadequate representation of disabled users in AI training data can lead to systemic exclusion, transforming vital access points into failures of governance. The Inclusivity Stack must leverage government-backed datasets focused on disability and intersectional exclusion, gathered responsibly with privacy-preserving methods. This data can underpin transformative public models that serve as public goods, ensuring continuous improvement and evaluation to meet diverse user needs.

India positions itself as a leader in the global discourse surrounding “AI for good,” with the upcoming India AI Impact Summit 2026 centering on dignity and inclusivity. As policymakers move beyond rhetoric, the establishment of an Inclusivity Stack could play a pivotal role in embedding these principles into the governance framework. Doing so would ensure that AI’s integration into public services leads to a more inclusive society, transforming the notion of rights into a lived reality for all citizens.
