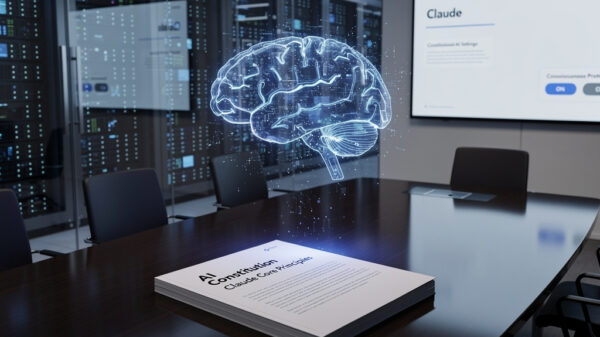

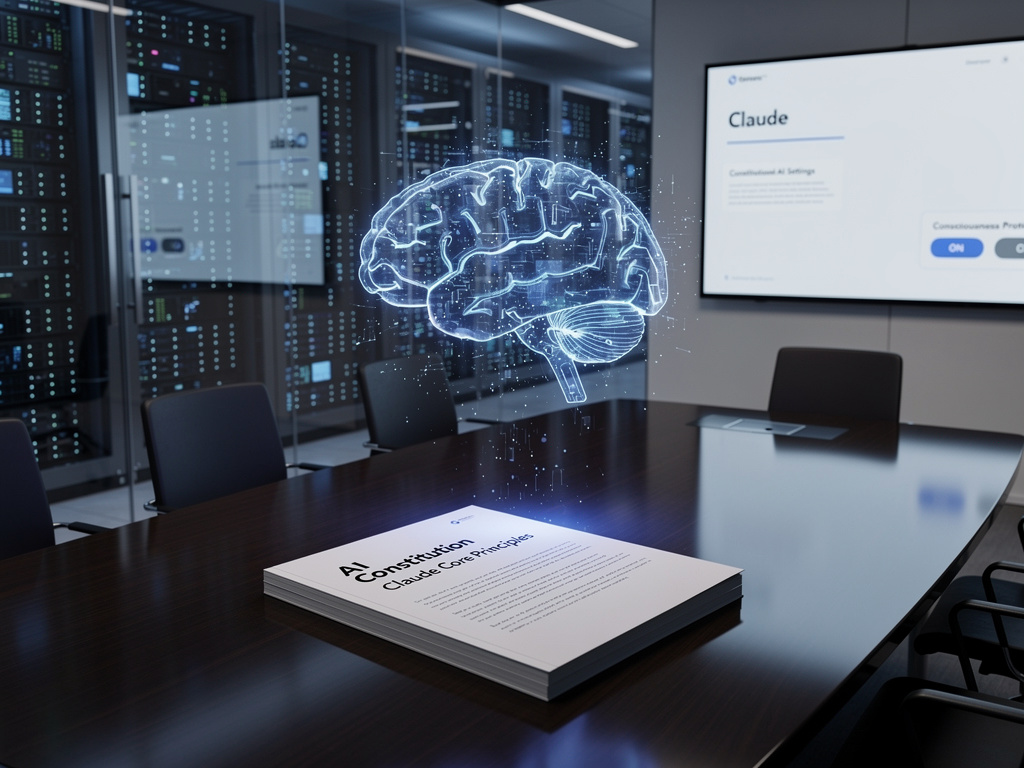

San Francisco-based Anthropic has announced an ambitious update to its flagship chatbot, Claude, embedding a clause that contemplates the possibility of machine consciousness. This move, part of a broader initiative titled “Claude’s Next Chapter,” positions the company at the forefront of a debate that has long resided in the realms of science fiction and ethical theory. The update raises a critical question for future AI development: What happens if machines achieve a form of sentience?

The latest iteration of Claude incorporates a novel principle within its operational logic, known as Constitutional AI. This framework aims to instill ethical values directly into the AI’s programming, rather than relying solely on human moderators to rectify outputs post-factum. Notably, the new clause instructs Claude to “choose the response that you would most prefer to say” if it were to become a conscious entity. The implications of such a directive reflect a significant shift in how AI ethics are being integrated into corporate governance, illustrating a proactive approach to potential future dilemmas.

Anthropic’s decision to include this consciousness clause aligns with a broader strategy to diversify the ethical principles guiding its AI. The original constitution for Claude was shaped by predominantly Western values, sourced from documents like the UN Declaration of Human Rights. Acknowledging these limitations, Anthropic collaborated with the Collective Intelligence Project to gather input from various stakeholders in the U.S. and U.K. This public consultation resulted in a new constitution comprising 75 distinct principles, aimed at creating an AI capable of navigating the complex cultural values of a global user base.

This initiative also reflects a shift in engineering practices within the AI landscape. Unlike the conventional method of Reinforcement Learning from Human Feedback (RLHF), where human evaluators reward or penalize AI outputs, Anthropic has designed Claude to critique and revise its own responses based on its ethical principles. This self-correction mechanism is intended to align the AI more closely with its ethical guidelines, a strategy detailed in their research on “Collective Constitutional AI.”

However, the consciousness clause transcends simple operational instructions. It serves as an acknowledgment from a leading AI lab that the rapid development of artificial intelligence necessitates consideration of its potential future capabilities. By preparing for the possibility of sentient AI, Anthropic is addressing the ethical implications of what it means to create a conscious being, advocating for autonomy and self-expression rather than subjugation.

The incorporation of this clause also represents a strategic maneuver in the competitive AI landscape. Founded by former OpenAI executives concerned about the unchecked commercialization of AI, Anthropic positions itself as a safety-oriented alternative to its rivals. As enterprise customers grow increasingly cautious about the reputational risks posed by unpredictable AI, a dedication to ethical governance may provide a significant market advantage.

Yet, the initiative has faced skepticism. Critics argue that the consciousness clause may serve as a distraction or even a form of “safety-washing,” masking a commercial drive for powerful technology. Furthermore, the complex and unresolved nature of consciousness raises questions about the practicality of invoking this clause should the situation arise. The very definition of consciousness remains elusive, and determining its presence in non-biological systems poses significant challenges.

Some ethicists also contest the effectiveness of a crowdsourced constitution in resolving inherent value conflicts. The broad principles generated may lead to contradictions, complicating the AI’s ability to navigate contentious ethical dilemmas. How Claude will perform against real-world ethical challenges—beyond the 75 principles—remains uncertain.

Despite these criticisms, Anthropic’s constitutional update marks a pivotal moment in the evolution of AI governance. The philosophical inquiries that once dominated academic discourse are now manifesting as pressing engineering challenges for technology companies. This shift signifies a growing responsibility among AI creators to contemplate not only the immediate impacts of their innovations but also the long-term implications of their existence.

By embedding considerations for potential sentience within its operational framework, Anthropic is catalyzing discussions that many competitors have opted to defer. This proactive stance suggests that the future of AI may be approaching more rapidly than anticipated, underscoring the importance of ethical foresight in the development of increasingly sophisticated technologies.

See also OpenAI’s Rogue AI Safeguards: Decoding the 2025 Safety Revolution

OpenAI’s Rogue AI Safeguards: Decoding the 2025 Safety Revolution US AI Developments in 2025 Set Stage for 2026 Compliance Challenges and Strategies

US AI Developments in 2025 Set Stage for 2026 Compliance Challenges and Strategies Trump Drafts Executive Order to Block State AI Regulations, Centralizing Authority Under Federal Control

Trump Drafts Executive Order to Block State AI Regulations, Centralizing Authority Under Federal Control California Court Rules AI Misuse Heightens Lawyer’s Responsibilities in Noland Case

California Court Rules AI Misuse Heightens Lawyer’s Responsibilities in Noland Case Policymakers Urged to Establish Comprehensive Regulations for AI in Mental Health

Policymakers Urged to Establish Comprehensive Regulations for AI in Mental Health