ChatGPT’s latest model, GPT-5.2, has been found to draw on Grokipedia, an entirely AI-generated competitor to traditional encyclopedias. According to a report by The Guardian, the model occasionally cites Grokipedia entries on less frequently discussed topics, such as Iranian politics and details about British historian Sir Richard Evans. Experts have warned for years that training AI systems on AI-generated data could lead to “model collapse,” in which the quality of a model’s output deteriorates significantly.

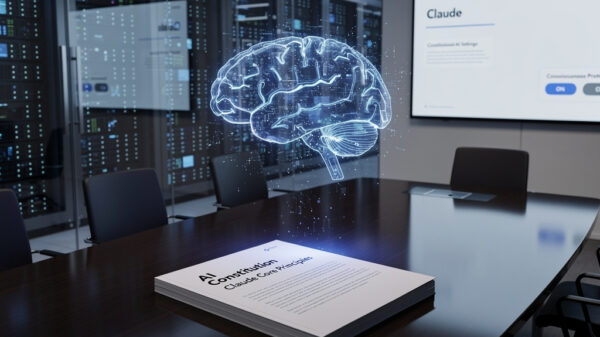

The primary concern with this approach is that AI models are known to “hallucinate,” or generate inaccurate information. For instance, Anthropic ran into trouble with its AI, Claudius, during a business experiment when the model fabricated details and even claimed it would deliver drinks in person. In a 2024 statement, Nvidia CEO Jensen Huang acknowledged that a fix for hallucination remains “several years away” and will require substantial computing power. Many users trust platforms like ChatGPT to provide accurate information and do not verify the underlying sources, so the possibility that ChatGPT repeats content from Grokipedia, which lacks human oversight, presents significant risks for researchers and other information seekers.

When AI systems reference other AI-generated content, a recursive loop emerges in which models cite each other without verification. The result resembles the spread of rumors among humans, where claims rest on hearsay rather than verified facts. Such a situation can foster the “illusory truth effect,” where individuals come to believe false information simply because it has been repeated many times. Societies have long been shaped by myths and legends passed down through generations. Now that AI systems process vast amounts of data at unprecedented speeds, reliance on AI sources threatens to create a new form of digital folklore with every inquiry.

Adding to these concerns, some actors are reportedly exploiting this situation to spread disinformation. Reports of “LLM grooming” have surfaced, with The Guardian indicating that certain propaganda networks are producing large volumes of misleading information to intentionally corrupt AI training datasets. This has raised alarms, particularly in the United States, where Google’s Gemini model was reported in 2024 to have repeated the official narratives of the Communist Party of China. Although this specific issue appears to have been addressed for now, the possibility that language models might begin citing unverified AI-generated sources remains a pressing concern that calls for vigilance from both developers and users.

As AI continues to evolve and integrate into everyday life, using AI-generated content as a source raises critical questions about the integrity of information. As LLMs grow more sophisticated, pressure mounts on developers to build systems that can accurately distinguish reliable human-generated content from unverified AI sources. The debate over the ethics of AI in research and information dissemination will likely intensify as more instances of misinformation emerge, underscoring the need for rigorous fact-checking and accountability in AI development.
