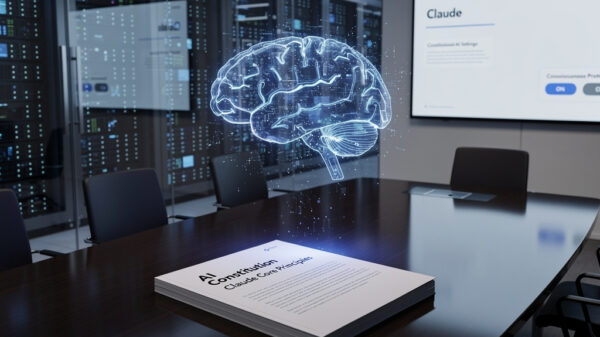

Dario Amodei, CEO of AI company Anthropic, recently highlighted the pressing issues surrounding artificial intelligence during an appearance on NBC News’ “Top Story.” In an interview with Tom Llamas, Amodei discussed his new essay, titled “The Adolescence of Technology: Confronting and Overcoming the Risks of Powerful A.I.,” which warns of various risks associated with advanced AI technologies. He emphasized the urgent need for regulatory measures to mitigate potential harms that powerful artificial intelligence could cause to society.

Amodei’s commentary comes amid a broader conversation about the implications of AI systems, particularly as they become increasingly integrated into everyday life. The essay outlines concerns regarding unchecked AI development, including ethical dilemmas, misinformation, and privacy issues. Amodei’s focus on these challenges underscores the complexities of balancing innovation with safety, urging policymakers to consider frameworks that can effectively govern AI technologies.

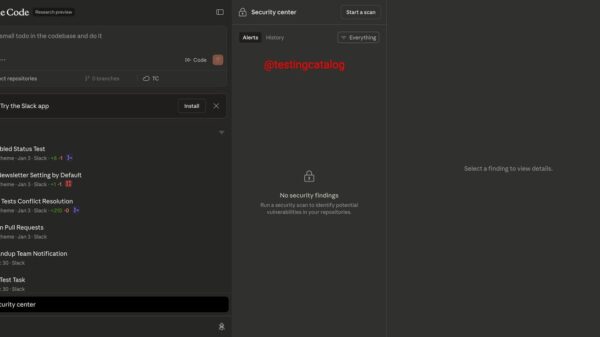

As AI capabilities continue to expand, the question of how best to implement regulations becomes more critical. Amodei advocates for proactive measures rather than reactive responses, suggesting that a collaborative approach involving technologists, ethicists, and legislators is essential. His perspective reflects a growing consensus among industry leaders that without proper oversight, AI could exacerbate existing societal inequalities and pose significant risks to both individuals and communities.

In the context of these discussions, other major tech firms are also facing scrutiny over their platforms and AI products. For instance, TikTok recently faced allegations of censoring content related to U.S. Immigration and Customs Enforcement (ICE), which it attributed to technical outages. The incident highlights the challenges social media platforms face in moderating content and meeting user expectations amid growing regulatory pressure.

Meanwhile, the European Union is intensifying its investigations into Elon Musk's AI chatbot, Grok, particularly its role in generating sexualized deepfakes. EU regulators aim to address the ethical implications of AI technologies and their capacity to produce harmful content. The case further underscores the need for companies to implement robust safeguards and accountability measures.

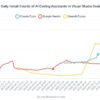

As AI technology proliferates, reports indicate that demand for computing power is driving shortages of essential components such as computer memory (RAM). This trend poses challenges for tech companies striving to keep pace with consumer demand while navigating supply chain disruptions. Growing reliance on AI applications is accelerating the need for more powerful computing hardware, raising concerns about the sustainability of current manufacturing processes.

Amodei's essay serves as a clarion call for a comprehensive dialogue on AI regulation, a conversation that grows more urgent as advances continue to reshape industries and societal norms. The implications of AI are vast, reaching from creative expression, as with AI-generated music artists such as Sienna Rose, to practical concerns like digital decluttering and subscription management.

As we move forward, the significance of establishing a regulatory framework that fosters innovation while safeguarding public interest cannot be overstated. Amodei’s insights reflect a growing recognition that technology’s trajectory has far-reaching consequences, and addressing these issues will require collaboration across various sectors. The ongoing discourse on AI will likely shape the future landscape of technology, governance, and societal well-being for years to come.
