The artificial intelligence industry is undergoing a transformative shift as its focus moves from “pre-training scaling” to “inference-time scaling,” a transition that began to gain prominence in January 2026. The new approach lets AI models reason more deeply while answering a query, significantly improving their performance on complex tasks in logic, mathematics, and coding. By letting models “think” longer and correct themselves before delivering an answer, companies such as OpenAI and DeepSeek have shown that smaller, more efficient models can outperform larger conventional models by spending more compute at the moment a question is asked.

This evolution marks the end of the “data wall” era, easing concerns that a shortage of new human-generated text would stall AI progress. As firms optimize inference-time computation, the economics of AI are shifting away from the high upfront cost of training on massive datasets toward the ongoing cost of running reasoning-capable AI agents. This realignment is fundamentally reshaping the hierarchy of the AI sector.

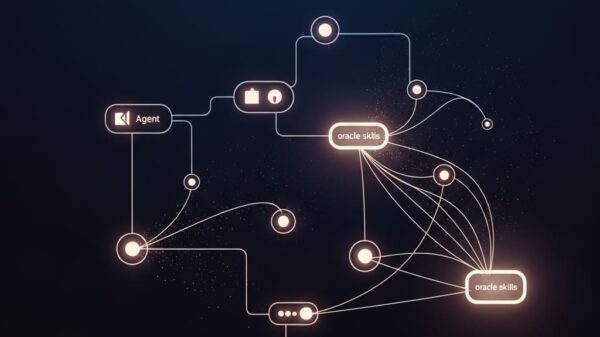

At the core of this breakthrough are “Chain of Thought” (CoT) processing and search algorithms such as Monte Carlo Tree Search (MCTS). Traditional models answer in a single fast pass of next-word prediction; reasoning-focused models instead build an internal scratchpad on which they deliberate before answering. OpenAI’s o3-pro, for instance, has set a new standard for reasoning performance by using hidden reasoning traces to work out multi-step solutions. If it detects an inconsistency, the model can backtrack, much like a mathematician revisiting a proof. The shift mirrors psychologist Daniel Kahneman’s distinction between “System 1” and “System 2” thinking: a move from fast, intuitive responses to slower, deliberate, logical reasoning.
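The draft, check, and backtrack loop described above can be illustrated with an ordinary depth-first search. This is a minimal sketch under loud assumptions, not how o3-pro or any production model works: the integer “reasoning steps,” the `solve` function, and the numeric bound used as a stand-in consistency check are all hypothetical. Real systems search over generated text and score it with learned models, and MCTS is a more elaborate, sampling-based alternative to the plain backtracking shown here.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical toy "reasoning steps": each is a named operation on an integer.
STEPS: List[Tuple[str, Callable[[int], int]]] = [
    ("add 3", lambda x: x + 3),
    ("double", lambda x: x * 2),
    ("subtract 1", lambda x: x - 1),
]


def solve(state: int, target: int, depth: int, bound: int = 100) -> Optional[List[str]]:
    """Depth-first search that backtracks out of dead ends.

    Returns the names of the steps that turn `state` into `target` in at most
    `depth` steps, or None if no such chain exists.
    """
    if state == target:
        return []  # goal reached: nothing more to do
    if depth == 0 or not 0 <= state <= bound:
        # Out of budget, or the hypothetical consistency check failed:
        # report failure so the caller backtracks and tries another step.
        return None
    for name, op in STEPS:
        rest = solve(op(state), target, depth - 1, bound)
        if rest is not None:
            return [name] + rest  # keep the first chain that works
        # Otherwise discard this branch and try the next candidate step.
    return None


if __name__ == "__main__":
    print(solve(2, 9, depth=4))  # ['add 3', 'double', 'subtract 1']
```

The search fully explores and abandons the “add 3, add 3, …” branches before it finds the working chain; cutting such dead ends earlier is the job of the step-level scorers described below.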

To refine this reasoning process, labs are now implementing Process Reward Models (PRMs), which score each step of the reasoning chain rather than only the final answer. Step-level feedback lets the system discard unproductive paths early, which improves efficiency and reduces the risk of logical errors or “hallucinations.”

Another significant advance came from the Chinese lab DeepSeek, whose R1 model was trained with Group Relative Policy Optimization (GRPO), a reinforcement learning method that judges each sampled answer relative to a group of answers to the same prompt. This “pure RL” approach let the model learn reasoning capabilities without extensive human-labeled data. Such techniques have helped bring high-level reasoning to commercial products, as seen in the recent launch of Liquid AI’s LFM2.5-1.2B-Thinking, which can carry out deep reasoning tasks entirely on-device, even within the memory limits of a smartphone.
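Both training ideas described above can be sketched in a few lines. This is an illustrative sketch, not DeepSeek’s or any lab’s implementation. The hypothetical `surviving_chains` stands in for PRM-based pruning and assumes the per-step scores were already produced by some process reward model; the scores in the example are invented for illustration. The hypothetical `grpo_advantages` shows the group-relative normalization at the heart of GRPO: each sampled answer’s reward minus the group mean, divided by the group standard deviation. Using the population standard deviation and a small `eps` guard are assumptions.

```python
from statistics import fmean, pstdev
from typing import Dict, List, Sequence


def surviving_chains(step_scores: Dict[str, Sequence[float]], threshold: float) -> List[str]:
    """Keep the reasoning chains whose every step a PRM scores >= threshold.

    Each value is the list of per-step scores for one candidate chain. all()
    stops at the first weak step, mirroring how a PRM lets a system abandon
    a chain as soon as one step looks wrong.
    """
    return [name for name, scores in step_scores.items()
            if all(s >= threshold for s in scores)]


def grpo_advantages(rewards: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Group-relative advantages for answers sampled from the same prompt.

    Rewards above the group mean get positive advantages (reinforced), rewards
    below it get negative ones, so no separate value model is needed.
    """
    mean, std = fmean(rewards), pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Hypothetical per-step PRM scores for three candidate chains.
    chains = {
        "A": [0.9, 0.8, 0.85],
        "B": [0.9, 0.2, 0.95],  # flawed middle step despite a confident ending
        "C": [0.7, 0.75, 0.6],
    }
    print(surviving_chains(chains, threshold=0.5))  # ['A', 'C']

    # Four answers to one prompt, rewarded 1.0 if correct and 0.0 if not.
    print(grpo_advantages([1.0, 0.0, 0.0, 1.0]))  # roughly [1.0, -1.0, -1.0, 1.0]
```

Chain B would pass an outcome-only check that looks at its confident final step, but the step-level filter drops it at its second step. In GRPO the advantages then weight a policy-gradient update; that update, and details such as the clipped objective and KL regularization, are omitted here.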

The AI research community has responded to these developments by reevaluating the field’s limits. Researchers are now exploring “inference scaling laws,” which indicate that each tenfold increase in inference-time compute is associated with a significant rise in performance on competitive benchmarks. This has reset the competitive landscape, putting optimized inference ahead of the sheer size of training datasets.
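One simple mechanism by which extra inference compute can raise accuracy is to sample several answers and take a majority vote (often called self-consistency). The toy model below is an illustration under strong assumptions, not the inference scaling laws themselves: it assumes each sample is independently correct with the same probability `p`, and it lumps all wrong answers into a single “wrong” vote. The function name `majority_vote_accuracy` is hypothetical.

```python
from math import comb


def majority_vote_accuracy(p: float, n: int) -> float:
    """Probability that a strict majority of n independent samples is correct.

    Binomial model: each sample is correct with probability p, and every
    incorrect sample counts as one shared "wrong" vote.
    """
    if n < 1 or n % 2 == 0:
        raise ValueError("n must be a positive odd number so a strict majority exists")
    need = n // 2 + 1  # smallest number of correct votes that wins
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(need, n + 1))


if __name__ == "__main__":
    # Spend more inference compute by drawing more samples per question.
    for n in (1, 3, 5, 11, 31):
        print(f"n={n:2d}  accuracy={majority_vote_accuracy(0.6, n):.3f}")
```

With `p = 0.6`, accuracy rises from 0.600 with one sample to 0.648 with three and about 0.683 with five, each additional pair of samples buying a smaller gain. The same binary model also shows the limit of voting: if `p` is below 0.5, adding samples makes the majority less likely to be right, so in this model extra compute helps only when the model is already more often right than wrong on a given question.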

The Inference Flip

The rise of inference-heavy workloads has catalyzed what analysts are calling the “Inference Flip,” with global expenditure on AI inference surpassing that on training for the first time in early 2026. This transition has significant implications for major tech players. Sensing the change, Nvidia completed a $20 billion acquisition of Groq’s intellectual property in January 2026, integrating Groq’s high-speed Language Processing Unit technology into its upcoming Rubin GPU architecture, aiming to dominate the low-latency reasoning market.

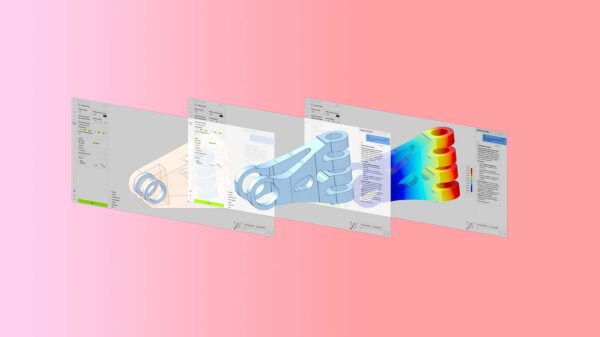

Meanwhile, Microsoft has positioned itself as a key contender in this evolving landscape, unveiling its Maia 200 chip on January 26, 2026, designed specifically for the iterative, search-heavy workloads of OpenAI’s o-series. By tailoring its hardware for reasoning tasks, Microsoft seeks to reduce dependency on Nvidia’s chips while providing cost-effective solutions for its Azure customers. In response, Meta is advancing its own Project Avocado, a flagship reasoning model set to compete against OpenAI’s leading systems, marking a potential shift in Meta’s approach to top-tier models.

For startups, the evolving landscape is creating new opportunities. Training state-of-the-art models remains capital-intensive, but specialized “Reasoning Wrappers” and custom PRMs are giving new companies a way in. Specialized hardware makers are benefiting as well: Cerebras Systems is seeing increased demand for its wafer-scale engines, which are optimized for real-time inference and can keep entire models on-chip, avoiding the memory bottlenecks of conventional designs.

The competitive focus has shifted from data size to the algorithmic efficiency of search architectures, leveling the playing field for research labs such as Mistral and DeepSeek, which have shown that state-of-the-art reasoning can be achieved with fewer parameters than the models built by larger tech companies. With efficiency as the new competitive advantage, research and development increasingly center on Monte Carlo Tree Search and advanced reinforcement learning methods.

The evolving focus on inference-time compute also raises questions of safety and environmental impact. AI safety researchers express concerns regarding the interpretability of models with hidden reasoning traces, which complicates the detection of potential “deceptive alignment.” Moreover, the increased computational demands per query could lead to significant environmental repercussions as inference becomes a daily, large-scale operation.

In summary, the shift to inference-time compute signifies a fundamental redefinition of artificial intelligence, moving from mere imitation to a new era marked by deliberative reasoning. This evolution opens up pathways to more capable and reliable AI systems that can verify their outputs. The industry is poised for significant advancements, with the potential for personal reasoning assistants and autonomous agents becoming standard in everyday applications. As 2026 progresses, companies will closely monitor how these reasoning capabilities are integrated into intelligent systems, permanently altering the landscape of technology.
