Deploying the NVIDIA DGX SuperPOD has transformed the AI development lifecycle at MediaTek. The system handles the company's large, continuous AI workloads for both inference and training. “Our AI factory, powered by DGX SuperPOD, processes approximately 60 billion tokens per month for inference and completes thousands of model-training iterations every month,” said David Ku, Co-COO and CFO at MediaTek.

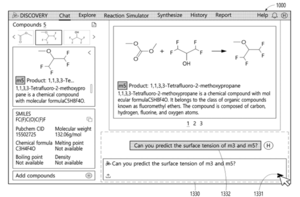

Inference with cutting-edge large language models (LLMs) requires the entire model to be loaded into GPU memory. Models with hundreds of billions of parameters often exceed the memory capacity of a single GPU, and sometimes of a single server, so they must be partitioned across multiple GPUs. The DGX SuperPOD, which combines tightly coupled DGX systems with high-performance NVIDIA networking, is designed to deliver the ultra-fast, coordinated GPU memory and compute power needed for training and inference on the largest AI workloads.
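As a rough illustration of why such models must be partitioned, the sketch below estimates how many GPUs are needed just to hold a model's weights. The figures are assumptions chosen for the example and do not come from MediaTek or NVIDIA: 2 bytes per parameter (16-bit weights), 80 GB of memory per GPU, 8 GPUs per server, and a 20% allowance for activations and cache. The function names are hypothetical.

```python
import math


def gpus_needed(num_params: float,
                bytes_per_param: int = 2,     # assumption: 16-bit weights
                gpu_mem_gb: float = 80.0,     # assumption: 80 GB per GPU
                overhead: float = 1.2) -> int:  # assumption: 20% extra for activations/cache
    """Estimate the minimum GPU count whose combined memory holds the model."""
    weights_gb = num_params * bytes_per_param / 1e9
    return math.ceil(weights_gb * overhead / gpu_mem_gb)


def servers_needed(num_gpus: int, gpus_per_server: int = 8) -> int:
    """Estimate the server count, assuming 8 GPUs per server."""
    return math.ceil(num_gpus / gpus_per_server)


# Two hypothetical model sizes: 70 billion and 405 billion parameters.
for params in (70e9, 405e9):
    gpus = gpus_needed(params)
    print(f"{params / 1e9:.0f}B parameters: {gpus} GPUs, {servers_needed(gpus)} server(s)")
```

Under these assumptions, the 70-billion-parameter model already spans several GPUs, and the 405-billion-parameter model no longer fits within a single eight-GPU server. Real deployments depend on precision, batch size, and context length, so the numbers are only indicative.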

According to Ku, “The DGX SuperPOD is indispensable for our inference workloads. It allows us to deploy and run massive models that wouldn’t fit on a single GPU or even a single server, ensuring we achieve the best performance and accuracy for our most demanding AI applications.” MediaTek uses these large models for core research and development and to serve a centralized, high-demand API. The company then distills smaller versions of the models for specific edge and mobile applications.

With the DGX platform, MediaTek has streamlined its product development pipeline by integrating AI agents into research and development workflows. One notable application is AI-assisted code completion, which has significantly reduced both programming time and error rates. In chip design, an AI agent built on domain-adapted LLMs helps engineers understand, analyze, and optimize designs by extracting information from design flowcharts and state diagrams. As a result, technical documentation that previously took weeks to produce can now be completed in days.

In addition, MediaTek uses NVIDIA NeMo™, a software suite for building, training, and deploying large language models, to fine-tune its models for both performance and domain-specific accuracy. The move reflects a broader trend in the tech industry, where companies increasingly rely on advanced AI systems to stay competitive and innovate quickly.

As AI applications continue to expand, the role of powerful computing solutions like the NVIDIA DGX SuperPOD is expected to grow even more critical. MediaTek’s successful integration of these technologies exemplifies how leading tech firms are adapting to the demands of modern AI workloads. The company’s strategy not only emphasizes efficiency but also positions it to capitalize on the evolving landscape of artificial intelligence, ensuring that it remains at the forefront of innovation in this rapidly changing sector.
