Feb 03, 2026 – The rapid adoption of generative AI is reshaping how businesses operate, and leaders are pushing to use it to raise productivity and efficiency. That surge creates a significant problem for security teams, because much of the AI activity inside organizations happens without adequate oversight from IT or security departments. As companies build AI into their workflows, security teams must both maintain visibility into how AI is used and mitigate the risks that come with it.

AI adoption in organizations falls into two main areas: securing AI production environments (the code, cloud services, applications, and data pipelines the organization builds) and governing employees' use of third-party AI tools such as ChatGPT, Claude, or Midjourney. The first, referred to as the AI “Workload,” covers safeguarding the applications, APIs, models, and data pipelines the organization develops. The second, known as the AI “Workforce,” concerns how employees use these tools to handle their tasks and corporate data.

FireTail, a company specializing in API security, has so far focused on securing AI workloads. Its API security expertise has allowed it to build a suite of capabilities for workload AI security. However, the company acknowledges that this covers only half of the problem; securing the fast-growing AI workforce is equally essential.

The emergence of “Shadow AI” (unapproved AI tools used by employees) complicates oversight. Employees often bypass formal approval processes to adopt tools that make their work easier, so applications end up in use without being vetted by IT. The problem is compounded because many employees reach AI tools directly through a web browser, where it is hard for security tooling to track activity comprehensively. Standard security controls may record that a user logged in, but they typically do not monitor what the user does afterwards, which raises the risk that sensitive company information is inadvertently shared with or uploaded to external models.

Given this landscape, outright banning AI tools is not a viable solution. Such restrictions can drive employees to use personal devices or unmanaged accounts, ultimately complicating security efforts as activities shift off corporate networks. Instead, companies must adopt a governance approach that allows for responsible AI usage while ensuring data protection. This strategy provides flexibility, enabling different teams to access AI tools in accordance with their unique needs and risks.

Three Pillars of Workforce AI Security

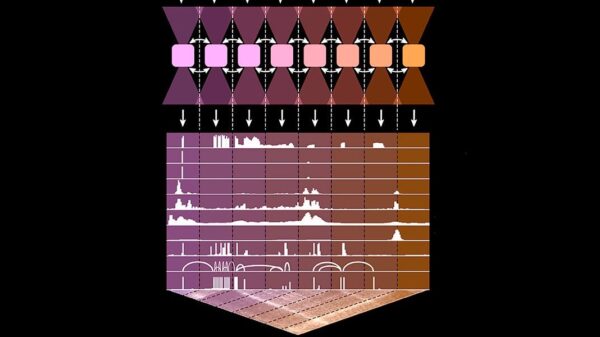

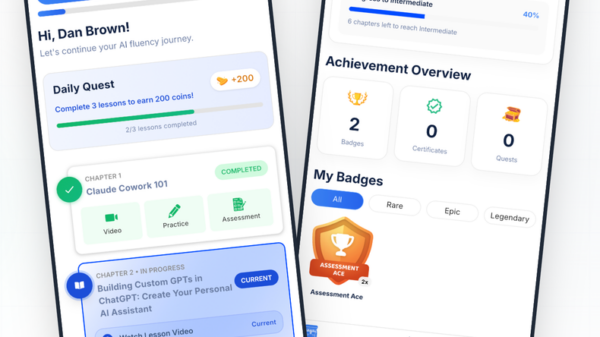

To effectively manage an AI-enabled workforce, organizations must develop three core capabilities: discovery, observability, and governance. Discovery involves identifying all AI services in use within the organization, including which employees are accessing these tools and the frequency of their usage. Observability goes beyond simple detection; it requires understanding the type of data shared with AI models and flagging potential policy violations in real time. Finally, governance entails the ability to enforce rules regarding tool access, allowing for tailored policies based on departmental requirements.
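The three capabilities can be illustrated with a minimal, hypothetical Python sketch. It is not FireTail's implementation or API: the `Event` record, the hostname-to-service table, the regex detectors, and the per-department policy format are all assumptions chosen for illustration. Discovery counts which users reach which AI services, observability scans prompt text for sensitive-data patterns, and governance combines the two into an allow, alert, or block decision.

```python
import re
from collections import Counter
from dataclasses import dataclass

# Illustrative hostname -> service catalogue (assumption); a real deployment
# would need a much larger, regularly updated list.
AI_SERVICES = {
    "chatgpt.com": "ChatGPT",
    "claude.ai": "Claude",
    "www.midjourney.com": "Midjourney",
}

# Hypothetical detectors for data that should not leave the organization.
SENSITIVE_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
}


@dataclass
class Event:
    """One observed AI interaction, e.g. captured by a browser extension (assumed)."""
    user: str
    department: str
    host: str
    prompt: str = ""


def discover(events):
    """Discovery: count how often each (user, AI service) pair appears."""
    return Counter(
        (e.user, AI_SERVICES[e.host]) for e in events if e.host in AI_SERVICES
    )


def observe(event):
    """Observability: names of sensitive-data patterns found in the prompt."""
    return sorted(
        name for name, rx in SENSITIVE_PATTERNS.items() if rx.search(event.prompt)
    )


def govern(event, policy):
    """Governance: return 'allow', 'alert', or 'block' for one interaction.

    `policy` maps a department to the set of AI services approved for it
    (a hypothetical format).
    """
    service = AI_SERVICES.get(event.host)
    if service is None:
        return "allow"  # not a known AI service, so out of scope here
    if service in policy.get(event.department, set()):
        return "allow"  # approved tool for this department
    if observe(event):
        return "block"  # Shadow AI plus sensitive data: stop the upload
    return "alert"  # Shadow AI without sensitive data: flag for review


if __name__ == "__main__":
    policy = {"engineering": {"Claude"}, "marketing": {"Midjourney"}}
    events = [
        Event("alice", "engineering", "claude.ai", "Summarize this design doc"),
        Event("bob", "marketing", "chatgpt.com", "Email jane@example.com the draft"),
        Event("bob", "marketing", "chatgpt.com", "Suggest a slogan"),
    ]
    print(discover(events))
    for e in events:
        print(e.user, e.host, govern(e, policy))
```

In this sketch an unapproved tool on its own only raises an alert, in keeping with a governance approach rather than an outright ban, while sensitive data sent to an unapproved tool is blocked. Production-grade detection would need far more than two regular expressions.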

In response to the growing demand for AI governance, FireTail has rolled out significant updates to its platform. These enhancements aim to provide a unified solution addressing both workload and workforce security challenges. Key features include improved visibility through integrations with Google Workspace and browser extensions, enabling comprehensive monitoring and policy enforcement across AI interactions.

FireTail’s new governance features emphasize control rather than restriction, allowing organizations to set nuanced policies. These include the ability to establish rules based on user roles, manage alerts for policy violations at scale, and implement automated guardrails to prevent sensitive information from being shared with unauthorized AI models. Additionally, the newly introduced AI Risk Dashboard centralizes workforce AI risks, allowing organizations to identify hotspots of Shadow AI usage, detect potential data leaks, and make informed decisions regarding which AI tools to permit or block.

By combining these capabilities, FireTail aims to give companies a comprehensive way to manage the complexities of AI adoption. Its full-spectrum approach pairs workload security with workforce governance, so that organizations can adopt AI with confidence while protecting critical data and assets.
