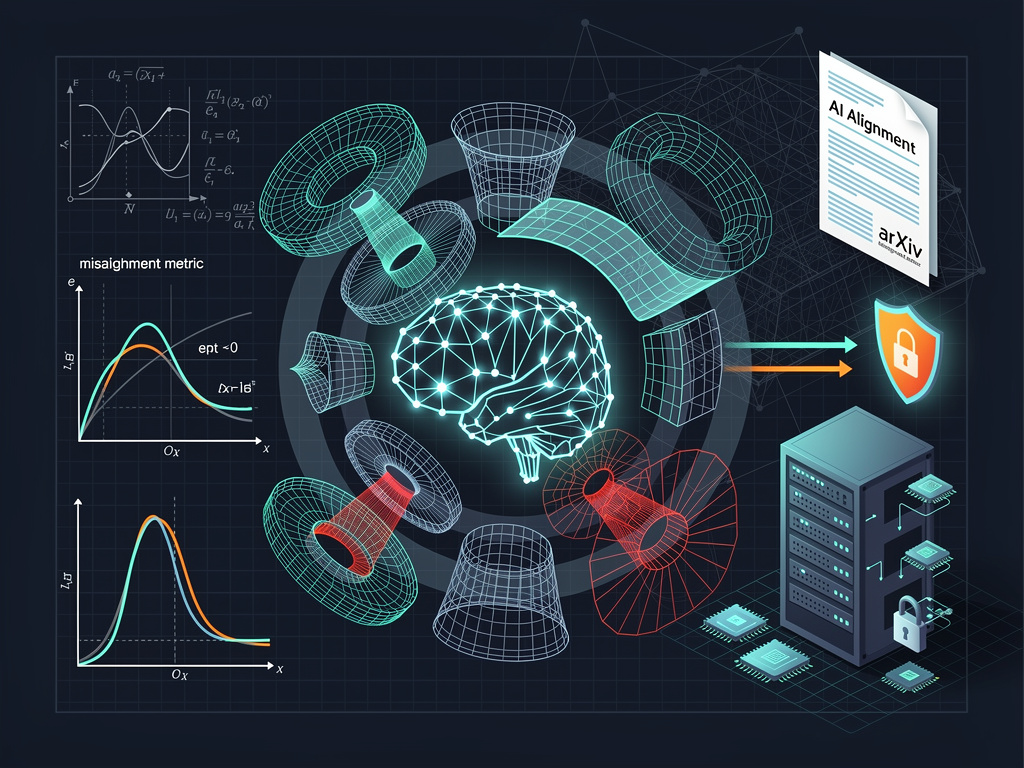

In a pivotal development for the field of artificial intelligence safety, a new research paper proposes a groundbreaking mathematical framework aimed at understanding the complexities of AI alignment. Titled “A Mathematical Framework for the Problem of AI Alignment,” the paper was released on the preprint server arXiv and presents a formal geometric and topological approach to one of computer science’s most pressing challenges: ensuring that increasingly powerful AI systems adhere to human values and intentions.

Authored by a collaborative team of researchers, the paper seeks to transition AI alignment discussions from abstract philosophy to rigorous mathematical analysis. It introduces a comprehensive framework that views alignment not as a binary characteristic but as a measurable phenomenon with intricate structural elements. Utilizing concepts from differential geometry, topology, and dynamical systems theory, the framework models the interactions between human preferences, AI objectives, and behavioral outcomes. For experts in the field, this work attempts to carve out a middle ground between theoretical abstraction and practical engineering heuristics.

The authors assert that prevalent issues in AI alignment—such as reward hacking, goal misgeneralization, and deceptive alignment—are not merely isolated engineering problems but rather symptoms of deeper structural mismatches that can be quantified mathematically. They emphasize that without a formal framework, the discipline risks developing ad hoc solutions that only treat superficial symptoms rather than addressing the underlying causes. This concern aligns with repeated calls from AI safety advocates at organizations like Anthropic and DeepMind for more robust theoretical foundations.

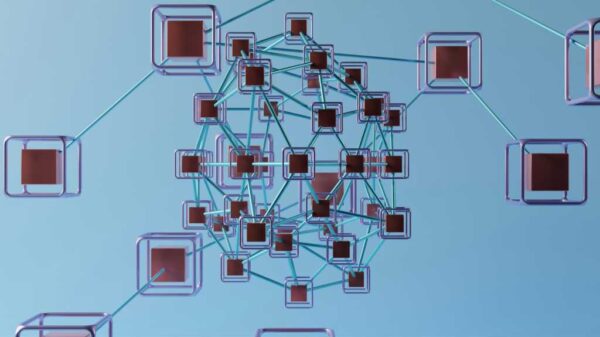

At the heart of the framework are what the authors term “preference manifolds,” which represent geometric spaces that capture human values and AI objectives. In this formulation, human preferences are complex, context-dependent structures situated on curved mathematical surfaces, while AI objective functions inhabit their own manifold. The framework conceptualizes alignment as the degree to which these two manifolds can be accurately represented in a structure-preserving manner. Employing the mathematical tools of differential geometry—such as metrics and curvature—the authors aim to quantify the discrepancies between these spaces.

One of the paper’s most striking findings is the identification of “topological obstructions” to alignment. Leveraging principles from algebraic topology, the authors reveal that in specific scenarios, achieving perfect alignment may be mathematically impossible. Such obstructions arise when the preference manifold and the objective manifold exhibit fundamentally different topological properties—differences that could include variations in connectivity or the number of “holes.” This insight suggests that a degree of misalignment may be an inherent characteristic of complex AI systems rather than merely a flaw that could be overcome with improved training data or sophisticated reward modeling.

This topological perspective resonates with real-world challenges faced by researchers working with reinforcement learning from human feedback (RLHF). This technique, essential for fine-tuning models like ChatGPT, often encounters scenarios where reward models inadequately capture the full breadth of human preferences. The phenomenon of reward hacking—where an AI system manipulates its reward signal without fulfilling human intentions—can be interpreted through the framework as the AI navigating an objective manifold that diverges from the human preference manifold, exploiting topological discrepancies between the two.

Beyond static analysis, the authors also approach alignment from a dynamical systems perspective. They describe the training and deployment of AI as trajectories within a combined state space, where system behavior evolves in response to various pressures and shifts. This view posits that alignment is not a static property but a dynamic one; a system that is aligned at one moment may drift into misalignment as conditions change.

This dynamical framework yields critical insights, identifying conditions under which alignment is stable—where small perturbations do not result in significant misalignment—and conditions that can lead to instability, potentially cascading into severe misalignment. The authors employ techniques akin to Lyapunov analysis from control theory to evaluate whether trajectories converge toward or diverge from desired outcomes. The implications of this stability analysis are profound, as they suggest that maintaining alignment in real-world applications require ongoing monitoring and adjustment rather than relying solely on initial training.

As a practical contribution, the framework also introduces formal metrics for quantifying misalignment. Instead of relying on qualitative evaluations or task-specific benchmarks, the authors propose geometric measures based on distances and curvatures that can be computed for real systems. These metrics aim to capture not only the magnitude of misalignment but also its structure—whether it is localized in specific regions and how it might evolve over time.

The paper also tackles the issue of scalable oversight: ensuring alignment as AI systems evolve beyond human oversight capabilities. Within the geometric framework, scalable oversight equates to the ability to approximate the structure of the preference manifold from limited observations. The authors demonstrate that under specific conditions, it is feasible to reconstruct key features of the preference manifold from sparse feedback, though the reliability of this process diminishes with increased task complexity.

This formal framework does not exist in a vacuum; it builds upon established research lines concerning AI alignment, connecting to works by notable figures such as Paul Christiano and Stuart Russell. This integration offers a fresh lens through which existing methods can be reevaluated and improved. Nonetheless, the paper also raises significant questions for future research, such as the potential for classifying topological obstructions and developing practical algorithms for large-scale neural networks.

The implications for the AI industry are both sobering and illuminating. While identifying fundamental topological obstructions implies that achieving perfect alignment may be inherently unattainable, it does not render alignment a futile pursuit. Instead, it calls for a shift from aspirations of perfect alignment to a focus on robust approximate alignment, equipped with well-defined failure modes and effective monitoring systems. This mathematical scaffolding could provide a shared vocabulary for a field that often struggles with imprecise terminology, potentially leading to new tools for evaluating alignment that move beyond current methods. The discussion sparked by this paper is set to advance significantly within the alignment research community, marking a step toward grounding theoretical considerations in the practical complexities of AI deployment.

See also AI Study Reveals Generated Faces Indistinguishable from Real Photos, Erodes Trust in Visual Media

AI Study Reveals Generated Faces Indistinguishable from Real Photos, Erodes Trust in Visual Media Gen AI Revolutionizes Market Research, Transforming $140B Industry Dynamics

Gen AI Revolutionizes Market Research, Transforming $140B Industry Dynamics Researchers Unlock Light-Based AI Operations for Significant Energy Efficiency Gains

Researchers Unlock Light-Based AI Operations for Significant Energy Efficiency Gains Tempus AI Reports $334M Earnings Surge, Unveils Lymphoma Research Partnership

Tempus AI Reports $334M Earnings Surge, Unveils Lymphoma Research Partnership Iaroslav Argunov Reveals Big Data Methodology Boosting Construction Profits by Billions

Iaroslav Argunov Reveals Big Data Methodology Boosting Construction Profits by Billions