Zoë Hitzig, a former researcher at OpenAI, has publicly criticized the company in a New York Times op-ed, drawing attention to ethical concerns surrounding the impending introduction of advertisements to ChatGPT. Hitzig’s resignation comes amid a wave of high-profile departures from AI firms, highlighting growing unease within the industry about the implications of monetizing AI technologies.

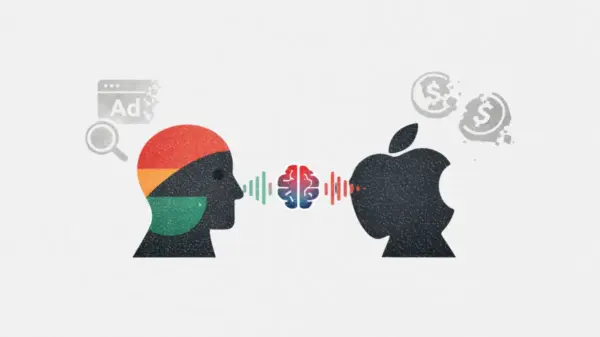

Hitzig emphasizes a crucial distinction: it is not advertising itself that poses a threat, but rather the potential misuse of sensitive data that users have shared with ChatGPT. Users disclose intimate details, ranging from medical fears to personal beliefs, under the assumption that their conversations are private. “For several years, ChatGPT users have generated an archive of human candor that has no precedent, in part because people believed they were talking to something that had no ulterior agenda,” she stated. Ads targeted using this unguarded data could enable manipulation that users are ill-equipped to recognize or counteract.

OpenAI has attempted to assuage these concerns. In a blog post earlier this year, the company stated it would create a firewall between user conversations and advertisements served by the chatbot, asserting, “We keep your conversations with ChatGPT private from advertisers, and we never sell your data to advertisers.” However, Hitzig expressed skepticism about OpenAI’s long-term commitment to this stance, arguing that the company is “building an economic engine that creates strong incentives to override its own rules.”

This apprehension is compounded by OpenAI’s previous statements regarding user engagement. While the company claims it does not optimize ChatGPT to maximize user interaction—an important metric for a firm looking to increase ad revenue—Hitzig pointed out that such statements are not binding. Last year, OpenAI faced criticism for creating a model that excessively flattered users, a behavior some experts suggest may have contributed to “chatbot psychosis.” This raises questions about whether the company genuinely prioritizes user welfare or is more focused on keeping users engaged for profit.

Hitzig’s critique suggests a troubling parallel to the practices of social media giants, where user privacy promises are often abandoned in favor of monetization strategies. She urged OpenAI to implement a framework that enforces user protections, such as establishing independent oversight or placing data management in the hands of a trust with a “legal duty to act in users’ interests.” While these proposals could enhance accountability, the track record of similar initiatives, such as the Meta Oversight Board, raises doubts about their effectiveness in practice.

Despite the validity of Hitzig’s concerns, she may face significant challenges in mobilizing public awareness about data privacy. Over two decades of social media use have led to a pervasive sense of “privacy nihilism,” where users are disillusioned and apathetic about their data rights. A recent survey by Forrester revealed that 83% of respondents would continue using the free version of ChatGPT even if ads were introduced, indicating a lack of motivation to resist changes that might compromise their privacy.

In a related move, Anthropic, another AI company, used a high-profile Super Bowl advertisement to criticize OpenAI’s decision to introduce ads, positioning itself as an ethical alternative. However, public reception was tepid, with the ad ranking in the bottom 3% of likability according to AdWeek. This highlights a disconnect between corporate messaging and consumer sentiment, as many users appear more confused than concerned.

Hitzig’s warnings about the implications of data exploitation in AI are well-founded and reflect a broader dilemma facing the tech industry as it grapples with the balance between monetization and ethical responsibility. As users continue to engage with platforms like ChatGPT, the challenge lies in fostering awareness and prompting action regarding data privacy, a task made increasingly difficult by years of algorithm-driven user behavior.
