Google is integrating its AI image generator, internally dubbed Nano Banana, directly into search in the Google app. A recent app build reveals a new “AI Mode” workflow that lets users generate images within the main search interface, similar to tests currently under way in Chrome Canary for Android. This seemingly minor interface change carries significant implications: image generation is poised to become a core function of Google’s most widely used product.

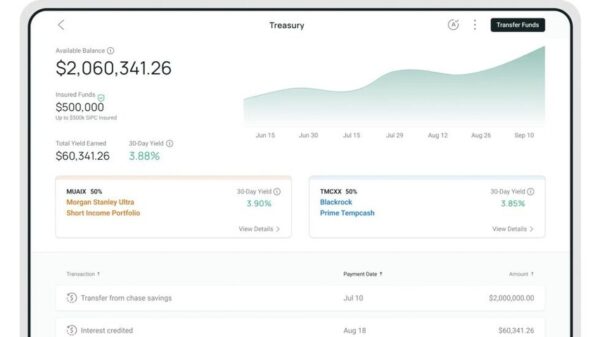

Users can initiate the feature by opening the Google app, tapping the search bar, and selecting a plus icon located on the left. This enables them to choose “Create images,” input a prompt, and view the generated response inline, mirroring the behavior expected from a sophisticated AI assistant. The functionality aligns with what testers are experiencing in Chrome Canary’s address bar, but now appears in the primary app that most Android users rely on for their searches.

Details of this capability come from app teardowns, including references found in version 16.47.49 of the Google app. The feature has not rolled out broadly yet and appears to be gated by server-side flags, a method Google commonly uses to test new functionality.

Why integrating image generation into search matters

Putting image creation directly in the search bar reduces friction. Rather than navigating to a web dashboard or sifting through various Lens menus, users can generate images as part of their search queries. Reducing friction in this way tends to increase usage, particularly in an app that is pre-installed on the majority of Android devices, which collectively number over 3 billion worldwide, according to Google’s platform updates.

The move is also a competitive response to similar features that Microsoft has embedded in Bing and its Edge browser, as well as Apple’s inclusion of Image Playground in its system applications on macOS. By embedding Nano Banana in the search bar, Google signals its intention to place its AI capabilities at the very moment user intent is expressed: as soon as a search is initiated.

Search has traditionally focused on locating existing images; now it is evolving into a platform for creating them. Placing the “Create images” option next to the text box encourages users to think of search as a generative space. This could lead to more integrated workflows in which users draft concepts, refine them with follow-up prompts, and incorporate real-world references via Lens, all within a single application.

For casual creators, this feature lowers the barrier to experimentation. For marketers, students, and small businesses, it turns the Google app into a rapid storyboard tool. Even if only a small fraction of searches turn into image prompts, the potential impact is substantial given the billions of daily queries processed by Google’s search engine.

Google has emphasized safety around its generative imagery through content filters and automated safety classifiers. It has also highlighted SynthID, a watermarking and metadata solution developed by Google DeepMind that is designed to label AI-generated images. While implementation specifics may vary by product, outputs from Nano Banana are expected to carry such attributions, improving transparency around synthetic media. Users can also expect standard disclosure reminders and usage guidelines, as with other AI features, while data from prompts and responses may contribute to service improvements in line with Google’s privacy policies. Feature restrictions may apply based on region, account type, or user age.

The integration is currently being tested through the AI Mode feature, which points to a gradual rollout. Historically, Google has introduced new features in stages via server flags and Play Store updates, expanding availability after validating performance and safety. No official timeline has been announced for a broader release, and the features may differ based on device type, language, and account eligibility.

So far, a clear trend of convergence is evident: Nano Banana has been spotted in the Chrome address bar and in place of Lens entry points, and is now set to appear in the Google app’s search bar. This strategy underscores Google’s commitment to making generative image creation a native action at the very beginning of the search process.

Looking ahead, users should watch for deeper integrations with Gemini, such as prompt histories, multi-round refinements, or direct exports to Google Docs, Slides, or Messages. Google frequently links new capabilities across its platforms once the central experience is established. Should image creation become a standard option in the search bar, quick-sharing functions, remixing capabilities, and Lens-based reference tools could soon be just a tap away.

In summary, integrating Nano Banana into the search bar is more than a convenience; it reflects Google’s recognition of generative creation as a natural extension of search behavior. It lays the groundwork for a more visual, conversational, and immediate form of inquiry, one in which the answers may be entirely original.
