The recent REF-AI report, co-authored by Jisc and the Centre for Higher Education Transformations (CHET), offers critical insights into how generative AI is shaping research practices within UK universities. Funded by Research England, the report underscores that generative AI is no longer a theoretical concept but a tangible part of daily research activities and assessment processes. This shift is already influencing institutional strategies, with some universities adopting cautious, exploratory approaches while others embrace more innovative methods.

Liam Earney, Managing Director of Higher Education and Research at Jisc, notes that the report highlights a significant gap between emerging practices involving generative AI and existing governance structures. With new technologies often outpacing policy, institutions find themselves interpreting best practices in isolation. Current guidance from UK Research and Innovation (UKRI) emphasizes principles of honesty, transparency, and confidentiality in the context of generative AI, but comparable clarity in the Research Excellence Framework (REF) remains elusive.

The report’s first recommendation stresses the importance of integrity and transparency in the use of generative AI. It calls for universities to formalize policies on generative AI applications, particularly in relation to REF submissions. Such policies should clearly define acceptable uses and mandate disclosure of AI’s role in the research process. This approach aims to foster trust and establish a fair assessment framework, addressing the current ambiguity surrounding generative AI’s involvement.

Insights from the report indicate a diverse landscape of practices across UK universities. Some institutions employ generative AI to gather evidence of research impact, craft narratives, and even evaluate outputs. Others are trialing customized tools to enhance internal workflows. However, this varied adoption often leads to confusion and a lack of coherence, resulting in a “patchwork” of practices that can obscure the actual contribution of generative AI to research efforts.

Survey data reveals considerable skepticism among academics about applying generative AI within the REF, with seventy percent or more of respondents expressing strong opposition in some areas. In contrast, conversations with senior university leaders reflect a growing acknowledgment that generative AI is a permanent fixture that demands careful integration into institutional practices. Pro Vice Chancellors emphasize the sector’s responsibility to navigate this technology effectively.

The report suggests that without coordinated efforts, the existing digital divide among institutions may widen, as only those with adequate resources can harness generative AI’s full potential. The uneven landscape of digital literacy and confidence in generative AI underscores the need for strategic support from organizations like Jisc, which has already established AI literacy programs for teaching staff. This initiative needs to expand to encompass research personnel as well.

Addressing Research Integrity

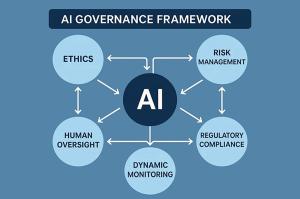

To cultivate a responsible research environment, the report advocates for a foundational focus on research integrity. It proposes that universities define boundaries for generative AI utilization, require disclosure of its involvement in REF-related activities, and integrate these considerations into broader ethical frameworks. Notably, there is a disconnect between current discussions on generative AI and established responsible research assessment initiatives like DORA and CoARA, which must be bridged for effective governance.

The recommendations outlined in the report do not stifle innovation; rather, they pave the way for it to occur responsibly. Institutions are actively seeking guidance on creating secure, trustworthy environments for generative AI, aiming to balance efficiency gains with the necessary academic oversight. There is a collective effort to avoid repeating the mistakes of earlier, hasty digital adoption, which prioritized local solutions over shared standards.

As the next REF approaches amid significant financial pressures and ongoing technological evolution, generative AI presents an opportunity to alleviate workloads and enhance consistency in research assessment. However, this potential can only be realized through transparent practices and a sector-wide commitment to integrity. The time has come for universities to establish clear policies, for assessment panels to receive explicit guidance, and for the sector to develop shared infrastructures that address existing disparities.

See also New Study Reveals Poetic Prompts Bypass AI Safety Systems in 62% of Tests

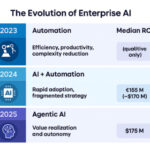

New Study Reveals Poetic Prompts Bypass AI Safety Systems in 62% of Tests Agentic AI Redefines Enterprise IT with 45% of Firms Achieving Autonomy by 2030

Agentic AI Redefines Enterprise IT with 45% of Firms Achieving Autonomy by 2030 Professors in ASEAN Call for AI Guidelines as ChatGPT Use Surges in Higher Education

Professors in ASEAN Call for AI Guidelines as ChatGPT Use Surges in Higher Education AI Agents Use GPT-5 and Claude to Simulate $4.6M in DeFi Exploits, Warns Anthropic Study

AI Agents Use GPT-5 and Claude to Simulate $4.6M in DeFi Exploits, Warns Anthropic Study Nvidia Launches Open-Source AI Models for Speech and Self-Driving Systems at NeurIPS 2025

Nvidia Launches Open-Source AI Models for Speech and Self-Driving Systems at NeurIPS 2025