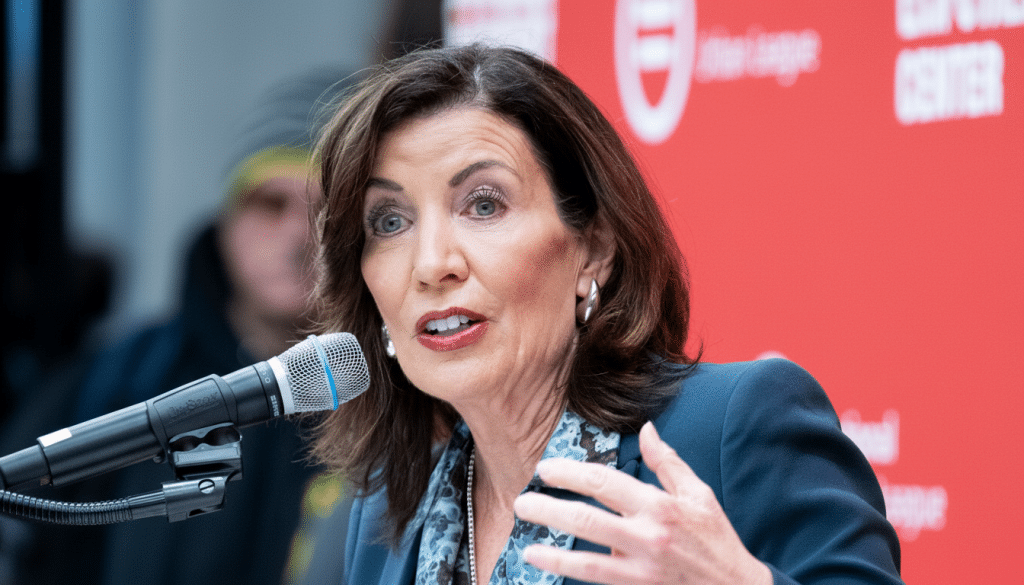

New York has emerged as a frontrunner in the regulation of artificial intelligence, with Governor Kathy Hochul signing the RAISE Act. This makes New York only the second state in the U.S. to implement comprehensive AI safety regulations. The law focuses on enhancing transparency, mandating incident reporting, and establishing independent oversight, all designed to mitigate risks associated with advanced AI models while preserving innovation in critical sectors such as finance, media, and healthcare technology.

The measure passed after intense negotiations and heavy lobbying by major tech firms. Lawmakers were eager to advance the legislation, while the Hochul administration sought to narrow its scope. The two sides ultimately reached an agreement: the RAISE Act takes effect as signed, with room for adjustments through future legislation and regulation.

Under the new law, large AI developers (those producing or distributing powerful general-purpose systems or high-risk applications) must submit safety plans and describe the testing methods they use to evaluate model behavior. They must also report any AI safety incident to the state within 72 hours, a timeline that mirrors established practice in cybersecurity incident response.

To oversee this framework, New York will create a specialized office within the Department of Financial Services (DFS). DFS is expected to draw on its experience regulating digital assets to make AI compliance robust and actionable. Companies will need to establish repeatable processes, maintain defensible documentation, and show that they can identify, assess, and mitigate harms.

The stakes for non-compliance are significant. Companies that fail to file the required reports or misrepresent their safety measures could face fines of up to $1 million for a first violation and up to $3 million for subsequent violations. The penalties are intended to deter lapses in governance and neglect of red-teaming obligations.

The law aligns closely with California’s recently adopted transparency-first policy, reflecting a growing coastal consensus on foundational AI safety standards. Both states emphasize disclosure, incident reporting, and accountability through government oversight. Although there is still no comprehensive federal AI law, these state-level initiatives track international efforts such as the European Union’s AI Act and the OECD AI Principles, which stress governance practices and performance assessments.

Because the New York and California rules are closely aligned, developers may be able to satisfy both with a single compliance program, reducing the risk of a fragmented regulatory environment. That consistency matters for controls that span the model lifecycle, from pre-deployment assessments to monitoring for misuse and cascading failures.

Industry reactions have been mixed. Major AI labs such as OpenAI and Anthropic have expressed cautious support for New York’s transparency requirements, but they continue to urge Congress to set federal standards that would provide consistency across jurisdictions. Some policy leaders at Anthropic see state action as a stepping stone toward the comprehensive federal regulation that many in the industry want.

The law also faces opposition. Political groups backed by influential investors and AI executives object to a patchwork of state regulations, arguing that it could entrench established players and make it harder for newcomers to compete. Despite this resistance, the bill’s supporters prevailed, signaling a shift from voluntary commitments toward concrete regulatory frameworks.

Looking ahead, DFS is tasked with translating the RAISE Act into actionable operational requirements typical of regulated financial and cybersecurity environments. This will involve establishing clear accountability, documented risk assessments, regular adversarial testing, and a taxonomy for incident reporting.

Organizations that already align their practices with frameworks such as the NIST AI Risk Management Framework will start with an advantage. Those practices should also extend to potential model misuse, data provenance, and the safety of emergent behaviors.

For the largest companies, the fines may be manageable, but they are substantial enough to push mid-sized firms to prioritize governance. Startups will likely feel immediate effects only if they meet the law’s criteria for “large developer” status. Even so, many companies are likely to adopt similar controls to win enterprise customers, who increasingly demand compliance with recognized standards.

The White House’s directive instructing federal agencies to counter state AI regulations sets the stage for legal conflicts, particularly over the Commerce Clause and federal preemption. As the RAISE Act takes effect, further lobbying and possible lawsuits could draw in national trade groups and civil society organizations.

In the coming months, DFS will issue guidance clarifying what counts as a reportable AI safety incident and will seek feedback from stakeholders, including labs and researchers. Lawmakers have signaled openness to modest adjustments, but the Act’s core structure, built on transparency and oversight, appears secure.

Companies operating in New York or selling into the state should establish AI safety committees, determine whether they fall within the law’s scope, build ongoing incident-reporting processes, and align their testing with established public frameworks. Ultimately, the RAISE Act makes AI safety a board-level issue, with New York and California setting a precedent for durable, auditable AI governance while federal regulation remains uncertain.
