A recent intrusion into a compromised Amazon Web Services (AWS) cloud environment has raised alarms about the growing use of artificial intelligence (AI) in cyberattacks. In the incident, observed by the Sysdig Threat Research Team on November 28, an attacker used AI to escalate privileges rapidly, gaining administrative access in under ten minutes.

According to Sysdig’s threat research director, Michael Clark, and researcher Alessandro Brucato, the attack stood out not only for its speed but also for multiple indicators that large language models (LLMs) were used to automate several phases of the breach, including reconnaissance, privilege escalation, lateral movement, and even the writing of malicious code. The attacker also engaged in LLMjacking, a tactic in which a compromised cloud account is used to access cloud-hosted LLMs.

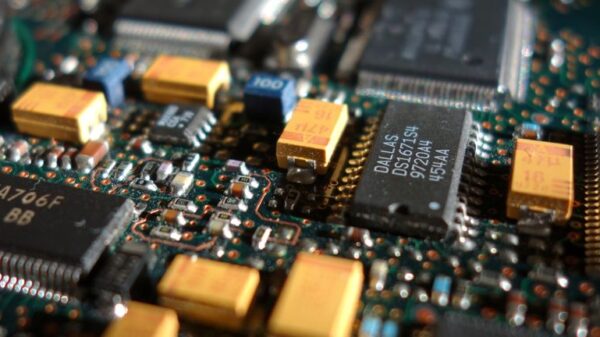

The intruder initially gained entry using valid test credentials stolen from publicly accessible Amazon S3 buckets. The credentials belonged to an AWS Identity and Access Management (IAM) user with extensive permissions on AWS Lambda and limited permissions on Amazon Bedrock. The compromised bucket also held Retrieval-Augmented Generation (RAG) data for AI models, which proved useful to the attacker.
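Credentials left in bucket objects are often discoverable with simple pattern matching, which is one reason publicly readable buckets are so risky. The Python sketch below, assuming the object contents have already been downloaded as text, flags strings shaped like AWS access key IDs (20 characters starting with `AKIA` for long-term keys or `ASIA` for temporary ones). The function names and sample data are hypothetical, and the pattern does not detect secret access keys, so treat it as a starting point rather than a complete secret scanner.

```python
import re

# AWS access key IDs: a 4-letter prefix plus 16 uppercase letters or digits.
# "AKIA" marks long-term IAM user keys; "ASIA" marks temporary STS credentials.
ACCESS_KEY_ID_RE = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> list:
    """Return every substring of `text` that looks like an AWS access key ID."""
    return ACCESS_KEY_ID_RE.findall(text)


def scan_objects(objects: dict) -> dict:
    """Map object keys to candidate access key IDs found in their contents.

    `objects` is a hypothetical stand-in for bucket contents that were already
    downloaded (object key -> decoded text); fetching them is out of scope.
    """
    hits = {}
    for key, body in objects.items():
        found = find_access_key_ids(body)
        if found:
            hits[key] = found
    return hits


if __name__ == "__main__":
    sample = {
        # AWS's published documentation example key ID, not a real credential.
        "config/test.env": "AWS_ACCESS_KEY_ID=AKIAIOSFODNN7EXAMPLE\nREGION=us-east-1",
        "rag/docs.txt": "No credentials here.",
    }
    print(scan_objects(sample))
```

Running the script prints `{'config/test.env': ['AKIAIOSFODNN7EXAMPLE']}`.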

In its analysis, Sysdig reported that the attacker compromised 19 distinct AWS principals and abused both Bedrock models and GPU compute resources. The attacker’s code included comments written in Serbian, along with fabricated AWS account IDs and references to nonexistent GitHub repositories; the latter two are typical LLM hallucinations and point to an AI-assisted operation.

After failing to gain administrative access with common usernames such as “sysadmin” and “netadmin,” the attacker escalated privileges through Lambda function code injection. Because a Lambda function’s code runs with the permissions of the function’s execution role, the compromised user’s ability to modify Lambda functions let the attacker run code with broader rights than the user held directly and create an admin account. With that access, they extracted sensitive information, including secrets from AWS Secrets Manager, parameters from AWS Systems Manager Parameter Store, and CloudWatch logs.
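Defenders can look for the precondition described here: an identity that is allowed to change Lambda function code or configuration. The Python sketch below scans one IAM policy document (already parsed from JSON into a dict) for Allow statements granting such actions, honoring IAM's `*` and `?` wildcards. The action list and function names are illustrative assumptions; a real review would also check each function's execution role, resource scoping, conditions, and permission boundaries.

```python
from fnmatch import fnmatchcase

# Lambda actions that change which code a function runs or how it runs.
# Illustrative, not exhaustive.
RISKY_LAMBDA_ACTIONS = (
    "lambda:UpdateFunctionCode",
    "lambda:UpdateFunctionConfiguration",
    "lambda:CreateFunction",
)


def _as_list(value):
    # IAM accepts either a single string or a list of strings for "Action".
    return [value] if isinstance(value, str) else list(value)


def risky_lambda_grants(policy: dict) -> set:
    """Return the risky Lambda actions matched by any Allow statement in `policy`.

    Deny statements, NotAction, resource scoping and conditions are ignored,
    so the result over-approximates what the identity can actually do.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    granted = set()
    for stmt in statements:
        if stmt.get("Effect") != "Allow" or "Action" not in stmt:
            continue
        for pattern in _as_list(stmt["Action"]):
            for action in RISKY_LAMBDA_ACTIONS:
                # IAM action matching is case-insensitive and supports * and ? wildcards.
                if fnmatchcase(action.lower(), pattern.lower()):
                    granted.add(action)
    return granted


if __name__ == "__main__":
    example_policy = {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": "lambda:Update*", "Resource": "*"},
            {"Effect": "Allow", "Action": ["s3:GetObject"], "Resource": "*"},
        ],
    }
    print(sorted(risky_lambda_grants(example_policy)))
```

For the example policy, the script prints `['lambda:UpdateFunctionCode', 'lambda:UpdateFunctionConfiguration']`. Any hit is a lead for review rather than proof of an escalation path.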

Technical Insights

As the attacker progressed, they collected account IDs and attempted to assume OrganizationAccountAccessRole across AWS environments. AWS Organizations creates this role by default in the member accounts it provisions, and it grants administrator access from the management account. Notably, the targeted IDs included accounts that did not belong to the victim, behavior consistent with AI hallucination and further evidence of LLM assistance. In total, the attacker accessed multiple IAM roles and users and extracted a wide range of sensitive data.
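Attempts to assume a role in accounts outside the organization are cheap to detect once you know the real account list. The Python sketch below reads simplified CloudTrail records (only the `eventName`, `eventTime`, and `requestParameters.roleArn` fields) and flags `AssumeRole` calls on OrganizationAccountAccessRole that target unknown account IDs. The function names and sample records are hypothetical; in practice the organization's account IDs would come from your own inventory.

```python
def parse_role_arn(arn: str) -> tuple:
    """Split 'arn:<partition>:iam::<account>:role/<path/><name>' into (account_id, role_name)."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[2] != "iam" or not parts[5].startswith("role/"):
        raise ValueError(f"not an IAM role ARN: {arn}")
    # The role name is the last path segment after "role/".
    return parts[4], parts[5].rsplit("/", 1)[-1]


def out_of_org_assume_role_attempts(events, org_account_ids,
                                    role_name="OrganizationAccountAccessRole"):
    """List (eventTime, account_id) for AssumeRole calls on `role_name`
    that target accounts not in `org_account_ids`."""
    flagged = []
    for event in events:
        if event.get("eventName") != "AssumeRole":
            continue
        # requestParameters can be missing or null, e.g. on some failed calls.
        params = event.get("requestParameters") or {}
        arn = params.get("roleArn")
        if not arn:
            continue
        try:
            account_id, name = parse_role_arn(arn)
        except ValueError:
            continue
        if name == role_name and account_id not in org_account_ids:
            flagged.append((event.get("eventTime"), account_id))
    return flagged


if __name__ == "__main__":
    # Hypothetical sample records and account IDs.
    sample_events = [
        {"eventName": "AssumeRole", "eventTime": "2024-01-01T00:00:00Z",
         "requestParameters": {"roleArn": "arn:aws:iam::111111111111:role/OrganizationAccountAccessRole"}},
        {"eventName": "AssumeRole", "eventTime": "2024-01-01T00:01:00Z",
         "requestParameters": {"roleArn": "arn:aws:iam::999999999999:role/OrganizationAccountAccessRole"}},
    ]
    print(out_of_org_assume_role_attempts(sample_events, {"111111111111", "222222222222"}))
```

Here only the attempt against `999999999999` is reported, since that ID is absent from the known account set.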

Using the compromised access to Amazon Bedrock, the attacker invoked several models, including Claude and Llama, an unusual usage pattern for the account. Sysdig emphasized that invocations of Bedrock models the account does not normally use are a notable warning sign. Organizations are encouraged to use Service Control Policies (SCPs) to restrict model invocation to the models they actually need.
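As a rough illustration of the SCP recommendation, the Python sketch below builds a policy document that denies Bedrock inference actions on every resource except an allowlist of foundation-model ARNs. The function name, the two-action list, and the example ARN are assumptions for illustration. The statement relies on the `NotResource` element, so confirm in the current AWS Organizations documentation that your SCPs accept it, and test any such policy outside production before attaching it.

```python
import json

# Bedrock inference actions to restrict. Illustrative; check the Bedrock
# service authorization reference for the complete, current list.
INVOKE_ACTIONS = ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"]


def bedrock_allowlist_scp(allowed_model_arns: list) -> dict:
    """Build an SCP-style document denying model invocation except on allowed ARNs."""
    if not allowed_model_arns:
        raise ValueError("provide at least one allowed model ARN")
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnapprovedBedrockModels",
                "Effect": "Deny",
                "Action": INVOKE_ACTIONS,
                # The deny applies to every resource except the approved models.
                "NotResource": list(allowed_model_arns),
            }
        ],
    }


if __name__ == "__main__":
    # Hypothetical allowlist with a single foundation model.
    approved = ["arn:aws:bedrock:us-east-1::foundation-model/anthropic.claude-3-haiku-20240307-v1:0"]
    print(json.dumps(bedrock_allowlist_scp(approved), indent=2))
```

An explicit deny is used because SCPs only limit what identities in member accounts can do; they never grant permissions on their own.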

After exploring Bedrock, the intruder shifted focus to Amazon Elastic Compute Cloud (EC2), querying for machine images suited to deep learning workloads. They stored a script in the victim’s S3 bucket that appeared to be designed for machine learning training but referenced a nonexistent GitHub repository, again suggesting LLM-generated code.

The researchers have not yet determined the attacker’s ultimate objective, whether training models or reselling compute access. However, the script’s design raised concerns about a potential backdoor: a publicly accessible JupyterLab server that did not require AWS credentials. The instance was terminated after five minutes for reasons that remain unclear.

The incident underscores cybercriminals’ growing reliance on AI to streamline their operations and raises concerns about automated attacks at scale. To counter such threats, organizations are advised to strengthen identity security and access management. Key recommendations include applying the principle of least privilege to all IAM users and roles, restricting sensitive permissions, and ensuring that S3 buckets containing sensitive data are not publicly accessible.
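Checking whether a bucket is publicly exposed can start with two things: its S3 Block Public Access settings and any bucket policy statement that allows everyone. The Python sketch below inspects both, assuming you already have the bucket's `PublicAccessBlockConfiguration` and policy as parsed dicts. The helper names are hypothetical, and the policy check ignores conditions, so a flagged statement still needs human review.

```python
# The four settings of an S3 PublicAccessBlockConfiguration.
REQUIRED_FLAGS = ("BlockPublicAcls", "IgnorePublicAcls", "BlockPublicPolicy", "RestrictPublicBuckets")


def public_access_gaps(config):
    """Return the Block Public Access settings that are missing or not True."""
    if config is None:
        # No configuration at all: every setting is a gap.
        return list(REQUIRED_FLAGS)
    return [flag for flag in REQUIRED_FLAGS if config.get(flag) is not True]


def bucket_policy_allows_everyone(policy):
    """True if any Allow statement names the anonymous principal "*".

    Conditions are ignored, so this can flag statements that are in fact scoped.
    """
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        # Both "*" and {"AWS": "*"} mean "everyone".
        if principal == "*":
            return True
        if isinstance(principal, dict):
            aws = principal.get("AWS")
            values = [aws] if isinstance(aws, str) else (aws or [])
            if "*" in values:
                return True
    return False


if __name__ == "__main__":
    # Hypothetical settings and policy for a single bucket.
    settings = {"BlockPublicAcls": True, "IgnorePublicAcls": True,
                "BlockPublicPolicy": False, "RestrictPublicBuckets": True}
    policy = {"Statement": [{"Effect": "Allow", "Principal": "*",
                             "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-bucket/*"}]}
    print(public_access_gaps(settings), bucket_policy_allows_everyone(policy))
```

For this hypothetical bucket, the script prints `['BlockPublicPolicy'] True`: one protection is disabled and the policy grants public read access.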

Enabling model invocation logging for Amazon Bedrock can also help detect unauthorized usage. As threats evolve, continuous vigilance and proactive defenses will be essential to protecting cloud infrastructure. We reached out to Amazon for comment but received no response by publication time. Updates will be provided as more information becomes available.
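Once invocation logs are flowing, a simple baseline comparison surfaces the warning sign Sysdig describes: calls to models the account does not normally use. The Python sketch below counts invocations per model and caller for any model outside an expected set. It assumes each log record has been parsed into a dict with a `modelId` field and an `identity.arn` field; these field names are modeled loosely on Bedrock invocation logs and should be checked against your own records. The model IDs in the test are examples only.

```python
from collections import Counter


def unexpected_model_use(records, expected_models):
    """Count invocations per (model ID, caller ARN) for models outside `expected_models`."""
    counts = Counter()
    for record in records:
        model_id = record.get("modelId")
        if model_id and model_id not in expected_models:
            # Attribute the call to the invoking identity when the record includes one.
            caller = (record.get("identity") or {}).get("arn", "unknown")
            counts[(model_id, caller)] += 1
    return counts
```

A sudden burst of calls to a model that is absent from the baseline, especially from a test or service identity, is worth investigating even when the volume is small.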
