Most large companies are grappling with a lack of visibility and control over the artificial intelligence systems operating within their networks, according to findings from the 2026 CISO AI Risk Report by Cybersecurity Insiders. The report, which surveyed 235 Chief Information Security Officers (CISOs), Chief Information Officers (CIOs), and senior security leaders across the United States and the United Kingdom, reveals a troubling trend: AI tools are frequently deployed without appropriate approval.

Specifically, the report indicates that 75% of organizations have discovered unapproved “Shadow AI” tools running within their systems, many of which have access to sensitive data. In addition, 71% of CISOs acknowledged that AI systems have access to core business systems, yet only 16% effectively govern that access. This gap between access and governance raises serious concerns about data security within organizations.

The survey highlights a significant visibility gap, with 92% of organizations lacking full oversight of their AI identities. Furthermore, 95% of respondents expressed doubt about their ability to detect malicious activity perpetrated by an AI agent, and only 5% felt confident in their capacity to contain a compromised AI system, pointing to a pervasive sense of vulnerability among security leaders.

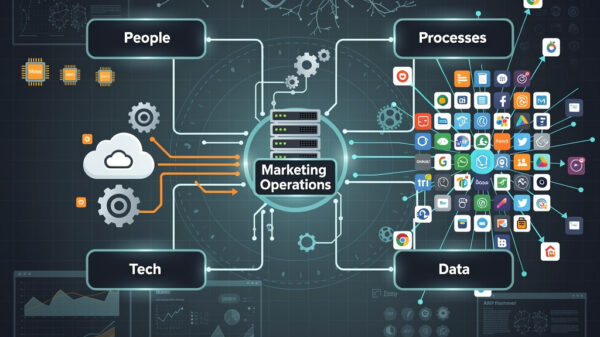

Security leaders identified the rapid, decentralized adoption of AI tools, such as AI-assisted copilots, as a key challenge. These systems often operate autonomously, which makes their activity hard to track with traditional security measures designed for human users. The report notes that 86% of leaders do not enforce access policies specifically tailored for AI, while just 25% use monitoring controls designed to oversee AI systems.

This lack of governance and oversight over AI presents new risks for organizations, particularly as AI technologies become increasingly integrated into business operations. The findings suggest that most companies are ill-prepared to manage the implications of AI deployments, especially when it comes to safeguarding sensitive information from unauthorized access.

Industry experts warn that without robust governance frameworks and monitoring systems in place, organizations may find themselves exposed to significant risks, including data breaches and operational disruptions. As AI systems evolve and become more autonomous, the need for comprehensive security measures becomes even more critical.

The report’s findings underscore the urgent need for organizations to reassess their AI governance strategies. Security leaders are encouraged to implement stricter access controls, develop monitoring capabilities specifically for AI systems, and ensure that all AI tools in use are formally approved. By doing so, organizations can better position themselves to mitigate risks associated with the burgeoning use of AI technologies.

Looking ahead, as organizations continue to navigate the complexities of AI integration, a proactive approach to governance and security will be essential. With the ever-increasing reliance on AI capabilities, addressing these gaps in oversight could not only enhance security but also enable organizations to harness the full potential of AI in a responsible manner.
