UK Finance has highlighted a significant transformation within the financial services sector, primarily driven by artificial intelligence (AI). The integration of AI technologies is enhancing customer interactions through intelligent chatbots, improving fraud prevention, and refining investment strategies. However, this advancement also introduces a new wave of cybersecurity challenges that diverge from conventional IT threats.

The organization notes that AI systems are inherently dynamic, depend on vast datasets, and can behave unexpectedly. These traits create risks such as model tampering, exposure of sensitive data, biased decision-making, and sophisticated adversarial attacks. Such vulnerabilities can arise anywhere in the AI lifecycle, from development to deployment, and they evolve rapidly, which calls for specialized security strategies.

Insights from recent analyses, including the 2025 Wavestone AI Cyber Benchmark and extensive consultations within the industry, underscore the urgent need for financial leaders to prioritize AI security. Experts have reached a consensus on five key strategies aimed at fostering AI that is both innovative and secure.

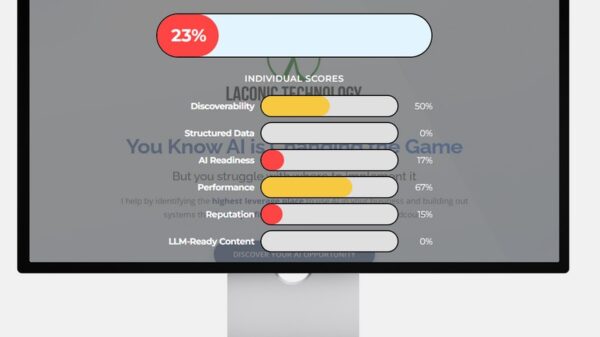

Establishing robust governance is the first imperative. Although approximately 87% of organizations have outlined principles for ethical AI, few have the internal expertise to put those principles into practice, which leaves gaps in protection. Trustworthy AI must weave together security, ethics, regulatory compliance, and reputation management. Progressive firms are responding by creating centralized units, often called Centres of Excellence, that bring together expertise from legal, risk management, compliance, and technology teams. This cross-functional approach helps ensure that AI initiatives align with organizational goals and risk appetite. To meet executive demands for quick returns, some organizations have also set up flexible “innovation labs” with predefined oversight, allowing safe experimentation and rapid scaling of viable projects.

The second strategy is to identify and classify risks early. About 71% of firms now conduct AI-specific assessments at project initiation, systematically reviewing how AI is involved, assessing data sources, distinguishing between in-house and third-party models, and defining operational boundaries. These practices align with the risk-tiered framework of the EU AI Act and help avoid costly retrofits. Consolidating separate assessments (privacy, legal, environmental) into one cohesive process further reduces redundancy, surfaces interconnected threats, and builds shared understanding of emerging AI risks.

The third strategy is to adapt cybersecurity controls to AI’s distinct threat landscape. While 70% of current controls are rooted in traditional defenses, AI introduces new vulnerabilities through its interfaces, training processes, and vendor connections. Leading organizations are mapping their AI infrastructure comprehensively to find weaknesses and running “red team” exercises to uncover flaws such as erroneous outputs or manipulated inputs. They are also using the built-in protections of platforms such as Amazon Bedrock and adapting existing enterprise tools rather than building new ones from scratch. Open-source resources such as Meta’s PurpleLlama and Microsoft’s PyRIT are being used for rigorous testing of AI systems.

The fourth strategy is to strengthen monitoring and detection so that firms have better visibility into their AI systems. Although 72% of firms log AI activity extensively, only a small portion feed these logs into their security operations centers, which limits threat visibility. Financial institutions are encouraged to build in observability that tracks bias, harmful responses, and performance degradation as AI evolves from simple tools into complex orchestrators.

The fifth strategy is readiness for AI-specific incidents, which is non-negotiable. Only 9% of firms currently have tailored response plans, a significant shortfall. Financial leaders must extend standard incident-response protocols to cover AI scenarios, including recovery from attacks and model updates. Building forensic expertise and joining sector-wide AI incident response networks will help firms resolve incidents faster and strengthen their defenses.

In conclusion, securing AI in the financial sector is not merely a matter of technical fixes but a board-level imperative. By embedding trust from the outset, chief information officers and chief information security officers can lead multidisciplinary initiatives that mitigate risk, ensure compliance, and build stakeholder confidence. This proactive approach protects assets and unlocks AI’s full potential, supporting a resilient and intelligent financial ecosystem.