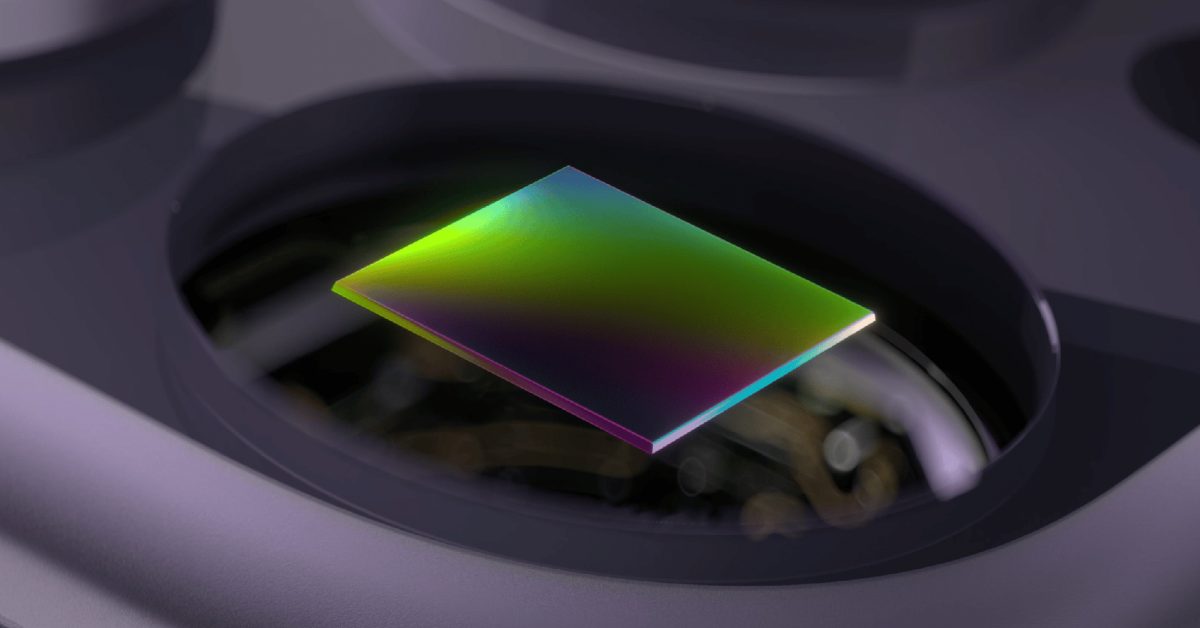

Researchers from Apple and Purdue University have unveiled DarkDiff, an artificial intelligence model designed to improve photography in extremely low-light conditions. By integrating a diffusion-based image model directly into the camera’s image processing pipeline, DarkDiff aims to recover fine detail from raw sensor data that would otherwise be lost. The goal is to address a long-standing problem for mobile photographers shooting in dim environments.

Photographers shooting in low light often end up with grainy images full of digital noise, because image sensors struggle to capture enough light. Companies including Apple have relied on image processing algorithms to clean up these photos, but those techniques are often criticized for producing overly smoothed images that lack fine detail. DarkDiff aims to recover that detail instead of smoothing it away.

The team describes its approach in a recent paper titled “DarkDiff: Advancing Low-Light Raw Enhancement by Retasking Diffusion Models for Camera ISP.” The authors stress the value of high-quality photography in challenging lighting and note that traditional camera image signal processor (ISP) algorithms are increasingly being replaced by more efficient deep learning networks that can enhance noisy raw images more intelligently.

Existing regression-based models tend to oversmooth low-light images. DarkDiff instead retasks Stable Diffusion, an open-source model trained on millions of images, so that it can infer what detail belongs in dark areas from the overall context of the image. The method is designed to preserve local structure and to reduce hallucinations, artifacts in which the AI changes the image content inaccurately.
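A toy illustration of why regression tends to oversmooth, under a simplifying assumption not taken from the paper: suppose a noisy dark patch is equally consistent with two sharp clean patches that differ only in where an edge falls. A model trained to minimize mean-squared error (MSE) is pushed toward the average of the plausible answers, which is blurrier than either of them. The patch values and the `max_step` sharpness measure are hypothetical.

```python
import numpy as np

# Two hypothetical clean 1-D patches that the same noisy input could plausibly come from:
# a sharp dark-to-bright edge that falls at one of two positions.
edge_a = np.array([0.0, 0.0, 1.0, 1.0])
edge_b = np.array([0.0, 1.0, 1.0, 1.0])
candidates = np.stack([edge_a, edge_b])

def expected_mse(prediction: np.ndarray) -> float:
    """MSE of a single prediction, averaged over both equally likely targets."""
    return float(np.mean((candidates - prediction) ** 2))

def max_step(patch: np.ndarray) -> float:
    """Largest jump between neighbouring pixels, used as a crude sharpness measure."""
    return float(np.max(np.abs(np.diff(patch))))

# The MSE-optimal single prediction is the per-pixel mean of the candidates.
regression_output = candidates.mean(axis=0)

print(regression_output.tolist())                              # [0.0, 0.5, 1.0, 1.0]
print(expected_mse(regression_output), expected_mse(edge_a))   # 0.0625 0.125
print(max_step(regression_output), max_step(edge_a))           # 0.5 1.0
```

The averaged output scores better on MSE but puts a half-gray pixel where the edge should be, so the edge is smeared. A generative model can instead commit to one sharp candidate, which is the usual argument for generative approaches like DarkDiff, at the cost of the hallucination risk described above.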

In the processing pipeline, the camera’s ISP first handles early-stage tasks such as white balance and demosaicing. DarkDiff then takes the resulting linear RGB image and denoises it to produce the final sRGB image. It uses a standard diffusion technique called classifier-free guidance, which controls how closely the output follows the input image versus the visual patterns the model has learned. Adjusting the guidance lets the model favor sharper textures while keeping the risk of artifacts in check.
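The stage ordering described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not DarkDiff’s code: it assumes an RGGB Bayer layout, uses the crudest possible demosaic (collapsing each 2x2 block into one pixel), leaves the learned model as a hypothetical `denoise` callable, and applies a fixed sRGB transfer curve at the end, whereas the article describes DarkDiff as producing the final sRGB image itself. It also shows the standard classifier-free guidance combination, in which a guidance scale `w` blends an unconditional prediction with one conditioned on the input; how DarkDiff wires its conditioning internally is not covered here.

```python
import numpy as np

def white_balance(mosaic, gains):
    """Scale each colour site of an RGGB Bayer mosaic by its (r, g, b) gain."""
    r, g, b = gains
    out = mosaic.astype(np.float64)  # astype returns a copy, so the input is untouched
    out[0::2, 0::2] *= r  # red sites
    out[0::2, 1::2] *= g  # green sites on red rows
    out[1::2, 0::2] *= g  # green sites on blue rows
    out[1::2, 1::2] *= b  # blue sites
    return out

def demosaic_binning(mosaic):
    """Crude demosaic: turn each 2x2 RGGB block into one linear RGB pixel (half resolution)."""
    r = mosaic[0::2, 0::2]
    g = 0.5 * (mosaic[0::2, 1::2] + mosaic[1::2, 0::2])
    b = mosaic[1::2, 1::2]
    return np.stack([r, g, b], axis=-1)

def cfg_combine(pred_uncond, pred_cond, w):
    """Standard classifier-free guidance: w = 0 ignores the condition, w = 1 returns the
    conditional prediction, and w > 1 extrapolates further toward the condition."""
    return pred_uncond + w * (pred_cond - pred_uncond)

def linear_to_srgb(linear):
    """Apply the standard sRGB transfer curve to linear values clipped to [0, 1]."""
    x = np.clip(linear, 0.0, 1.0)
    return np.where(x <= 0.0031308, 12.92 * x, 1.055 * np.power(x, 1.0 / 2.4) - 0.055)

def process(mosaic, gains, denoise=lambda rgb: rgb):
    """ISP front end -> denoiser on linear RGB -> sRGB output.
    `denoise` is a hypothetical stand-in for the learned model (identity by default)."""
    linear_rgb = demosaic_binning(white_balance(mosaic, gains))
    return linear_to_srgb(denoise(linear_rgb))

raw = np.full((4, 4), 0.1)  # tiny hypothetical raw frame
print(process(raw, gains=(2.0, 1.0, 1.5)).shape)  # (2, 2, 3)
```

Keeping white balance and demosaicing in a conventional front end means the learned model only has to handle noise and tone on an already well-formed RGB image.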

To validate DarkDiff, the researchers tested it on real photographs taken in low light with cameras such as the Sony A7SII. They compared its performance against other raw enhancement models, including a diffusion-based baseline called ExposureDiffusion. The results showed that DarkDiff could enhance images captured with short exposure times until they were comparable to photographs taken with significantly longer exposures on a tripod.
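Why a short exposure is so noisy, and why simply brightening it does not help, can be shown with a basic shot-noise model. The sketch below assumes photon arrivals are Poisson-distributed and ignores read noise and other sensor effects; the photon counts and the 100x exposure ratio are hypothetical, not values from the paper. Under this model, signal-to-noise ratio (SNR) grows with the square root of the collected light, so 1/100 of the light gives roughly 1/10 the SNR, and digital gain applied afterward scales signal and noise together.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

def capture(mean_photons, n_pixels=100_000):
    """Simulate a flat grey patch: each pixel's photon count is Poisson-distributed."""
    return rng.poisson(mean_photons, size=n_pixels).astype(np.float64)

def snr(patch):
    """Signal-to-noise ratio of a flat patch: mean over standard deviation."""
    return patch.mean() / patch.std()

exposure_ratio = 100  # hypothetical: the short exposure collects 1/100 of the light
long_exposure = capture(10_000)
short_exposure = capture(10_000 / exposure_ratio)
brightened = short_exposure * exposure_ratio  # digital gain to match brightness

print(snr(long_exposure), snr(short_exposure), snr(brightened))
```

The long exposure’s SNR comes out near 100 and the other two near 10: the brightened frame matches the long exposure in brightness but not in noise, which is the gap a learned enhancer has to close.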

Despite the promising results, the researchers caution that DarkDiff’s AI-driven processing may be slower than traditional methods, so it could require cloud-based processing to meet its computational demands. Running it locally on a smartphone could drain the battery quickly. The model also has limitations, notably in recognizing non-English text in low-light scenes.
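One reason diffusion-based processing tends to be slower can be shown with simple arithmetic, under assumptions the article does not state: a regression model produces its output in a single network pass, while a diffusion model runs its denoising network once per sampling step, and standard classifier-free guidance needs both a conditional and an unconditional prediction at each step. The step counts below are hypothetical; the article gives neither DarkDiff’s sampling schedule nor its per-pass cost.

```python
def network_passes(num_steps: int, classifier_free_guidance: bool) -> int:
    """Denoising-network evaluations needed for one image.

    Standard classifier-free guidance evaluates the network twice per step
    (conditional and unconditional); without it, once per step.
    """
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    return num_steps * (2 if classifier_free_guidance else 1)

REGRESSION_PASSES = 1  # a one-shot regression network runs once per image

for steps in (20, 50):  # hypothetical sampling-step counts
    passes = network_passes(steps, classifier_free_guidance=True)
    print(f"{steps} steps with CFG: {passes} network passes vs {REGRESSION_PASSES} for a regression model")
```

This counts passes only; real latency and battery cost also depend on the size of each network, which the sketch does not model.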

There is no indication that DarkDiff will ship in upcoming iPhones, but the research underscores Apple’s investment in computational photography, a growing priority across the smartphone industry as consumers look for camera capabilities beyond what conventional hardware alone can deliver. If the approach proves practical, it could set new standards for mobile photography, letting users capture clearer, more detailed images even in challenging lighting.
