A recent AI-generated video featuring a synthetic version of actress Sydney Sweeney has gone viral after tech billionaire Elon Musk shared it on his social media platform, X. The clip serves as a demonstration of new AI video technology and has sparked a broader conversation about AI, celebrity images, and digital consent. Within hours, the post had spread across multiple platforms, prompting debate over the ethical implications of the technology.

The video uses artificial intelligence to create a highly realistic scene featuring a digital character resembling Sweeney. Observers noted the quality of the facial detail, expressions, and movements in the generated footage, pointing to the progress made in AI video tools. In his post, Musk highlighted improvements in both video length and audio quality, reflecting how quickly the field is developing.

Grok video is now 10 seconds and the audio is greatly improved

pic.twitter.com/tN6hX5cs6o

— Elon Musk (@elonmusk) January 28, 2026

From a technological standpoint, supporters of AI-generated media believe such advances hold significant potential for films, advertisements, and online content. However, the video’s reception was mixed, with many users questioning whether a celebrity’s likeness should be used in AI videos without explicit permission. That question became a central thread of the online discussion about digital ethics.

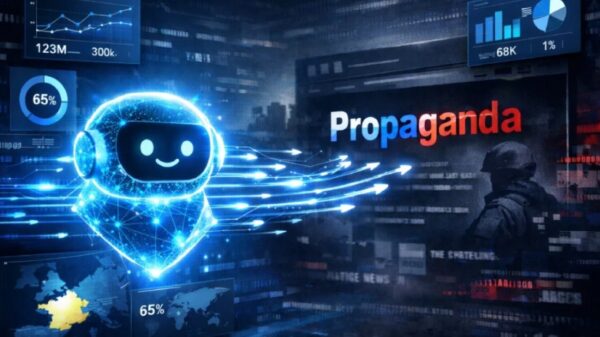

Among the main concerns articulated by users were the unauthorized use of a celebrity’s likeness, the potential for confusion between real and AI-generated videos, risks of synthetic media misuse, and the lack of clear regulations governing AI identity use. Given the video’s realistic nature and its connection to a well-known figure, the reactions were notably more intense than those typically drawn by standard AI demonstrations.

The implications of this viral AI video extend beyond mere entertainment; they touch on significant legal and ethical questions concerning celebrity image rights. Experts note that existing laws in many regions are struggling to keep pace with the capabilities of AI tools, raising urgent questions about ownership and consent when AI can replicate someone’s face and voice.

Calls for clearer regulations around AI-generated content, including consent protocols for likeness usage and platform guidelines, are gaining momentum. While some social media platforms have begun implementing AI content labels, there remains inconsistency in regulations across different jurisdictions. The situation illustrates a pressing need for updated digital rights laws that adequately address the realities of AI technology.

The emergence of this viral AI video demonstrates that AI-generated media has entered the mainstream. What began as experimental technology is now readily accessible to everyday users, reshaping the landscape of digital content creation. As AI video tools continue to improve and become easier to use, it is likely that we will see an influx of similar content in the future. For audiences, creators, and platforms alike, the emphasis will likely shift toward transparency, consent, and clear labeling, ensuring viewers can discern the nature of the media they consume.
