In a significant shift for content moderation, Meta, the parent company of Facebook and Instagram, is overhauling its policies on synthetic media as the 2024 election cycle heats up. The change responds to a critical review by its Oversight Board, which called the company’s existing rules on manipulated media “incoherent.” The review underscored the need to distinguish deceptive “manipulated media” from content merely made with the help of AI tools, a distinction Meta would later reflect in its “AI info” label, and it marks a pivotal moment in the tech giant’s approach to misinformation. Meta’s new strategy favors contextual labeling over outright removal of misleading content, aiming for more nuanced governance of digital authenticity.

The impetus for Meta’s policy change came from a specific case: a manipulated video of President Joe Biden. The Oversight Board used it to illustrate the shortcomings of Meta’s previous rules, which narrowly targeted only AI-generated content that altered what a person said. As Mashable reported, the Board criticized the company for leaving a wide range of misleading content unaddressed, including “cheap fakes” made with conventional editing techniques. Rather than deciding what to remove based on how content was created, the Board urged Meta to label manipulated media to improve transparency.

In response, Meta moved quickly to change its strategy, signaling that it intends to step back from the role of “arbiter of truth” on synthetic media. By labeling content rather than removing it, the company aims to balance free expression against user safety. The initiative extends “Made with AI” labels to a broader range of media, triggered either by user self-disclosure or by industry-standard indicators such as C2PA metadata and the invisible watermarks embedded by generative AI tools like Midjourney and DALL-E 3. In practice, however, the rollout quickly ran into problems.

Metadata standards that are sound in theory proved messy in real-world use. Meta’s Newsroom explained that content identified as manipulated would stay on the platform unless it violated other community standards, such as those against voter suppression or harassment. This effectively shifted the burden of judgment from moderators to users, adding to the confusion of an already chaotic information environment.
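The labeling rule described above, where any provenance signal attaches a label and removal is reserved for violations of other standards, can be sketched as a small decision function. This is a minimal illustration under stated assumptions, not Meta’s implementation: the `MediaItem` fields, the `moderate` function, and the `Action` values are hypothetical, and real detection of C2PA metadata or watermarks is reduced to precomputed booleans.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Action(Enum):
    KEEP = "keep"
    LABEL = "label"
    REMOVE = "remove"


# Label text at launch; Meta later renamed it "AI info".
LABEL_TEXT = "Made with AI"


@dataclass
class MediaItem:
    # Hypothetical signals for illustration only, not Meta's actual schema.
    self_disclosed_ai: bool = False      # uploader declared the media AI-generated
    c2pa_ai_marker: bool = False         # C2PA metadata names a generative tool
    invisible_watermark: bool = False    # a detector found a generator's watermark
    violates_other_policy: bool = False  # e.g. voter suppression or harassment


def moderate(item: MediaItem) -> Tuple[Action, Optional[str]]:
    """Return the moderation action and, when labeling, the label text."""
    # Being synthetic or manipulated is not, by itself, grounds for removal;
    # removal applies only when another community standard is broken.
    if item.violates_other_policy:
        return Action.REMOVE, None
    # Any single provenance signal is enough to attach the label.
    if item.self_disclosed_ai or item.c2pa_ai_marker or item.invisible_watermark:
        return Action.LABEL, LABEL_TEXT
    return Action.KEEP, None


print(moderate(MediaItem(c2pa_ai_marker=True)))
print(moderate(MediaItem()))
```

Because any one signal triggers the label, a rule of this shape treats a lightly retouched photo whose metadata records an AI-assisted edit exactly like a fully generated image.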

Professional photographers and digital artists reacted quickly, complaining that authentic images were being stamped with “Made with AI” badges even when they had only used standard retouching tools such as Adobe Photoshop. The backlash exposed deep frustration in the creative community, which argued that the label delegitimized their craft by conflating minor edits with entirely synthetic creations. The badge came to be seen as a “scarlet letter” that undermined trust in genuine photojournalism and artistic photography.

Amid the backlash, Meta acknowledged the need for more precise terminology and, in July 2024, replaced the tag with “AI info.” The new wording is meant to signal that AI tools played some part in creating an image without implying that the whole image was fabricated. As The Verge reported, the change was a direct response to user feedback, but it also underscored a persistent challenge for platforms: distinguishing AI used as a tool from AI used as a source of misinformation.

Despite these broader labeling efforts, the Oversight Board remained concerned about a significant loophole: “cheap fakes.” These manipulated images or videos are often produced with conventional editing techniques, such as altering audio to misrepresent a speaker or cropping footage to strip out critical context. The Board warned that Meta’s focus on sophisticated deepfakes left such low-tech manipulations largely unaddressed and free to spread.

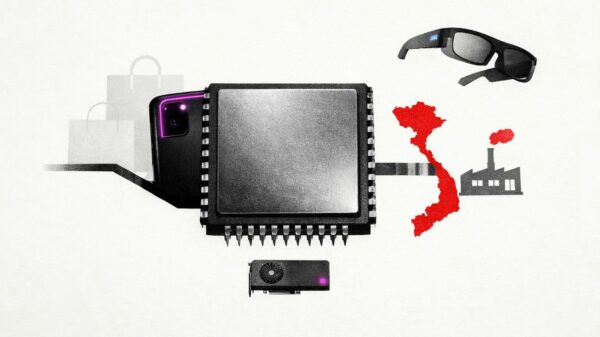

Meta’s labeling work is part of a broader industry move toward the standards of the Coalition for Content Provenance and Authenticity (C2PA), which are designed to embed tamper-evident provenance metadata in digital files. Their effectiveness is limited, however, because many platforms strip metadata from files during upload. Unless platforms such as Meta read and preserve this provenance data, something Meta is currently working on, it can be lost before users ever see it.
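The idea behind “tamper-evident” provenance, and why stripping metadata defeats it, can be shown with a greatly simplified sketch. It is not the C2PA manifest format: the `make_manifest` and `verify` functions, the `UploadedFile` type, and the shared HMAC key are hypothetical stand-ins (real C2PA manifests use certificate-based signatures). The manifest binds a claim to a hash of the file’s exact bytes, so any later edit is detectable, but a file whose manifest was removed simply has nothing left to check.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass
from typing import Optional

# Hypothetical shared secret. Real C2PA manifests are signed with
# certificates; an HMAC stands in here only to keep the sketch self-contained.
SIGNING_KEY = b"demo-key-not-for-production"


@dataclass
class UploadedFile:
    content: bytes
    manifest: Optional[dict]  # None models metadata stripped on upload


def _sign(claim: dict) -> str:
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_manifest(content: bytes, generator: str) -> dict:
    """Bind a provenance claim to the exact bytes via a content hash."""
    claim = {"generator": generator, "sha256": hashlib.sha256(content).hexdigest()}
    return {"claim": claim, "signature": _sign(claim)}


def verify(upload: UploadedFile) -> str:
    if upload.manifest is None:
        return "no provenance"  # nothing to check; indistinguishable from unlabeled
    claim = upload.manifest["claim"]
    if not hmac.compare_digest(_sign(claim), upload.manifest["signature"]):
        return "tampered"  # the claim itself was altered
    if hashlib.sha256(upload.content).hexdigest() != claim["sha256"]:
        return "tampered"  # the file's bytes changed after the claim was made
    return "verified"


image = b"example image bytes"
manifest = make_manifest(image, "ExampleGenerator")
print(verify(UploadedFile(image, manifest)))            # verified
print(verify(UploadedFile(image + b"edit", manifest)))  # tampered
print(verify(UploadedFile(image, None)))                # no provenance
```

The asymmetry is the point: an altered file is flagged, while a stripped file looks exactly like one that never carried provenance data, so metadata lost on upload disappears silently rather than raising an alarm.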

Upcoming elections around the world will test whether labeling works, amid fears that AI-generated disinformation could undermine democratic processes. The “Biden Robocall” incident in New Hampshire, in which an AI-generated imitation of Biden’s voice misled voters, has amplified concerns about generative technology. By pivoting to labels, Meta is betting that users can interpret these tags and calibrate their trust accordingly, rather than relying on the platform to remove harmful content.

Critics warn, however, that such labels may fall short in a hyper-polarized environment where users often share content without scrutiny. The difference between an “AI info” tag and unlabeled content is easy to miss while rapidly scrolling through a social feed. And given the sheer volume of content, much synthetic media could evade even automated detection systems. NPR has reported that regulators are struggling to keep pace, which leaves platform policies as a critical line of defense against misinformation.

Ultimately, Meta’s evolution from “manipulated media” rules to “AI info” labels reflects a broader truth about the digital landscape: the boundary between authenticity and fabrication is increasingly blurred. The Oversight Board’s intervention forced Meta to acknowledge that its policies were ill-suited to current reality, and the resulting framework tries to cope with an environment in which everything from smartphone photos to fully AI-generated content competes for attention.

For industry stakeholders, the key takeaway is that binary, keep-or-remove content moderation is giving way to a more intricate system of metadata, contextual labels, and user disclosures. This reduces the risk of censorship but places greater cognitive demands on users, who must judge the authenticity of what they see. As the technology evolves, the success of these platforms’ policies will hinge less on detecting every synthetic image than on conveying information in a way users can readily understand and trust.
