Governments worldwide are increasingly turning to artificial intelligence (AI) to enhance the efficiency of public services, envisioning a future where algorithms manage the day-to-day administration of various functions. The potential applications are vast, ranging from combating tax fraud to improving public health services by screening for cancer and triaging cases at scale. However, these advancements raise critical questions about the reliability and ethical implications of AI systems, particularly in high-stakes environments.

Instances of AI misjudgments have already demonstrated the potential for severe consequences. In the Netherlands, for example, flawed algorithmic assessments used to identify families allegedly committing benefits fraud drove innocent households into financial ruin and, in some cases, led to children being separated from their parents. Such outcomes highlight the danger of relying on risk-scoring systems that can misinterpret data when no human is overseeing them.

The pressing concern is the replacement of human judgment with algorithmic decision-making. There is a fundamental assumption that AI will yield accurate results, but this is not always the case. People’s lives cannot easily be distilled into data points for algorithms to evaluate. When AI systems fail, accountability becomes murky. Who bears the responsibility for errors made by these automated systems?

Despite substantial investments and interest in AI, evidence is mounting that the technology does not consistently deliver on its promises. The phenomenon of “hallucinations,” where AI generates plausible yet false content, remains unresolved. Industry leaders, including a co-founder of OpenAI, have acknowledged that merely increasing the size of large language models (LLMs) will not significantly enhance their reliability or efficacy.

As AI continues to be integrated into crucial sectors such as law, journalism, and education, there are fears about the implications for human learning and critical thinking. One possible future envisions universities where lectures and assignments are designed by LLMs, leading to a scenario where students engage in machine-to-machine communication rather than authentic learning experiences. This shift could undermine the very critical thinking skills and expertise that educational institutions are meant to foster.

Profit and Power

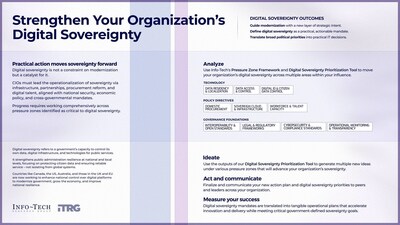

For AI companies, the integration of technology into public infrastructure has proven highly profitable. As AI becomes more embedded in various sectors, these firms gain an indispensable status, complicating oversight and regulation. The development of autonomous weapons for the defense sector exemplifies how a company could become “too big to fail,” as national security could hinge on its technologies.

When issues arise, the knowledge gap between governments, citizens, and AI developers only deepens reliance on the very companies whose systems may have caused the problems in the first place. A historical parallel can be drawn to the early days of social media, which began with the promise of connecting people but has since raised significant concerns regarding privacy, surveillance, and misinformation. Today, social media, AI, and machine learning combine into a feedback loop in which attention-seeking algorithms generate content that further entrenches users in informational bubbles.

Even if AI evolves to become more accurate and capable, society must critically assess how much control it cedes to algorithmic systems in pursuit of order and efficiency. Technology alone cannot address social, economic, or moral challenges. If it could, fundamental problems like hunger would not persist in a world that produces sufficient food for all.

Critics of AI are often labeled Luddites, a term that misrepresents their stance. The Luddites of the 19th century did not oppose technology outright; instead, they challenged its unreflective deployment and the ways it reshaped work and communities. Today, this call for a more thoughtful examination of technology’s impact remains relevant. As societies increasingly rely on AI, a deeper understanding of its implications is essential to ensure that these systems serve humanity rather than undermine it.
