A controversial AI-generated video of Gloucester’s mayor, Ashley Bowkett, has ignited calls for stricter regulation of the use of artificial intelligence in politics. The video, created by independent councillor Alastair Chambers, depicted Bowkett chuckling while allegedly dismissing investigations into £8 million missing from the city’s budget. The incident raises significant ethical concerns at a time when Gloucester City Council is seeking a government bailout following a series of financial overspends.

The video has drawn sharp criticism, particularly from former mayor Kathy Williams and council leader Jeremy Hilton. Williams emphasized the need for clearer standards in council meetings, stating, “The AI thing is a new tool… it has its advantages, but it also has disadvantages.” Hilton condemned the video as “psychological bullying,” urging Chambers to reconsider his actions. Following the backlash, Bowkett clarified that he had never refused to hold a meeting regarding the council’s financial situation, stressing that an emergency debate on the matter would take place in December after some negotiation with the Conservative leader, Stephanie Chambers.

Alastair Chambers defended his creation, describing it as a “recreation” rather than a deepfake. He explained that the video was intended to highlight the council’s poor financial management and claimed that Bowkett was not offended by it, as the two had since shared a cordial conversation. He argued that the uproar surrounding the video was a distraction from the council’s financial mismanagement and suggested that Hilton’s comments were an attempt to “create smoke and mirrors.”

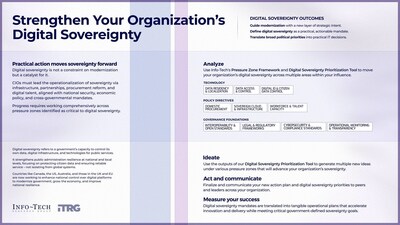

As discussions around the implications of AI in politics escalate, other local figures have voiced their concerns. James Vincent, founder of Digital Resistance, a company aimed at educating individuals about AI threats, called for comprehensive regulations on AI-generated political content. He stated, “There needs to be a law… that actually protects individuals in saying that politicians or people within power should not be generating this kind of content and distributing it.” Vincent warned that the rapid evolution of AI technology complicates the public’s ability to discern reality from fabrication.

The Gloucester incident underscores a growing anxiety regarding the application of AI in political discourse, particularly as the technology becomes more accessible. Recent developments in this area include the UK government’s ongoing discussions with social media platform X about its AI service Grok, along with proposed modifications to the Online Safety Act. However, specific guidelines on regulating AI-generated content remain unclear.

As the debate continues, the urgency for a framework governing AI usage in politics becomes increasingly evident. The Gloucester incident not only highlights the potential risks associated with AI in public discourse but also serves as a catalyst for broader conversations about ethical standards in political communication. With the technology advancing at such a rapid pace, stakeholders from various sectors are calling for immediate action to ensure that AI does not compromise the integrity of democratic processes.
