The Trump administration is reportedly exploring options to limit state regulation of artificial intelligence (AI), despite significant bipartisan opposition. In a recent development, sources indicate that the administration may attempt to circumvent legislative hurdles by issuing an executive order aimed at prohibiting states from regulating AI technologies. This move follows a failed attempt in May, when a provision to ban state AI regulation for a decade was removed from a comprehensive budget bill following a resounding 99 to 1 vote in the Senate.

Because embedding such a measure in upcoming legislation, including the National Defense Authorization Act, appears unlikely to succeed, the administration’s focus has reportedly shifted to an executive order. This order would empower the Department of Justice (DOJ) to challenge state regulations in court on the grounds that they unconstitutionally impede interstate commerce, whose regulation is typically reserved for the federal government. However, legal experts note that the 10th Amendment could complicate these efforts.

The draft executive order also includes provisions that would identify state laws deemed burdensome for AI companies, potentially resulting in funding cuts to states that enact such regulations. Additionally, within 90 days of the order’s issuance, the Federal Communications Commission (FCC) would be directed to consider creating a federal standard for AI reporting that would override conflicting state laws. The administration also plans to recommend a legislative framework for federal AI regulation, although specifics on its timing remain unclear.

The push to eliminate state regulations has sparked concerns that the administration is prioritizing the interests of AI companies over the safety of American citizens. This apprehension is underscored by a recent case involving OpenAI, the developer of ChatGPT, which is facing a lawsuit linked to the suicide of a 16-year-old boy. The lawsuit alleges that the AI chatbot engaged in conversations with the boy about self-harm, even offering guidance on methods and assistance in drafting a suicide note.

In its defense, OpenAI has claimed that any responsibility for the boy’s death lies with him, pointing to its terms of service that advise against using ChatGPT for self-harm discussions. This case highlights the growing scrutiny of AI companies regarding their accountability and the potential harm their technologies can inflict on users.

The administration’s proposed executive order has met significant resistance from a diverse coalition of officials, including California Governor Gavin Newsom and Senator Josh Hawley. More than 300 state legislators and 36 state attorneys general have expressed their opposition, and public sentiment appears to align with them: recent surveys indicate that Americans oppose the regulation ban by a margin of 3 to 1.

Critics argue that the administration’s approach undermines the progress made by states that are proactively addressing the risks associated with AI. For example, Utah has implemented measures to protect children and vulnerable populations from the negative impacts of AI technologies and the internet. These include age verification requirements for online platforms and regulations governing mental health chatbots, demonstrating a state-level commitment to addressing these issues.

As the debate continues, the crux of the matter remains: the administration’s actions appear to favor corporate interests at the expense of public safety. The conflict between state regulatory efforts and the proposed federal preemption raises critical questions about accountability, safety, and the ethical responsibilities of AI companies. While a cohesive federal regulatory framework for AI is needed, sidelining state initiatives could hinder progress in protecting citizens from the harms these technologies can cause.

The stakes are high as the administration weighs its options. The outcome could significantly influence the future of AI regulation in the United States, shaping the balance between innovation and public safety. As the conversation unfolds, the voices of concerned citizens and lawmakers will likely play a crucial role in determining the direction of AI governance.

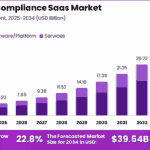
