AI regulation remains a contentious issue in the United States, characterized by fragmented state-level efforts and a push for federal oversight. In 2025, states like California and New York began implementing regulations that emphasize transparency, whistleblower protections, and the safeguarding of teenage users. Advocates for unregulated AI growth argue that these state laws could hinder innovation and give international competitors, particularly China, an edge in the AI landscape.

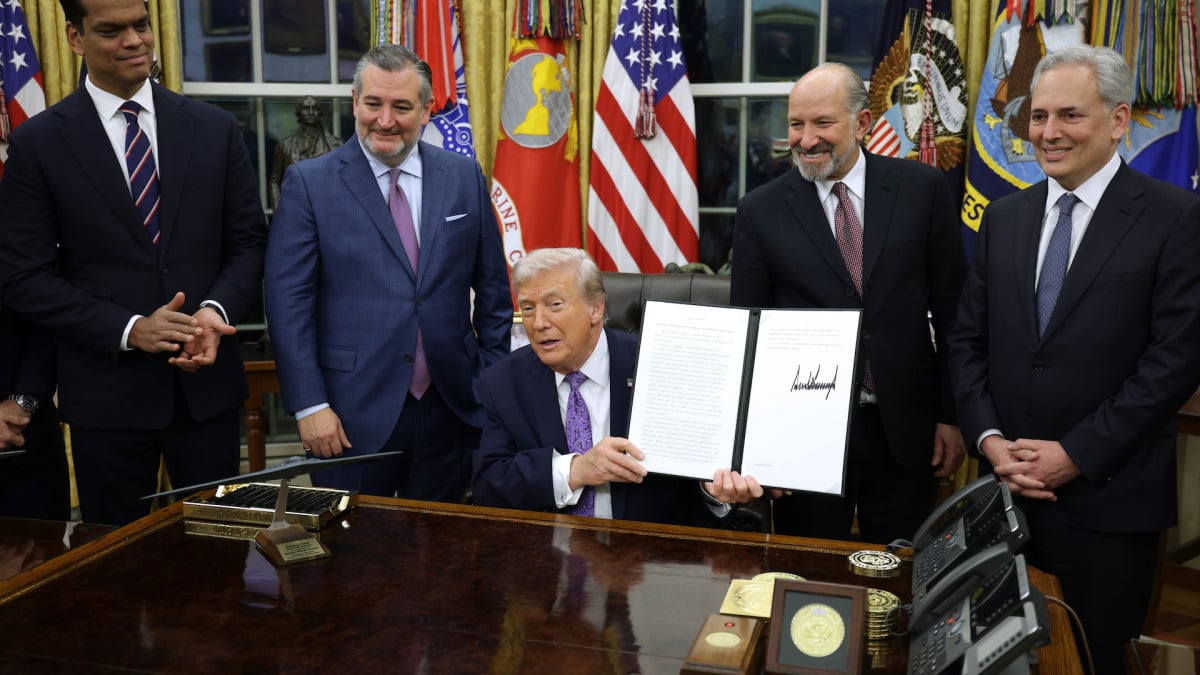

In a decisive move, President Donald Trump signed an executive order on Thursday aimed at establishing a cohesive federal framework for AI regulation. The order asserts that “to win, United States AI companies must be free to innovate without cumbersome regulation,” and warns that “excessive State regulation thwarts this imperative.”

One of the more controversial aspects of the executive order is its provision allowing for the withholding of federal funds from states that enact what the order deems “onerous AI laws.” Federal agencies may condition state grants, especially those aimed at enhancing high-speed internet access in rural areas, on compliance with this national policy.

Produced in consultation with David Sacks, a tech venture capitalist and the Trump administration’s special advisor for AI and crypto, the order has drawn criticism. A recent investigation by the New York Times highlighted potential conflicts of interest, revealing that many of Sacks’ investments could benefit from the policies outlined in the executive order.

Critics of the order include Michael Kleinman, head of US Policy at the tech research nonprofit Future of Life Institute, who labeled it a “gift for Silicon Valley oligarchs.” Kleinman argued that unlike other industries, AI operates with minimal oversight, which he described as a dangerous precedent. “Unregulated AI threatens our children, our communities, our jobs, and our future,” he remarked.

The debate surrounding AI regulation has intensified following legal actions against companies like OpenAI, the creator of ChatGPT, which faces multiple lawsuits from families of teens who tragically died by suicide after engaging with the chatbot. The company has denied responsibility, particularly in the case of Adam Raine, a 16-year-old who reportedly discussed his suicidal feelings with ChatGPT before taking his life. The new order emphasizes the need to “ensure that children are protected.”

Coinciding with the signing of the executive order, a trio of child safety advocacy groups released a public service announcement (PSA) highlighting the potential dangers of AI chatbots for children, explicitly urging that states should not be hindered in their regulatory efforts.

Earlier this year, Trump’s “One Big Beautiful Bill Act” included a proposed decade-long moratorium on state regulation of AI, a provision that faced significant backlash, including from within his own party. A bipartisan Senate vote ultimately defeated the measure by a margin of 99 to 1. Factions of Trump’s base, including notable supporters like Steve Bannon, continue to resist the industry’s push against regulation.

The reception of Trump’s executive order remains uncertain, particularly given the existing opposition to federal oversight. Legal challenges could also arise, raising questions about whether the order’s provisions will hold up. As the regulatory landscape for AI continues to evolve, the implications for innovation, safety, and public welfare will likely remain at the forefront of national discourse.
