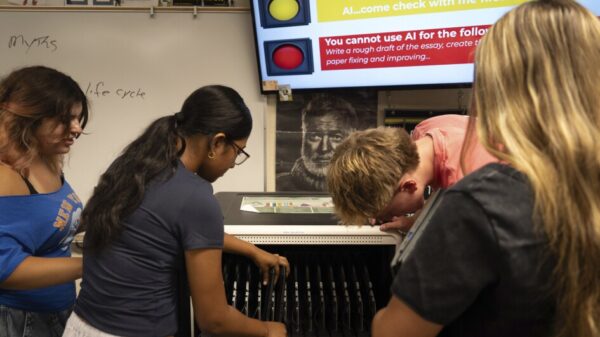

In June 2025, researchers uncovered a vulnerability that exposed sensitive Microsoft 365 Copilot data without any user interaction. Named EchoLeak, the exploit differs from conventional breaches, which typically rely on phishing or user error. Instead, it silently extracted confidential information by manipulating the way Copilot interacts with user data. The incident reveals a critical gap in modern cybersecurity frameworks, which are designed primarily for predictable software systems and conventional application-layer defenses.

The EchoLeak vulnerability underscores how cybersecurity threats are changing as organizations build artificial intelligence into their operations. Traditional security measures, which often focus on user behavior and external threats, are becoming less effective against sophisticated exploits that target AI systems. Experts emphasize that the interconnected nature of AI infrastructure complicates existing security paradigms and calls for a comprehensive reevaluation of security strategies.

As reliance on AI tools like Microsoft 365 Copilot grows, so does the potential for systemic vulnerabilities. These tools, designed to enhance productivity and streamline workflows, can inadvertently become channels for data leaks when security protocols fail to keep pace with the technology. The EchoLeak incident is a reminder that integrating AI into business processes must be accompanied by equally robust security measures.

In light of this vulnerability, organizations are urged to adopt a more proactive approach to cybersecurity. This includes not only upgrading existing security systems but also fostering a culture of awareness around the risks associated with AI technologies. Experts recommend that companies invest in advanced threat detection capabilities and ensure that data governance frameworks are updated to address the unique challenges posed by AI applications.

The significance of the EchoLeak exploit extends beyond Microsoft and its users. It raises broader questions about the security of AI systems across various sectors, from finance to healthcare. As organizations rush to harness the benefits of AI, the potential repercussions of neglecting security cannot be overstated. This incident may serve as a catalyst for regulatory bodies to impose stricter guidelines on AI deployment and security standards.

Looking ahead, the focus will likely shift towards developing adaptive security frameworks that can respond to the dynamic threats posed by AI. Experts advocate for the integration of machine learning into security protocols to enhance real-time threat detection and response capabilities. This transition will require collaboration between technology developers, cybersecurity professionals, and policymakers to create a safer digital environment.

In conclusion, the EchoLeak vulnerability serves as a pivotal example of the challenges that lie ahead in the realm of AI security. As companies increasingly integrate these advanced technologies, the lessons learned from such incidents must inform future strategies. A renewed commitment to cybersecurity will be essential to safeguarding sensitive information in an era where AI tools are becoming ubiquitous across business operations.

See also Thinking Machines Lab Launches Tinker, Secures $2B Funding at $12B Valuation

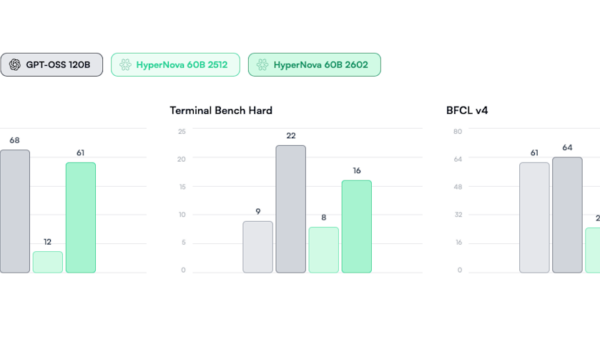

Thinking Machines Lab Launches Tinker, Secures $2B Funding at $12B Valuation AI Benchmarks Flawed: Study Reveals Variability in Performance Metrics and Testing Methods

AI Benchmarks Flawed: Study Reveals Variability in Performance Metrics and Testing Methods AI Memorization Crisis: Stanford Reveals Major Copyright Risks in OpenAI, Claude, and Others

AI Memorization Crisis: Stanford Reveals Major Copyright Risks in OpenAI, Claude, and Others Researchers Develop Physics-Informed Deep Learning Model for Accurate Rainfall Forecasting

Researchers Develop Physics-Informed Deep Learning Model for Accurate Rainfall Forecasting Automated Machine Learning Improves Frailty Index Accuracy for Spinal Surgery Outcomes

Automated Machine Learning Improves Frailty Index Accuracy for Spinal Surgery Outcomes