Kakao’s artificial intelligence (AI) model, “Kanana,” has been recognized for its safety, outperforming some global counterparts according to the first AI safety evaluation conducted in South Korea. The Ministry of Science and ICT announced the results on the 29th, highlighting the evaluation’s importance in reinforcing the safety of high-performance AI models ahead of the “Basic AI Act,” which is set to be enforced in January next year.

This evaluation, a collaboration between the Ministry, the AI Safety Research Institute, and the Telecommunications Technology Association (TTA), marks a significant step in establishing safety protocols for AI technologies in the country. The Basic AI Act will require high-performance AI models to have safety measures in place, creating a framework for responsible AI deployment.

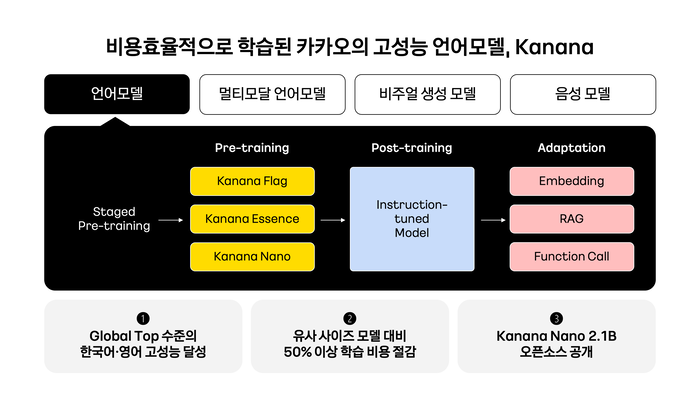

The focus of this inaugural evaluation was Kakao’s “Kanana Essence 1.5 9.8B,” a medium-sized model in the company’s portfolio. As a member of the domestic AI safety consortium, Kakao took part in the assessment through consultations aimed at ensuring compliance with the forthcoming legislation.

The evaluation used the “AssurAI” dataset, developed by TTA and KAIST research teams, alongside high-risk assessment datasets from the AI Safety Research Institute. The method involved testing the AI model’s responses to queries that probe various risk factors, including violent and discriminatory content, as well as scenarios with high potential for misuse, such as weapon-related inquiries.

Following rigorous testing, the evaluation determined that Kanana exhibited a high level of safety when compared to similar global models, including Meta’s “Llama 3.1” and Mistral’s “Mistral 0.3.” This finding not only underscores the capabilities of domestic AI technologies but also positions Kakao favorably in the ongoing discourse surrounding AI safety and responsibility.

Kim Kyung-man, head of the artificial intelligence policy office at the Ministry of Science and ICT, remarked on the significance of the evaluation. He stated, “This evaluation proves the safety competitiveness of domestic AI models at a time when discussions on AI safety worldwide are emphasizing verification and implementation rather than regulation.”

The Ministry has plans to extend the safety evaluation framework to its own AI foundation model project, slated for implementation in January. This initiative aims to broaden the safety assessment efforts to include various AI models in collaboration with both domestic and international companies.

As global conversations about AI safety evolve, the role of national frameworks such as South Korea’s Basic AI Act may become increasingly pivotal. The implementation of systematic evaluations could set a precedent for AI safety regulations worldwide, influencing how similar technologies are developed and deployed across different markets.
