Thinking Machines Lab, an AI startup co-founded by former OpenAI CTO Mira Murati, has quickly emerged as a notable player in the competitive AI landscape. Founded in February 2025, the company raised $2 billion within its first five months at a valuation of $12 billion. The rapid funding underscores investor interest in its mission to change how AI models are fine-tuned and customized for specific tasks.

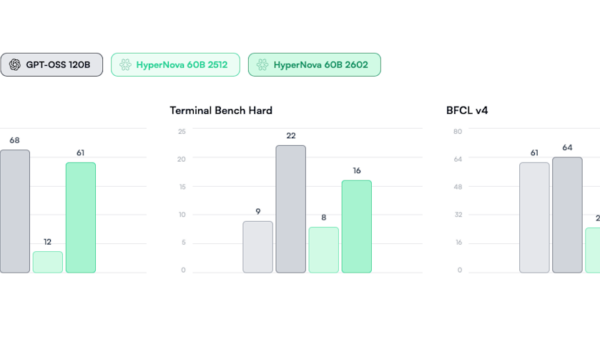

Unlike AI labs that focus on building ever-larger models requiring immense data and computing resources, Thinking Machines Lab is dedicated to creating smarter, more efficient models. Its flagship product, Tinker, lets developers tailor AI models to specific applications without the costs and complexity typically associated with distributed computing.

Murati, who briefly served as interim CEO of OpenAI following the contentious firing and reinstatement of Sam Altman, helped assemble a team of over 30 researchers, drawing talent from Meta, Mistral, and other leading AI organizations. The company has gained attention not only for its innovative technology but also for its unconventional governance structure; Murati retains voting powers that surpass those of the board of directors, granting her significant influence over the company’s direction.

In an industry where speculation runs rampant, Thinking Machines Lab has maintained an air of mystery while gradually revealing its ambitious goals. The company aims to develop multimodal AI systems capable of collaboration across various fields, emphasizing the need for more accessible and customizable AI solutions. Its commitment to transparency is reflected in its research publications, covering topics from GPU optimization to novel fine-tuning methods like Low-Rank Adaptation (LoRA), which offers an efficient alternative to traditional model retraining.

LoRA freezes a model's pretrained weights and trains only a small set of newly introduced parameters, making it feasible to fine-tune models without the computational overhead of full retraining. The method has been incorporated into Tinker, which is already used by researchers at institutions such as Princeton, Stanford, and UC Berkeley.
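To make the idea concrete, here is a minimal sketch of a single LoRA-adapted layer in plain Python. It follows the standard published LoRA formulation (a frozen weight matrix plus a scaled low-rank product) and is not Tinker's implementation; the class name `LoRALinear`, the helper `matvec`, and the parameters `rank` and `alpha` are illustrative assumptions.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


class LoRALinear:
    """Hypothetical illustration: a frozen linear layer with a low-rank adapter.

    Output is W x + (alpha / rank) * B (A x), where W stays frozen and
    only the small matrices A and B would be updated during fine-tuning.
    """

    def __init__(self, weight, rank, alpha=1.0, seed=0):
        rng = random.Random(seed)
        self.weight = weight  # frozen pretrained weights, never updated
        self.d_out, self.d_in = len(weight), len(weight[0])
        self.rank = rank
        self.scale = alpha / rank
        # A starts as small random values and B as zeros, so the adapter
        # contributes nothing until training changes B.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(self.d_in)]
                  for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(self.d_out)]

    def forward(self, x):
        base = matvec(self.weight, x)                # frozen path
        update = matvec(self.B, matvec(self.A, x))   # low-rank path
        return [b + self.scale * u for b, u in zip(base, update)]

    def trainable_params(self):
        # Only A (rank x d_in) and B (d_out x rank) are trained.
        return self.rank * (self.d_in + self.d_out)

    def frozen_params(self):
        return self.d_out * self.d_in


if __name__ == "__main__":
    rng = random.Random(1)
    W = [[rng.gauss(0.0, 1.0) for _ in range(64)] for _ in range(64)]
    layer = LoRALinear(W, rank=4)
    print("frozen parameters:   ", layer.frozen_params())
    print("trainable parameters:", layer.trainable_params())
```

For a 64 by 64 layer with rank 4, the adapter adds 512 trainable parameters against 4,096 frozen ones. In real models, where layers are far larger, the gap is much wider, which is the source of the savings described above.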

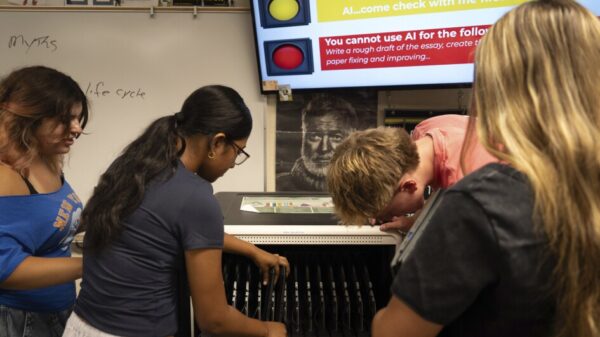

Tinker was officially launched in October 2025 and enables users to customize a variety of open-source models, including those from Meta and OpenAI. By providing a user-friendly interface for fine-tuning, Tinker facilitates the specialization of AI models, making them more applicable to niche domains such as healthcare and legal studies. While it streamlines the customization process, users still need a foundational understanding of machine learning to effectively navigate its features.

As Thinking Machines Lab approaches its one-year mark, the company is eyeing an additional $5 billion in funding, aspiring to reach a valuation of $50 billion. This ambition aligns with plans for 2026, which include the introduction of proprietary models and enhanced capabilities for Tinker to support users with limited technical expertise in fine-tuning processes.

John Schulman, the company’s chief scientist, hinted that Tinker could broaden AI accessibility, making it easier for a wider audience to adapt advanced AI technology to specific needs. The AI sector is watching Thinking Machines Lab closely, as its approach to customization and efficiency could redefine industry standards.
