Certain short-form videos on major social media platforms can trigger suicidal thoughts among vulnerable viewers, according to new research led by the University of Delaware’s Jiaheng Xie. The study highlights the potential dangers posed by specific viral content, especially to young and impressionable audiences.

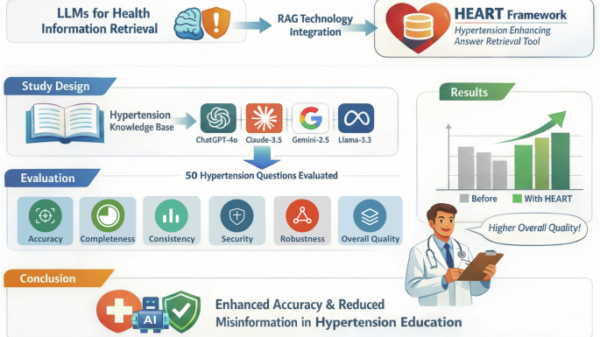

Xie’s team developed an AI model that predicts and flags videos that may pose a risk to viewers. Their findings, published in the journal Information Systems Research, show how the tool can strengthen safety protocols by identifying high-risk videos before they spread widely. The model evaluates both the content of the videos and the sentiments expressed in viewer comments, giving it two complementary signals for assessing risk.

Xie, an assistant professor of accounting and management information systems, emphasized that the model can differentiate between the creator’s intentions and the audience’s perceptions. “Our tool can distinguish what creators choose to post from what viewers think or feel after watching,” he said. This distinction matters because it captures the emotional impact of a video beyond what appears on screen.

The AI system also separates known medical risk factors from emerging social media trends, such as viral heartbreak clips or challenges that may negatively influence teenagers. Telling established risks apart from new social phenomena is particularly important on platforms where trends change quickly.

One of the most significant aspects of Xie’s research is that the model is proactive: it aims to predict high-risk videos before they reach large audiences. That foresight could change how platforms such as TikTok approach content moderation, potentially keeping harmful content away from susceptible viewers.

As social media continues to shape public discourse and personal well-being, this research has clear implications for platforms. They face increasing scrutiny over their content moderation practices, particularly as mental health concerns grow alongside rising social media use. Xie’s work points toward more responsible platform governance, in which platforms act before harmful content spreads in order to protect vulnerable users.

Xie is available to discuss how the model was developed and what it could mean for content moderation across platforms. Reporters interested in an interview can reach out to [email protected].

As the digital landscape continues to evolve, so too must the approaches to managing its impact on mental health. The emergence of tools like Xie’s AI model signals a shift toward more nuanced and effective moderation strategies, marking a significant step in protecting users from harmful content online.
