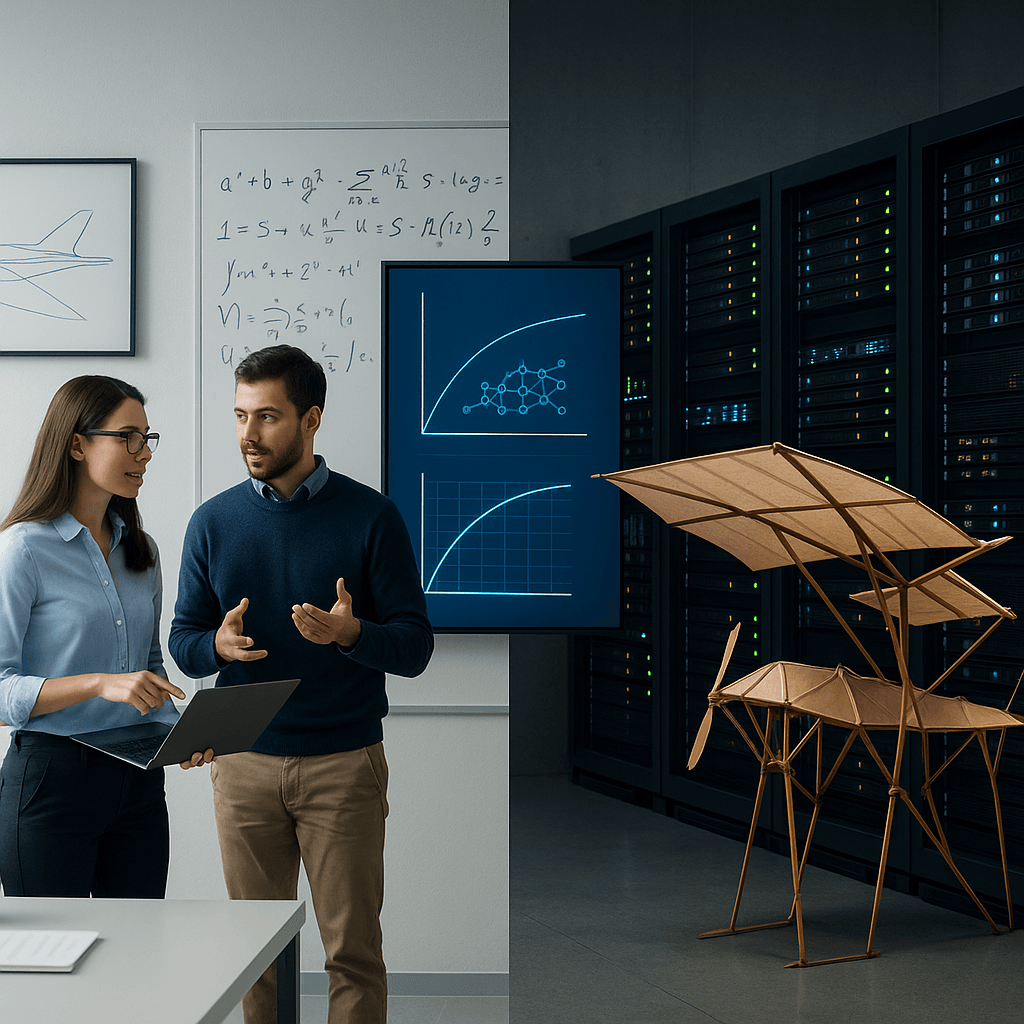

A new AI lab, Flapping Airplanes, emerged from stealth mode on Wednesday, announcing a $180 million seed round backed by investors including Google Ventures, Sequoia Capital, and Index Ventures. The company’s arrival is notable not only for its financial backing but also for its contrarian approach to AI development, which diverges from the industry’s prevailing focus on scaling up compute.

While many competitors are preoccupied with expanding compute capacity and amassing ever-larger training datasets, Flapping Airplanes aims to reshape the conversation by prioritizing fundamental research breakthroughs. The lab’s mission is to improve training methods for large language models (LLMs) so that they depend less on the vast datasets that current AI systems require.

This strategy presents a stark contrast to the dominant “scaling paradigm” embraced by many in the industry. As outlined by David Cahn, a partner at Sequoia, the prevailing belief posits that increasing computational power and data volume will ultimately lead to the development of artificial general intelligence (AGI). Cahn argues, however, that Flapping Airplanes signals a pivotal shift towards a long-term research focus that may unlock significant advances in AI within the next decade.

In his commentary on Sequoia’s investment, Cahn noted, “The scaling paradigm argues for dedicating a huge amount of society’s resources, as much as the economy can muster, toward scaling up today’s LLMs, in the hopes that this will lead to AGI. The research paradigm argues that we are 2-3 research breakthroughs away from an ‘AGI’ intelligence, and as a result, we should dedicate resources to long-running research, especially projects that may take 5-10 years to come to fruition.” This perspective embodies a growing recognition that the quest for AGI may require innovative thinking rather than just increased resources.

Flapping Airplanes’ entry into the AI sector marks it as the latest player in an increasingly crowded field, yet its commitment to fundamental research sets it apart. Traditional approaches have primarily involved scaling existing architectures, often leading to escalating costs and diminishing returns. In contrast, the lab’s focus on foundational research may not only yield novel training techniques but also contribute to sustainable advancements in the field.

As the AI landscape continues to evolve, the open question is whether the industry can pivot away from its current trajectory, which is dominated by compute-heavy models. Flapping Airplanes may represent a critical inflection point, advocating for a shift in investment toward long-term research initiatives that could ultimately redefine the capabilities of AI technologies. This approach offers an alternative to the status quo and emphasizes the importance of fostering innovation through dedicated research efforts.

Looking ahead, the success of Flapping Airplanes will be closely monitored as it navigates the intricacies of the AI sector. By challenging established norms and proposing a distinct research-driven paradigm, the lab’s approach could either catalyze a new wave of AI advancement or serve as a cautionary tale about the pitfalls of diverging from industry standards. As the quest for AGI continues, Flapping Airplanes’ journey will likely influence discussions around the direction of AI investment and research priorities in the years to come.
