In a departure from traditional vertical integration strategies, Microsoft CEO Satya Nadella has announced that the company will continue to purchase artificial intelligence chips from Nvidia and AMD while developing its own custom silicon. The approach, outlined during recent investor discussions, is a hedging strategy: it addresses the surging computational demands of AI workloads while guarding against the inherent risks of semiconductor development.

The announcement aligns with Microsoft’s aggressive expansion of AI infrastructure to support its growing portfolio of generative AI services, which includes the Copilot suite and Azure OpenAI offerings. Nadella highlighted that the development of custom chips, including the Maia AI accelerator and Cobalt CPU, serves as an additional option rather than a replacement for existing suppliers. This pragmatic stance reveals that Microsoft’s computational needs are outpacing the capabilities of any single manufacturer, even as its internal technology begins to take shape.

Industry analysts suggest that this multi-vendor strategy reflects lessons learned from other major tech players. Amazon Web Services, despite its own development of Graviton processors and Trainium AI chips, continues to utilize Nvidia GPUs within its cloud services. Similarly, Google maintains partnerships with Nvidia while deploying its proprietary Tensor Processing Units. This trend underscores a vital principle of modern AI infrastructure: sourcing chips from multiple vendors is not only prudent risk management but an operational necessity for serving enterprise customers with diverse workload requirements.

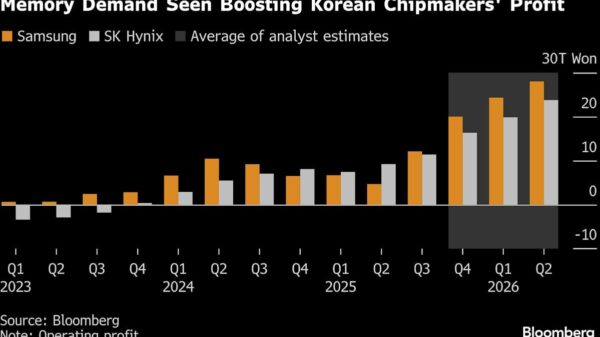

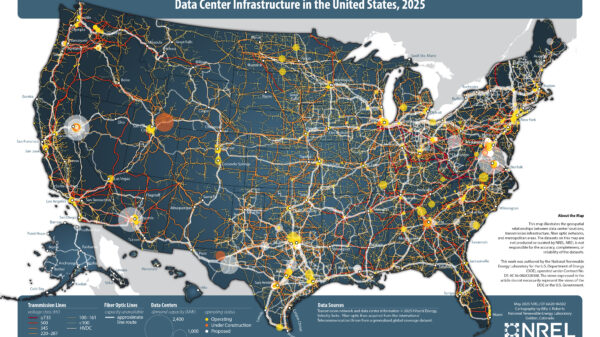

Microsoft’s capital expenditure on AI infrastructure has surged, with the company investing billions of dollars each quarter in data center expansion and chip procurement. The rationale for maintaining relationships with Nvidia and AMD while also developing proprietary silicon extends beyond cost. Custom chips typically require long development timelines and significant upfront investment before reaching production scale, whereas established vendors provide immediate availability and proven performance for current AI models.

The Maia chip represents Microsoft’s first significant venture into custom AI accelerators, targeting workloads where performance can be optimized for the company’s own software stack. However, Maia’s development timeline means it cannot yet cover the full range of the company’s computational needs. In contrast, Nvidia’s H100 and upcoming B100 GPUs, alongside AMD’s MI300 series accelerators, are proven options that support the wide array of AI frameworks and models Microsoft’s enterprise customers rely on. This reality argues for maintaining robust procurement channels even as internal alternatives emerge.

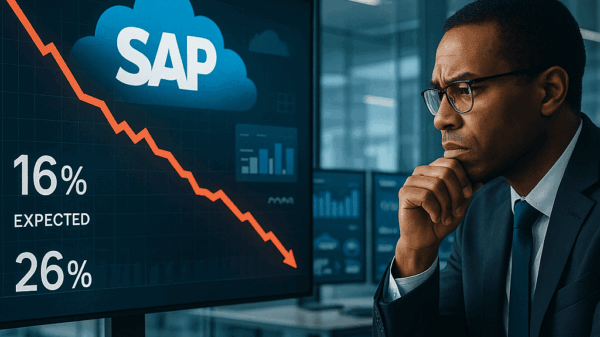

Moreover, the competitive landscape of the AI chip market reinforces Microsoft’s strategy. Nvidia currently holds an estimated 80% of the AI accelerator market, which gives it substantial economies of scale in manufacturing and a software ecosystem that has become the industry standard for AI development. AMD’s MI300 series presents a viable alternative, particularly for customers looking to reduce reliance on a single vendor. By maintaining strong relationships with both suppliers, Microsoft strengthens its negotiating position while ensuring access to the latest innovations from multiple sources.

Custom chip development is not without its challenges. The semiconductor industry’s complexity limits how quickly a company can develop competitive alternatives to established players. The Maia chip, manufactured by TSMC, represents a multi-year investment in design, software tooling, and validation. Even with ample resources, matching the performance and software maturity of Nvidia’s CUDA ecosystem or AMD’s ROCm platform requires sustained effort across several product iterations.

Technical risks also loom large. Design flaws identified late in development can postpone product launches by months or years, and manufacturing hurdles at advanced process nodes can limit production capacity. By maintaining procurement ties with established vendors, Microsoft safeguards its AI services against potential setbacks from internal chip development initiatives. This becomes particularly critical given the company’s obligations to enterprise customers who demand consistent service levels.

The software dimension adds another layer of complexity. Nvidia’s CUDA platform is embedded in numerous AI development workflows, with a plethora of frameworks and applications optimized for its architecture. While Microsoft can tailor its services for Maia chips, enterprise customers deploying AI workloads on Azure often possess existing codebases designed for Nvidia hardware. Thus, supporting these customers necessitates maintaining substantial Nvidia offerings, even as Microsoft promotes its proprietary solutions for specific scenarios.

Looking ahead, Microsoft’s commitment to a diverse chip procurement strategy signals a broader shift in how hyperscale cloud providers approach AI infrastructure. Instead of exclusive reliance on custom silicon, leading firms are adopting portfolio strategies that blend internal development with external partnerships. This model acknowledges that the AI chip market evolves too swiftly for any single architectural approach to reign supreme across all workloads and client needs.

Additionally, geopolitical factors and supply chain dynamics play a crucial role in shaping Microsoft’s chip strategy. The concentration of advanced chip manufacturing in Taiwan, coupled with ongoing geopolitical tensions, introduces risks that diversification can only partly alleviate, since much leading-edge production runs through the same foundries. By sustaining relationships with multiple chip vendors, Microsoft still lessens its exposure to disruptions that affect any one supplier’s designs, allocation, or delivery schedules.

As the AI infrastructure landscape continues to evolve, Microsoft’s multi-vendor approach positions it to meet diverse customer needs while fostering competition among architectural approaches. The strategy broadens customer choice and puts pressure on established players like Nvidia and AMD to keep improving their offerings. Microsoft’s success will hinge on how well it balances its relationships with external suppliers against investment in its own chip development, so that it retains the flexibility to meet the growing demands of AI workloads.
