The artificial intelligence industry is witnessing a surge in infrastructure deals, largely driven by a new class of vendors known as neocloud providers. These emerging cloud companies are specifically designed to meet the computational demands of generative AI, offering high-performance GPU clusters and ultra-fast networking. Unlike established giants like Amazon Web Services, Google Cloud, and Microsoft Azure, neocloud providers focus primarily on the optimized infrastructure needed to train and run large AI models.

Neocloud providers are startups dedicated almost exclusively to AI workloads, which allows them to deliver high-performance GPU computing at lower prices than traditional hyperscalers. This focus has placed them at the center of significant industry partnerships, including a $50 billion deal between **Anthropic** and the neocloud firm **Fluidstack** to develop AI-centric data centers in Texas and New York, intended to support future versions of Claude. Meanwhile, **Microsoft** has reportedly spent over $60 billion on neocloud investments, and **Meta** has committed $3 billion to **Nebius** and an additional $14.2 billion to **CoreWeave**, both of which are scaling rapidly to meet demand for GPU-dense compute.

The landscape is characterized by an unprecedented infrastructure arms race, with estimates suggesting that major tech players will spend up to $320 billion by 2025 on new AI data centers. This level of construction poses challenges to America’s power and water systems. **Meta** alone plans to invest $600 billion in new facilities over the next three years, and the **SoftBank-OpenAI-Microsoft-Oracle “Stargate” initiative** aims to build a network of AI-first supercomputing sites across the U.S., potentially reaching a capacity of 10 gigawatts by the end of the decade.

As companies look to secure the infrastructure necessary for advanced AI development, the trend signals a notable shift in sourcing strategies. After years of depending on major cloud providers for their needs, top industry players are increasingly turning to neocloud vendors that promise quicker capacity delivery, flexible terms, and more affordable pricing. With the costs of training cutting-edge models soaring, traditional platforms are often unable to keep pace, creating a space that neoclouds are adept at filling.

Neocloud providers have emerged in response to shortages in GPU availability that became evident during the generative AI boom. Many began in the cryptocurrency mining sector before pivoting to provide GPU-as-a-service, leading to the creation of a new type of cloud computing focused solely on AI. Currently, there are over 100 neocloud providers globally, with around ten gaining significant traction in the United States. Established companies like **CoreWeave** and **Lambda** are leading the charge, closely followed by newcomers such as **Nebius**, **Fluidstack**, and **TensorWave**.

These neocloud providers offer several competitive advantages. Their focused model allows them to provide GPU compute at significantly lower costs compared to major players, with some estimates indicating savings of up to two-thirds. This affordability is particularly attractive to startups and smaller AI developers. Furthermore, the specialized infrastructure and high-bandwidth networks built for AI workloads enable faster access to GPUs, allowing teams to initiate large multi-GPU projects within minutes rather than waiting in extensive queues.

However, the rise of neocloud providers is not without risks. The influx of investment into these startups raises concerns about a market bubble reminiscent of the dot-com era, in which inflated valuations could lead to instability. Additionally, as AI research evolves toward more efficient models, the long-term demand for large GPU clusters may decline, leaving companies with underutilized resources. The market is also becoming increasingly fragmented, and intensifying competition among providers could lead to consolidation or failures.

As these neocloud providers continue to expand, they are putting additional strain on environmental and infrastructure resources. In 2024, U.S. data centers consumed 183 terawatt-hours of electricity, about 4 percent of the nation’s total electricity consumption, and this demand is projected to double by 2030. Water use raises further sustainability concerns: cooling these facilities consumed an estimated 17 billion gallons of water last year.
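To put those electricity figures in perspective, the short Python sketch below works through the arithmetic they imply. It uses only the numbers quoted above (183 terawatt-hours, a share of about 4 percent, and a doubling by 2030). The function names are illustrative, and the assumption of smooth compound growth between 2024 and 2030 is ours, not a claim from the underlying estimates.

```python
# Back-of-the-envelope arithmetic on the figures quoted in the article.
# Assumption (ours): growth between 2024 and 2030 compounds at a constant rate.

DATA_CENTER_TWH_2024 = 183.0   # U.S. data center electricity use, 2024
SHARE_OF_TOTAL = 0.04          # "about 4 percent" of the national total
GROWTH_MULTIPLE = 2.0          # "projected to double by 2030"
YEARS = 2030 - 2024


def implied_total_twh(part_twh: float, share: float) -> float:
    """National total implied by a part and its share of the whole."""
    return part_twh / share


def annual_growth_rate(multiple: float, years: int) -> float:
    """Constant yearly growth rate that yields `multiple` after `years`."""
    return multiple ** (1 / years) - 1


total_twh = implied_total_twh(DATA_CENTER_TWH_2024, SHARE_OF_TOTAL)
projected_2030_twh = DATA_CENTER_TWH_2024 * GROWTH_MULTIPLE
rate = annual_growth_rate(GROWTH_MULTIPLE, YEARS)

print(f"Implied national total: {total_twh:,.0f} TWh")
print(f"Projected 2030 data center use: {projected_2030_twh:,.0f} TWh")
print(f"Implied annual growth: {rate:.1%}")
```

Under these assumptions, doubling over six years corresponds to growth of roughly 12 percent a year, and the 4 percent share implies a national total in the neighborhood of 4,600 terawatt-hours. Because the share is rounded, the implied total is only approximate.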

Looking toward the future, neoclouds will play a pivotal role in the ongoing AI boom, which is driving an insatiable demand for computational power. This surge is shaping a new cloud ecosystem in which neoclouds complement traditional hyperscalers. While general enterprise workloads may still run on conventional clouds, specialized GPU-heavy training and inference will rely significantly on neocloud capacity. The dynamic between these agile providers and established hyperscalers will redefine the landscape of AI development, influencing how quickly innovative models can be created and deployed.

See also Chinese Startup Zhonghao Xinying Launches GPTPU, Claims 1.5x Speed Boost Over NVIDIA A100

Chinese Startup Zhonghao Xinying Launches GPTPU, Claims 1.5x Speed Boost Over NVIDIA A100 eMazzanti Technologies Launches eBot: AI Assistant for 24/7 IT Support with Microsoft Copilot Studio

eMazzanti Technologies Launches eBot: AI Assistant for 24/7 IT Support with Microsoft Copilot Studio ESDS Launches Sovereign-Grade GPU-as-a-Service for Large-Scale AI Workloads

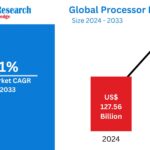

ESDS Launches Sovereign-Grade GPU-as-a-Service for Large-Scale AI Workloads Global Processor Market Set to Reach $210.26B by 2033, Driven by AI and Cloud Demand

Global Processor Market Set to Reach $210.26B by 2033, Driven by AI and Cloud Demand