BENGALURU: Salesforce is refining its artificial intelligence strategy by re-evaluating how large language models (LLMs) are deployed within enterprise software. As organizations struggle to move generative AI from pilot projects to reliable, production-ready systems, the company is focusing on the gap between LLM benchmark performance and real-world business results. Srini Tallapragada, president and chief engineering and customer success officer at Salesforce, said the past two years have made that gap plain.

“LLMs are foundational technology and will be relevant for many years,” Tallapragada remarked. “But enterprises are discovering that strong benchmark performance doesn’t automatically translate into consistent business outcomes.” Many large firms spent 2024 and early 2025 conducting AI pilot programs, only to find that few systems were ready for full-scale deployment. Tallapragada emphasized that the primary obstacle lies in the “last mile,” where AI systems must function predictably across various edge cases, over time, and under regulatory scrutiny.

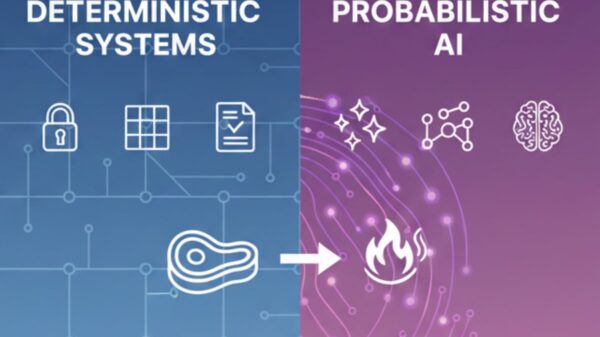

By design, LLMs are probabilistic systems. They excel at interpreting intent, language, and context, but they do not follow fixed instructions every time. “They may comply 97% of the time, but enterprises need workflows that work 100% of the time,” Tallapragada said, pointing to sensitive areas such as financial services, customer refunds, and policy enforcement.

To tackle these challenges, Salesforce is integrating generative AI with deterministic systems that enforce non-negotiable rules and standard operating procedures. This approach entails using LLMs in scenarios that require flexibility, reasoning, and empathy, while depending on rule-based logic for compliance-heavy or audit-sensitive tasks. “People initially tried to use the same tool for everything,” Tallapragada explained. “But sometimes a simple ‘if-then’ rule is the right answer. The challenge is making these different approaches work seamlessly together.”
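The split Tallapragada describes can be illustrated with a minimal sketch. This is not Salesforce's implementation: the refund limits, the `RefundRequest` type, and the `fake_llm` stand-in are all hypothetical and exist only to show a fixed rule making the decision while a model is limited to wording the reply.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical policy limits, chosen only for illustration.
MAX_REFUND_DAYS = 30
MAX_AUTO_REFUND = 500.0


@dataclass(frozen=True)
class RefundRequest:
    amount: float
    days_since_purchase: int


def refund_decision(req: RefundRequest) -> str:
    """Deterministic 'if-then' rule: the same input always yields the same decision."""
    if req.days_since_purchase > MAX_REFUND_DAYS:
        return "deny"
    if req.amount > MAX_AUTO_REFUND:
        return "escalate_to_human"
    return "approve"


def handle_refund(req: RefundRequest, draft_reply: Callable[[str], str]) -> dict:
    """The rule decides; the model (passed in as draft_reply) only phrases the message."""
    decision = refund_decision(req)
    message = draft_reply(decision)
    return {"decision": decision, "message": message}


def fake_llm(decision: str) -> str:
    """Stand-in for a real model call, an assumption so the sketch runs offline."""
    return f"[draft reply for decision: {decision}]"


result = handle_refund(RefundRequest(amount=120.0, days_since_purchase=10), fake_llm)
```

Because the decision never passes through the model, an auditor can trace any outcome back to the rule, while the customer-facing wording remains free to vary.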

Tallapragada also cautioned against over-reliance on industry benchmarks, saying that many assessments are theoretical and can be manipulated. “A perfect score doesn’t mean the system will perform reliably in the real world,” he said. Despite adopting this more disciplined approach, Salesforce is not reducing its use of LLMs. The company works with both large and small models and continues to increase overall usage, with attention to performance, cost, and sustainability.

Looking toward the future, Tallapragada believes that 2026 could represent a pivotal moment for enterprise AI adoption. “The focus is shifting from excitement to outcomes,” he stated. “Our job is to turn powerful models into systems that deliver real value for businesses—consistently and at scale.” This aligns with previous statements from Salesforce CEO Marc Benioff, who has emphasized that the company’s AI strategy is intended to augment human decision-making rather than replace it. In this vision, AI agents manage routine tasks while human operators retain roles that require judgment.
