At CES 2026 in Las Vegas, Nvidia unveiled Rubin, its latest computing architecture, along with a set of updates aimed at enterprises. Nvidia says the new platform will change how businesses deploy advanced artificial intelligence systems. Among the first vendors to offer the Rubin platform is CoreWeave, a neocloud provider whose clients include IBM and OpenAI.

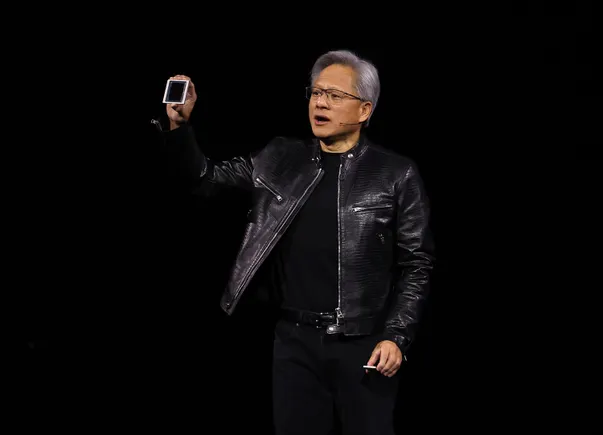

The Rubin platform, built from six chips, is designed to run inference more efficiently and to train models with fewer GPUs than its predecessor, the Nvidia Blackwell platform. Nvidia claims these improvements will lower inference costs and resource demands, which the company believes will help AI adoption spread across industries. “Vera Rubin is designed to address this fundamental challenge we have: The amount of computation necessary for AI is skyrocketing; the demand for Nvidia GPUs is skyrocketing,” said Jensen Huang, Nvidia’s founder and CEO, during his CES keynote. Huang added that the compute required by rapidly evolving AI models is growing exponentially year over year.

Demand for compute surged in 2025 as businesses rushed to deploy new AI tools. On its fiscal Q1 2026 earnings call, Microsoft disclosed a compute capacity shortage that it expected to affect its operations throughout the fiscal year. A report from IT services management firm Flexential found that nearly 80% of organizations are proactively evaluating their AI data center capacity to prepare for future needs.

Major players such as Microsoft, AWS, Google, Oracle, and OpenAI are expected to adopt Nvidia’s Rubin platform as they work through ongoing capacity constraints. Interest is not limited to the large hyperscalers: traditional IT vendors such as Dell, HPE, and Lenovo have also expressed interest.

The Rubin platform is aimed at what Nvidia calls “next generation AI factories.” These factories must process thousands of input tokens to supply context for complex workflows while delivering real-time inference within power, cost, and deployment constraints. Kyle Aubrey, director of technical marketing for Nvidia’s accelerated computing product team, explained that AI factories are specialized infrastructure stacks built to streamline the AI lifecycle.

To reach these goals, Nvidia combined GPUs, CPUs, power delivery, and cooling into a single integrated system that underpins the Rubin platform. “By doing so, the Rubin platform treats the data center, not a single GPU server, as the unit of compute,” Aubrey said. According to Aubrey, this approach establishes a new foundation for producing intelligence efficiently and predictably at scale.

Nvidia was not the only company to present a new rack-scale platform at CES. AMD introduced Helios, which it says is designed to deliver high bandwidth and energy efficiency for training trillion-parameter models. In its announcement, AMD described compute infrastructure as the backbone of AI development, driving unprecedented growth in global compute capacity. “AMD is building the compute foundation for this next phase of AI through end-to-end technology leadership, open platforms, and deep co-innovation with partners across the ecosystem,” said Lisa Su, AMD’s chair and CEO.

The launch of both Rubin and Helios shows how quickly the industry is responding to growing AI workloads. Both platforms move toward tightly integrated, rack-scale systems rather than individual servers, a shift with significant implications for how data centers are designed and how enterprises run AI.
