The landscape of artificial intelligence (AI) is undergoing a significant transformation in 2025, making machine learning accessible to a broader range of developers, startups, and researchers. This shift is largely attributed to the rise of open-source AI models, particularly open-weight models (models whose trained parameters are published for anyone to download), which offer a cost-effective and flexible alternative to proprietary AI solutions. The proliferation of these tools is democratizing AI, allowing smaller entities to innovate without the hefty price tag associated with proprietary software and services.

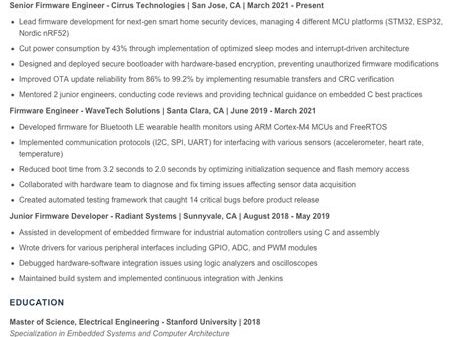

As companies increasingly opt for local deployment of open-source AI, they benefit from enhanced data privacy and control, minimizing the risk of data leaks, particularly in sensitive applications. This approach allows developers to run models on their own systems, fine-tune them, and leverage them for real-world applications without relying heavily on cloud services. In 2025, open-weight models have emerged as a dominant form of open AI, enabling developers to create customized solutions swiftly and economically.

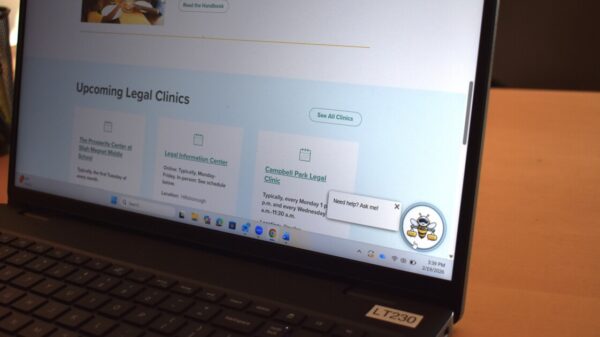

Startups are among the primary beneficiaries of this trend. Open-source AI enables them to build essential tools, from chatbots and search systems to writing assistants and data analysis platforms, without incurring prohibitive costs. The flexibility of open-source models also lets these new enterprises avoid the stringent usage restrictions imposed by larger platform providers, fostering innovation and accelerating product development.

Open-source AI also caters to specific local needs and niche sectors that larger companies often overlook. Because developers can focus on regional languages, unique datasets, and industry-specific challenges, many effective AI solutions in 2025 have emerged from small teams dedicated to solving precise problems. This localized approach not only enhances functionality but also aligns technology with the demands of diverse communities.

The privacy implications of open-source AI are profound. Traditional AI services typically require sensitive data to be transmitted to external servers, which unsettles users concerned about data safety. In contrast, open-source models can be deployed locally, so sensitive information remains on the user's own premises. This is particularly crucial in sectors such as healthcare, finance, and law, where data integrity and confidentiality are paramount.

Moreover, organizations utilizing open-source models gain greater control over their data management practices, eliminating ambiguities related to data storage and reuse. This clarity is vital in an era where data privacy is increasingly scrutinized, making open-source technology a preferred choice for privacy-conscious entities.

However, the rise of open-source AI is not without its challenges. As powerful models are released publicly, the potential for misuse also grows. Misleading information and automated scams, for example, raise significant ethical questions. As of 2025, it remains uncertain who bears responsibility for actions taken using these open-source tools, which complicates both regulation and ethical use of AI.

While legislative efforts are underway to govern AI, the decentralized nature of open-source models presents unique hurdles. Existing regulations tend to be designed with large corporations in mind, often failing to account for the nuances associated with open-source technology. Different countries have begun to adopt varied approaches to AI regulation, but the absence of universal guidelines complicates compliance for developers who must navigate a patchwork of rules.

Concerns also persist regarding potential overreach in regulation. Striking a balance between ensuring safety and fostering innovation is an ongoing challenge, particularly for smaller teams that may lack the resources to navigate complex legal frameworks. The risk is that stringent regulations could inadvertently consolidate AI capabilities within larger organizations, stymying the creativity and ingenuity present in smaller startups.

The coexistence of open-source and closed AI models is becoming a hallmark of the industry. Most companies are now utilizing a hybrid approach, employing open-source models for custom, private, or budget-sensitive applications while reserving closed models for broader, large-scale tasks. This synergy not only enhances operational efficiency but also enriches the technological landscape.
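
The hybrid split described above can be pictured as a simple routing rule. The following is a minimal Python sketch under stated assumptions, not any company's actual implementation: the names `Task`, `Backend`, and `choose_backend`, along with the three flags, are hypothetical and exist only to illustrate the idea.

```python
from dataclasses import dataclass
from enum import Enum


class Backend(Enum):
    """Hypothetical labels for the two kinds of model a team might run."""
    LOCAL_OPEN_WEIGHT = "local-open-weight"
    HOSTED_CLOSED = "hosted-closed"


@dataclass(frozen=True)
class Task:
    """Illustrative description of a workload; all fields are assumptions."""
    description: str
    contains_sensitive_data: bool = False
    needs_custom_model: bool = False
    budget_sensitive: bool = False


def choose_backend(task: Task) -> Backend:
    """Send custom, private, or budget-sensitive work to a local open-weight
    model; everything else goes to a hosted closed model."""
    if (
        task.contains_sensitive_data
        or task.needs_custom_model
        or task.budget_sensitive
    ):
        return Backend.LOCAL_OPEN_WEIGHT
    return Backend.HOSTED_CLOSED


if __name__ == "__main__":
    examples = [
        Task("summarize patient records", contains_sensitive_data=True),
        Task("answer general customer questions"),
    ]
    for task in examples:
        print(f"{task.description}: {choose_backend(task).value}")
```

In practice the decision would likely weigh more factors, such as latency, model quality, and licensing terms, but the basic shape is the same: the sensitivity and cost of a task decide where it runs.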

As open-source AI reshapes the foundations of machine learning, it empowers a wider array of developers to influence the direction of technology, reducing barriers that once confined innovation to well-funded corporations. This evolution marks a significant departure from viewing AI as merely a product; instead, it is increasingly recognized as a foundational technology that can be built upon by diverse players.

While the journey toward fully harnessing the potential of open-source AI may be gradual, its impact on innovation, privacy, and the emergence of new enterprises is already evident. Addressing the ethical and regulatory challenges that accompany this shift will be critical in determining the future trajectory of artificial intelligence. How these issues are navigated will play a pivotal role in shaping the next chapter of AI development.
