As artificial intelligence (AI) continues to permeate various sectors, a significant shift is underway in how organizations perceive and implement this technology. The recent findings from the AI World Journal indicate a decisive turn in the conversation around AI, moving from “what’s possible” to “what’s sustainable and responsible.” With over 1 billion users engaging with AI tools globally, the industry is acknowledging that the integration of AI must be aligned with ethical standards and governance frameworks.

Since the launch of ChatGPT three years ago, AI has moved from an emerging technology to a foundational force in business operations. Recent statistics show that 88% of organizations have adopted AI for at least one business function, and 800 million users interact with ChatGPT weekly. However, these numbers mask an underlying dilemma: many organizations are experimenting with AI, but few manage to realize its full potential.

McKinsey’s latest research underscores this gap, showing that while 88% of organizations use AI, only 33% have successfully scaled it across their enterprises, and a mere 6% report a significant return on investment (ROI). This phenomenon, often referred to as “pilot purgatory,” reflects a common pattern wherein businesses integrate AI into existing workflows without fundamentally redesigning their processes to maximize the benefits of the technology.

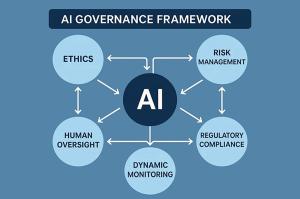

Moreover, organizations have yet to address the critical issue of governance and accountability. According to Hostinger’s research, nearly half of companies allocate a modest percentage of their tech budgets to AI, yet many lack robust frameworks for ensuring responsible AI use. With the rise of generative AI and its applications in customer support and automation, the need for stringent oversight regarding bias, data privacy, and ethical considerations has become paramount.

The fragmented state of the current AI ecosystem adds to this urgency. DataReportal's analysis indicates that, despite the vast number of users, many leading AI companies operate without transparency. This leads to inflated claims and leaves the sector without unified governance standards. Although governments around the globe have begun implementing regulations for AI safety and ethical use, these frameworks often lag behind rapid technological advances.

The AI World Journal emphasizes that the critical challenge for organizations is aligning AI capabilities with robust governance frameworks. As the industry grapples with bias, safety, and accountability, new roles focused on responsible AI governance are emerging, yet adoption of these frameworks remains slow.

Interestingly, emerging markets, particularly in Africa, present a unique opportunity. Countries like Nigeria, where significant portions of the population remain unconnected to the internet, can develop AI infrastructure without the constraints of legacy systems. This absence of outdated processes allows for the integration of responsible AI practices from the outset, potentially giving these regions a competitive edge over their developed counterparts.

Organizations that prioritize responsible AI from the beginning are likely to benefit significantly in the coming years. As the AI World Journal highlights, the market is shifting toward recognizing responsible AI as a competitive advantage, especially as consumer expectations evolve. Companies that effectively address responsible governance can expect to attract and retain top talent while navigating emerging regulations more smoothly.

Looking ahead, the year 2026 is poised to mark a pivotal moment in the AI landscape. As the conversation shifts from merely deploying AI to deploying it responsibly, organizations will be forced to confront the implications of their technology choices. Those that treat responsible AI as a compliance checkbox may find themselves trailing behind more forward-thinking competitors.

The approaching wave of regulation, including frameworks such as the EU AI Act, will make ethical considerations even more pressing. Organizations that have proactively built responsible AI practices into their operations will not only strengthen user trust but also be better positioned as the market continues to evolve.

In conclusion, the transformative potential of AI is undeniable. However, the question that organizations must grapple with is not whether to adopt AI, but rather how to do so responsibly. As we approach 2026, those who successfully navigate this complex landscape will emerge as leaders, setting a precedent for how technology can be leveraged ethically and sustainably.
