A recent study by Anthropic reveals significant trends in the autonomy of AI agents, highlighting how oversight is evolving and how agents are being applied in higher-risk environments. The research offers insight into how users interact with AI agents, particularly through Anthropic's public API and its coding agent Claude Code, and points to a notable shift toward agents operating with greater independence.

The analysis, which examined millions of interactions, indicates a marked increase in the duration of autonomous sessions. Top users have begun allowing AI agents to operate for stretches exceeding forty minutes without intervention, a substantial leap compared to previous practices where tasks were frequently interrupted. This trend suggests a growing confidence among users in the capabilities of AI systems.

Furthermore, experienced users exhibit a distinct behavioral shift as they become more accustomed to working with AI. Many have transitioned to auto-approve features, reducing the frequency of manual checks on the actions performed by the agent. Interestingly, while trust in the AI appears to grow, these users also tend to interrupt the agent more often when they perceive unusual behavior. This duality indicates that trust in AI does not eliminate the necessity for oversight; instead, it evolves alongside a refined understanding of when monitoring is essential.

The AI agent itself demonstrates a cautious approach, increasingly pausing to seek clarification as tasks escalate in complexity. This behavior suggests an intrinsic design aimed at enhancing communication between the agent and its human counterparts, arguably fostering more effective collaboration.

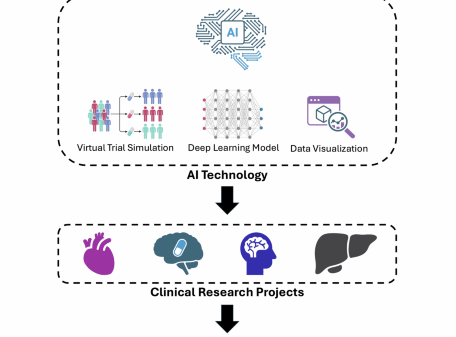

The research further highlights a diverse array of domains using AI agents, with software engineering leading in usage. However, early signs of adoption are also emerging in sectors such as healthcare, cybersecurity, and finance. Although most actions executed by these agents remain low-risk and easily reversible, often safeguarded by restricted permissions or human oversight, a small fraction could have irreversible consequences, such as sending messages externally.

Anthropic notes that the level of real-world autonomy currently realized falls significantly short of the potential indicated by external capability assessments, including those conducted by METR. The company underscores that the safe deployment of these technologies hinges on the development of stronger post-deployment monitoring systems. Additionally, effective design for human-AI cooperation will be critical, ensuring that autonomy is granted judiciously rather than recklessly.

As AI technology continues to evolve and integrate into various sectors, the findings from this study underscore the necessity for adaptive oversight mechanisms that can keep pace with growing user trust and increasing complexity of tasks. The implications for industries venturing into higher-risk applications are profound, as the balance between AI autonomy and human oversight becomes ever more critical.
