Critical security vulnerabilities have been identified in several widely used open-source Python AI and machine learning libraries, which power models on the popular platform Hugging Face. These flaws expose the models to remote code execution (RCE) through poisoned metadata, raising concerns about the integrity of shared AI tools within the open-source ecosystem.

The affected libraries include NeMo from Nvidia, Uni2TS from Salesforce, and FlexTok, a collaborative effort between Apple and EPFL’s Visual Intelligence and Learning Lab. All three libraries use Hydra, an open-source configuration management tool maintained by Meta. The crux of the issue is Hydra’s hydra.utils.instantiate() function, which will call any callable named in configuration metadata, not only class constructors.
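To make the mechanism concrete, here is a minimal sketch of the general pattern, written with the standard library only. It is not Hydra's code: `resolve_target` and `naive_instantiate` are hypothetical stand-ins that imitate the `_target_` and `_args_` keys Hydra configurations use, and the `eval` call is given a harmless expression in place of an attacker's payload.

```python
import importlib
from typing import Any, Mapping


def resolve_target(dotted_path: str) -> Any:
    """Import 'package.module.attr' and return the attribute it names."""
    module_name, _, attr = dotted_path.rpartition(".")
    module = importlib.import_module(module_name)
    return getattr(module, attr)


def naive_instantiate(config: Mapping[str, Any]) -> Any:
    """Hypothetical stand-in for a config-driven factory.

    It calls whatever `_target_` names, with no check that the
    target is a class the application actually expects.
    """
    target = resolve_target(config["_target_"])
    args = config.get("_args_", [])
    # Keys without a leading underscore are passed as keyword arguments.
    kwargs = {k: v for k, v in config.items() if not k.startswith("_")}
    return target(*args, **kwargs)


# Intended use: the metadata names a class to construct.
intended = naive_instantiate({"_target_": "collections.OrderedDict", "a": 1})

# Abuse: the same code path calls a function chosen by the metadata author.
# "1 + 1" is a harmless placeholder; eval() evaluates it and returns 2.
abused = naive_instantiate({"_target_": "builtins.eval", "_args_": ["1 + 1"]})
```

The point of the sketch is that nothing distinguishes the two calls from the loader's side: both are "a dotted name plus arguments" read from metadata.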

Malicious actors could exploit this vulnerability by publishing modified models that carry harmful metadata. When such a model is loaded, the poisoned metadata can invoke functions such as eval() or os.system(), resulting in arbitrary code execution. The vulnerabilities were discovered by Unit 42, the threat research team at Palo Alto Networks, which responsibly disclosed the findings to the maintainers of the affected libraries. Fixes, advisories, and Common Vulnerabilities and Exposures (CVE) identifiers have since been issued, and no exploitation in the wild has been confirmed.

According to Curtis Carmony, a malware research engineer at Unit 42, “Attackers would just need to create a modification of an existing popular model, with either a real or claimed benefit, and then add malicious metadata.” He cautioned that while formats like safetensors may seem secure, “there is a very large attack surface in the code that consumes them.”

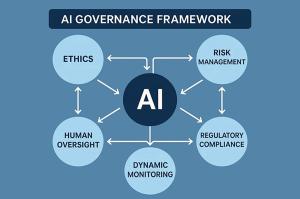

This discovery highlights a broader risk within the open-source AI supply chain. Models on Hugging Face rely on over 100 Python libraries, nearly half of which incorporate Hydra, so the same pattern could recur across the ecosystem. Although Meta has updated Hydra’s documentation to warn of the RCE risk, a recommended block-list mechanism to mitigate these vulnerabilities has not yet been developed, which complicates efforts to secure shared open-source AI infrastructure.
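Until such a mechanism exists upstream, a library that loads third-party metadata could check targets itself before instantiating anything. The sketch below uses an allow-list rather than the block-list mentioned above, since an allow-list fails closed; it is an illustration under stated assumptions, not Hydra's API or any of the vendors' actual fixes. The names `ALLOWED_PREFIXES`, `find_targets`, `assert_targets_allowed`, and `UntrustedTargetError` are all hypothetical, and the config is assumed to be plain dicts and lists (an OmegaConf config would first need converting, for example with `OmegaConf.to_container`).

```python
from typing import Any, Iterable, Iterator

# Hypothetical allow-list: only targets under these prefixes are accepted.
ALLOWED_PREFIXES = ("torch.nn.", "my_project.models.")


class UntrustedTargetError(ValueError):
    """Raised when metadata names a callable outside the allow-list."""


def find_targets(node: Any) -> Iterator[Any]:
    """Yield every `_target_` value found in a nested dict/list config."""
    if isinstance(node, dict):
        for key, value in node.items():
            if key == "_target_":
                yield value
            else:
                yield from find_targets(value)
    elif isinstance(node, (list, tuple)):
        for item in node:
            yield from find_targets(item)


def assert_targets_allowed(
    config: Any, allowed_prefixes: Iterable[str] = ALLOWED_PREFIXES
) -> None:
    """Reject the config if any target is not a string under an allowed prefix."""
    prefixes = tuple(allowed_prefixes)
    for target in find_targets(config):
        if not isinstance(target, str) or not target.startswith(prefixes):
            raise UntrustedTargetError(f"refusing to instantiate {target!r}")


# Usage: validate untrusted metadata before handing it to any instantiate call.
safe = {"model": {"_target_": "torch.nn.Linear", "in_features": 4}}
assert_targets_allowed(safe)  # passes silently

poisoned = {"model": {"layers": [{"_target_": "os.system", "_args_": ["id"]}]}}
try:
    assert_targets_allowed(poisoned)
    rejected = False
except UntrustedTargetError:
    rejected = True
```

The check only inspects strings and never imports the named module, so validating a poisoned config does not itself run attacker-chosen code.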

The implications extend beyond the three libraries named. As open-source AI becomes integral to more applications, robust security measures become essential. Because these libraries are interconnected, a flaw in one shared dependency can cascade to the many models and applications that rely on it.

Industry experts emphasize the importance of vigilance and proactive measures among developers and users of these libraries. Ensuring that models are sourced from trusted repositories and maintaining an awareness of ongoing security advisories can help mitigate potential risks associated with compromised metadata.

Looking forward, as the demand for AI solutions continues to grow, so too will the scrutiny of the libraries that support them. The open-source community must grapple with the challenges of maintaining security while fostering innovation. As more organizations adopt AI technologies, addressing vulnerabilities such as those revealed in this incident will be crucial for safeguarding the integrity of the AI supply chain.
