Google Research has introduced Natively Adaptive Interfaces (NAI), a framework intended to make accessibility a core part of how technology is built. Announced by Sam Sepah, AI Accessibility Research Program Manager, the framework targets a longstanding problem: accessibility is often treated as an afterthought in product design. Instead of requiring users with disabilities to adjust to technology, NAI proposes that technology should adapt dynamically to their needs.

Built on multimodal AI agents, the NAI framework aims to make accessibility the default for all users. Its design centers on an “orchestrator” agent working with a set of “sub-agents.” Rather than making users navigate complex menus, the primary agent assesses the user’s overall goal and coordinates with specialized agents to reconfigure the interface in real time.

For instance, when a user with low vision opens a document, NAI goes beyond simply offering a zoom function. The orchestrator agent identifies the document type and the user’s context, and sub-agents can then scale text, adjust UI contrast, or provide real-time audio descriptions of images. For users with ADHD, the system can proactively simplify page layouts to reduce cognitive load and highlight critical information. The approach draws on what researchers call the “curb-cut effect”: interfaces designed to accommodate specific, demanding needs often end up improving the experience for all users.
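To make the orchestrator/sub-agent pattern more concrete, here is a minimal Python sketch. It is not Google’s implementation: in NAI the agents are multimodal AI models, whereas this sketch replaces the orchestrator’s reasoning with a static lookup table. Every name in it (`UserContext`, `Interface`, `ROUTES`, `orchestrate`, the agent functions, and need labels such as `"low_vision"`) is hypothetical. It only illustrates the control flow described above: one orchestrator reads the user’s context and delegates interface changes to specialized sub-agents.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class UserContext:
    # Hypothetical user profile; not part of any published NAI API.
    needs: set[str]
    document_type: str


@dataclass
class Interface:
    # Hypothetical interface settings that sub-agents may change.
    font_scale: float = 1.0
    high_contrast: bool = False
    audio_image_descriptions: bool = False
    simplified_layout: bool = False
    actions: list[str] = field(default_factory=list)  # log of applied changes


SubAgent = Callable[[Interface, UserContext], None]


def text_scaling_agent(ui: Interface, ctx: UserContext) -> None:
    ui.font_scale = 1.5
    ui.actions.append("scale_text")


def contrast_agent(ui: Interface, ctx: UserContext) -> None:
    ui.high_contrast = True
    ui.actions.append("raise_contrast")


def image_description_agent(ui: Interface, ctx: UserContext) -> None:
    # Assumption: only these document types contain images worth describing.
    if ctx.document_type in {"slides", "web_page"}:
        ui.audio_image_descriptions = True
        ui.actions.append("describe_images")


def layout_simplifier_agent(ui: Interface, ctx: UserContext) -> None:
    ui.simplified_layout = True
    ui.actions.append("simplify_layout")


# Hypothetical routing table: a static stand-in for the orchestrator model's
# decision about which sub-agents address which need.
ROUTES: dict[str, list[SubAgent]] = {
    "low_vision": [text_scaling_agent, contrast_agent, image_description_agent],
    "adhd": [layout_simplifier_agent],
}


def orchestrate(ctx: UserContext) -> Interface:
    """Start from a default interface and let each relevant sub-agent adapt it."""
    ui = Interface()
    for need in sorted(ctx.needs):  # sorted so the order of changes is deterministic
        for agent in ROUTES.get(need, []):  # unknown needs are ignored
            agent(ui, ctx)
    return ui


if __name__ == "__main__":
    ui = orchestrate(UserContext(needs={"low_vision"}, document_type="slides"))
    print(ui.actions)
```

For a low-vision user opening a slide deck, `orchestrate` applies `scale_text`, `raise_contrast`, and `describe_images` in that order. The lookup table keeps the example deterministic and easy to follow; in the system described above, that routing decision would instead come from the orchestrator model reasoning about the user’s goal.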

The development of NAI is grounded in collaboration with organizations dedicated to the disability community. Google.org is partnering with several key institutions, including the Rochester Institute of Technology’s National Technical Institute for the Deaf (RIT/NTID), The Arc, and Team Gleason. These partnerships are aimed at ensuring that the tools created address real-world challenges faced by individuals with disabilities.

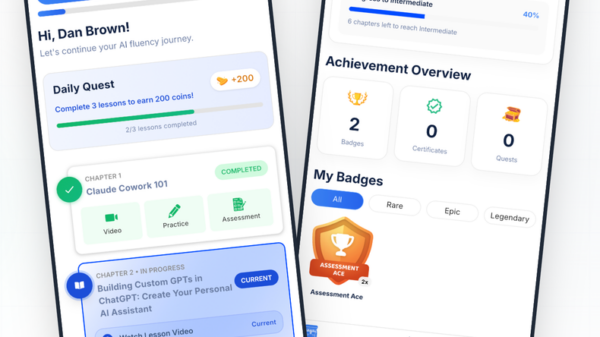

A notable example of this initiative is the Grammar Lab, an AI-powered tutoring tool developed by Erin Finton, a lecturer at RIT/NTID. This tool generates customized learning paths for students in both American Sign Language (ASL) and English, employing AI to produce tailor-made multiple-choice questions that adapt to each student’s language goals. The result is an educational experience that fosters independence and a deeper understanding of language.

While NAI is currently a research initiative, elements of its framework are already visible in Google’s public-facing products. Features such as the newly launched Gemini in Chrome side panel and the “Auto Browse” functionality are early steps toward a web that anticipates user needs. As Google moves closer to launching Project Aluminium, the NAI framework offers a glimpse of a future in which operating systems act as active collaborators, adjusting in real time to individual users’ abilities.

By rethinking how technology can serve all users, Google’s NAI framework marks a notable shift in how accessibility is approached, with the goal of more inclusive digital experiences. As the research matures, its implications for users with disabilities, and for everyone else, could be significant.
