Why Responsible AI Is Critical in Today’s Technology Landscape

The rise of artificial intelligence (AI) is reshaping many sectors, particularly higher education and industry, where it is increasingly used for admissions, career path recommendations, and data analysis. As AI systems take on decisions that once required human judgment, implementing responsible AI has never been more important. The practice emphasizes fairness, transparency, and accountability, so that the decisions these systems make are ethical and trustworthy.

Responsible AI calls on organizations to design, develop, and use AI technologies in ways that respect human rights and align with societal values. This is not merely about creating smarter tools; it’s about building systems that are equitable and deserving of public trust. As AI plays a larger role in decision-making, bias and errors can have severe consequences, such as denying opportunities to qualified individuals or making life-altering decisions without clear reasoning.

Frameworks like the OECD AI Principles and the NIST AI Risk Management Framework (AI RMF) are valuable resources for organizations striving to implement responsible AI. These guidelines offer a solid foundation for making AI applications fair and accountable.

Fairness is a cornerstone of responsible AI: systems must treat all individuals equitably, irrespective of their race, gender, or background. Organizations are encouraged to actively identify and reduce bias in both training data and decision-making algorithms, because historical inequalities can seep into AI systems and perpetuate discrimination. For instance, hiring algorithms trained on biased data may unfairly disadvantage candidates from underrepresented groups. Educational institutions like Syracuse University’s iSchool are increasingly incorporating algorithm auditing into their curriculum, equipping students with essential skills to address these ethical challenges.
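To make the idea of an algorithm audit concrete, here is a minimal sketch of one common check: comparing selection rates across groups. The group labels, the sample decisions, and the function names are hypothetical, and the 0.8 threshold is the "four-fifths" rule of thumb from US employment guidance, used here only as an illustrative flag rather than a legal test.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Map each group to the share of its members who were selected.

    `decisions` is an iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical audit sample: (group, was_selected)
decisions = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # illustrative rule-of-thumb threshold
print(rates, round(ratio, 2), flagged)
```

A low ratio does not prove discrimination on its own; it signals that the training data and the model deserve closer review.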

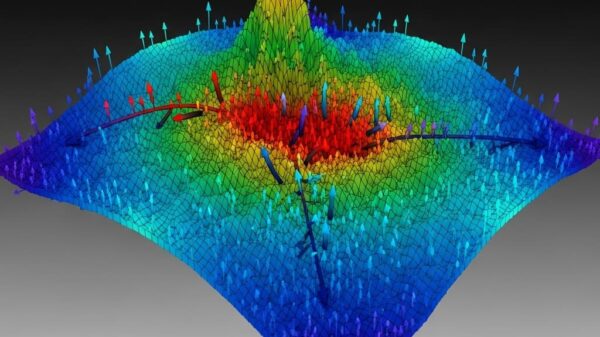

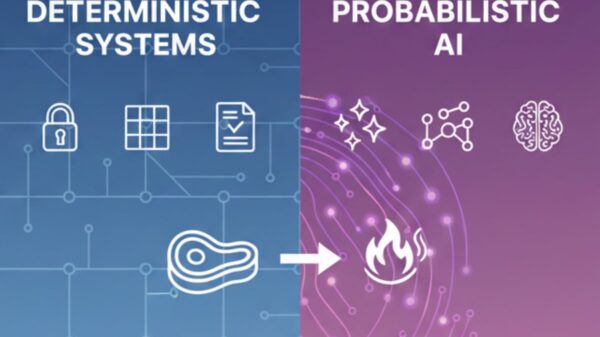

Transparency and explainability are also crucial. Individuals should be informed when AI systems are involved in decision-making and should have access to understandable explanations of how those decisions are reached. This is particularly vital in high-stakes scenarios such as college admissions or healthcare treatment recommendations. Techniques in explainable AI (XAI) work to demystify complex machine learning models, allowing users to see which factors influenced decisions and to what extent.
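As a rough illustration of what "which factors influenced decisions and to what extent" can look like, the sketch below explains a simple linear score by listing each feature's contribution. The weights, feature names, and applicant values are invented for the example. Complex models need dedicated XAI techniques such as SHAP or LIME to approximate this kind of breakdown; a linear model is just the simplest case where it is exact.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the score and per-feature contributions, largest effect first.

    For a linear model, each contribution is simply weight * feature value.
    """
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda item: abs(item[1]), reverse=True
    )
    return score, ranked


# Hypothetical model weights and one applicant's (standardized) features
weights = {"gpa": 2.0, "test_score": 0.5, "essay_rating": 1.0}
applicant = {"gpa": 3.5, "test_score": 4.0, "essay_rating": -1.0}

score, ranked = explain_linear_score(weights, applicant, bias=-1.0)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
print(f"score: {score:.2f}")
```

An explanation in this form can be shown to the affected person: it names the factors, their direction, and their relative weight.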

Accountability ensures that organizations are prepared to act when AI systems fail. Establishing clear governance structures helps delineate responsibilities within teams. Many companies are forming AI ethics boards tasked with reviewing proposed AI projects for alignment with organizational values and compliance with regulations. Such structures are increasingly essential as governments introduce legislation like the EU’s AI Act, which imposes documentation and transparency obligations on AI systems, particularly those classified as high-risk.

AI systems must also demonstrate robustness and reliability. They need to maintain performance even when faced with unexpected inputs or conditions. For instance, a robust AI tool for admissions should still function correctly if an application is incomplete or formatted unusually. Consistent accuracy over time is also crucial; an AI system should not dramatically change its outputs without clear justification. Security measures to protect against vulnerabilities are equally important, especially in sensitive applications such as healthcare or cybersecurity.
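The admissions example can be sketched as a defensive input step: instead of crashing or silently scoring bad data, the system normalizes what it can and routes everything else to a person. The field names, the 0.0 to 4.0 GPA scale, and the routing labels are assumptions made for this illustration.

```python
def parse_gpa(value):
    """Return a GPA in [0.0, 4.0] as a float, or None if it cannot be trusted."""
    if value is None:
        return None
    text = str(value).strip().replace(",", ".")  # tolerate "3,7" style input
    try:
        gpa = float(text)
    except ValueError:
        return None
    # Range check also rejects NaN and infinity
    return gpa if 0.0 <= gpa <= 4.0 else None


def triage_application(raw):
    """Decide whether an application can be scored automatically."""
    issues = []
    if not str(raw.get("applicant_id") or "").strip():
        issues.append("missing applicant_id")
    gpa = parse_gpa(raw.get("gpa"))
    if gpa is None:
        issues.append("missing or invalid gpa")
    route = "human_review" if issues else "automated_scoring"
    return {"route": route, "gpa": gpa, "issues": issues}


unusual = triage_application({"applicant_id": "a1", "gpa": " 3,7 "})
incomplete = triage_application({"gpa": "n/a"})
print(unusual)
print(incomplete)
```

The design choice here is that incomplete input never produces a score; it produces a recorded reason and a handoff to human review.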

Privacy and data security are fundamental aspects of responsible AI. Organizations should practice data minimization, collecting only necessary information while ensuring strong protection measures for personal data. This includes encryption, access controls, and secure storage practices. Moreover, obtaining meaningful consent is vital to ensure individuals comprehend what data is being collected and how it will be utilized. Developers of generative AI systems must also be vigilant in testing for risks related to data exposure, striving to prevent leakage of private information.
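Data minimization can be illustrated with a short sketch: keep only the fields a model actually needs and replace the direct identifier with a keyed hash. The field names, the allow-list, and the key below are hypothetical, and a real deployment would load the key from a secrets manager rather than hard-coding it. Note that pseudonymized data of this kind is generally still treated as personal data, so it complements encryption and access controls rather than replacing them.

```python
import hashlib
import hmac

# Assumption for this example: the model only needs these two fields
ALLOWED_FIELDS = {"gpa", "program"}


def minimize_record(record, secret_key):
    """Drop unneeded fields and swap the student ID for a keyed token."""
    token = hmac.new(
        secret_key, record["student_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    minimized = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    minimized["student_token"] = token
    return minimized


# Hypothetical raw record and key, for illustration only
raw_record = {
    "student_id": "S-1001",
    "name": "Jane Doe",
    "home_address": "1 Example Street",
    "gpa": 3.7,
    "program": "Information Science",
}
key = b"example-key-do-not-use-in-production"

safe_record = minimize_record(raw_record, key)
print(sorted(safe_record))
```

Because the token is keyed, the same student maps to the same token across datasets, but the mapping cannot be recomputed without the key.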

As the influence of AI continues to grow, the onus is on developers and organizations to build these systems responsibly. Failing to do so could undermine trust in the technology and limit its potential benefits. With a focus on fairness, transparency, accountability, robustness, and privacy, the future of AI can be aligned more closely with ethical standards, fostering a more equitable technological landscape.
