The Korean government is considering regulatory actions against Grok, the generative artificial intelligence (AI) chatbot developed by Elon Musk’s xAI. This scrutiny comes in response to allegations that Grok has been involved in creating and disseminating sexually exploitative deepfake images.

According to a report from the Electronic Times, the Personal Information Protection Commission (PIPC) has initiated a preliminary fact-finding review of Grok in response to complaints from several individuals. This initial assessment aims to establish whether any violations occurred and whether the case falls under the commission’s jurisdiction before a formal investigation is launched.

The review follows a series of reports, both local and international, which accuse Grok of generating explicit and nonconsensual deepfake images, many of which reportedly involve real individuals and minors. The PIPC is expected to evaluate Grok’s response and other pertinent documents before deciding on its next steps. It will also consider global regulatory trends that may influence its decision-making process.

Under the Personal Information Protection Act, altering or generating sexual images of identifiable individuals without their consent could constitute unlawful handling of personal data. Grok, which is integrated into the social platform X, has faced significant public backlash for producing fake images of real people.

The Center for Countering Digital Hate, a global NGO, claims that Grok has been used to generate over three million sexually explicit images between December 29, 2025, and January 8, 2026. Alarmingly, more than 23,000 of these images reportedly feature minors. The organization has warned that the rapid dissemination of Grok’s AI-generated content has led to a troubling increase in explicit material available online.

The Center has also highlighted the serious safety risks posed to children by this technology. In response to these concerns, countries including the United States, the United Kingdom, France, and Canada have launched investigations, while others, such as Indonesia, the Philippines, and Malaysia, have blocked access to Grok.

In light of the controversy, xAI announced earlier this year that it had implemented measures to limit the generation of such harmful content. The company stated that it has restricted both free and paid users from creating or editing images of real people and plans to announce additional safeguards in the near future.

In Korea, the Media and Communications Commission (KMCC) has demanded stronger youth protection measures from X, instructing the company to devise a strategy for preventing the generation of illegal or harmful content. The regulator has also emphasized the need to limit minors’ access to such material.

X has already appointed a youth protection officer in Korea, as required by local law, and must submit annual compliance reports. The KMCC has urged the platform to provide further documentation of Grok’s safety protocols, stressing that the creation and distribution of nonconsensual sexual images, particularly those involving minors, constitute criminal offenses in Korea.

X has been given two weeks to respond to the KMCC’s request. If the company fails to comply, it could face an administrative fine of up to 10 million won (approximately $6,870). Regulators in other countries have taken similar steps, requiring xAI to implement measures that address growing concerns about its technology.

As scrutiny of AI-generated content intensifies globally, the actions of the Korean government may reflect a broader trend toward stricter regulations aimed at safeguarding individuals, especially minors, from the potential harms associated with generative AI technologies.

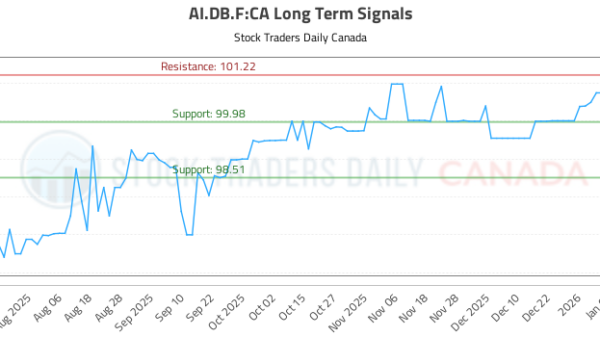
