Editor’s note: This article is part of the Police1 Leadership Institute, which examines the leadership, policy and operational challenges shaping modern policing. In 2026, the series focuses on artificial intelligence technology and its impact on law enforcement decision-making, risk management and organizational change.

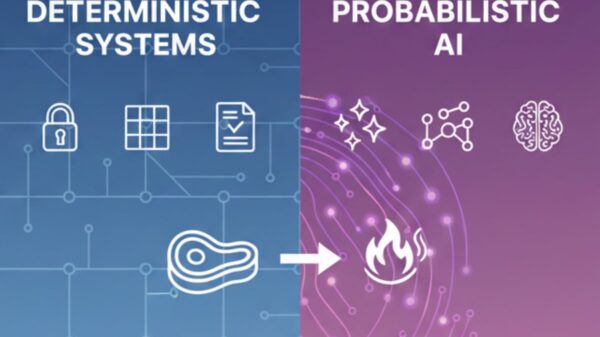

Artificial intelligence (AI) has quickly evolved from a conceptual tool to an operational reality in policing. Law enforcement leaders face the challenge of not just acquiring new technology but integrating it effectively into their organizational structures. Successful AI implementation hinges on aligning personnel, policy, training, and governance to enhance operations while minimizing risks. In many cases, the success or failure of AI initiatives rests less on the technology itself and more on organizational culture, processes, and accountability.

When agencies approach AI as merely a software upgrade, they often find themselves with costly tools that are underutilized or misapplied, leading to potential legal and ethical repercussions. To avoid these pitfalls, law enforcement agencies must develop a framework for internal readiness that emphasizes cultural adaptation, targeted workforce training, and robust governance structures.

Resistance to AI often arises from officers' fears of job displacement. Leaders must proactively communicate that AI is designed to serve as a force multiplier, not a replacement for personnel. The objective is to streamline repetitive tasks so officers can concentrate on areas where human judgment is irreplaceable, such as victim support and community engagement. By automating administrative burdens like body-worn camera transcription and data entry, AI frees officers to focus on critical decision-making.

Once a culture receptive to AI is established, agencies must ensure that their workforce possesses the necessary skills. As law enforcement increasingly relies on data-driven tools, data literacy must become a core competency. This does not mean every officer must become a data scientist, but it does require personnel to understand the data on which AI systems are based, the outputs they generate, and their inherent limitations. Training should be tailored to specific roles, addressing the different responsibilities and risks associated with AI use.

For command staff, training should focus on understanding organizational risks rather than technical proficiency with the software. Chiefs and other leaders must be capable of evaluating systems that produce outputs without transparent reasoning, recognizing potential biases in data, and anticipating implications for privacy and civil rights. This foundational knowledge is critical for setting effective policies and justifying decisions that impact civil liberties.

Crime analysts and technical specialists serve as the vital link between algorithmic outputs and operational application. Their role is not to accept AI results without scrutiny but to validate and contextualize them before operational use. This includes ensuring data quality and integrating AI-generated insights into workflows while preserving human judgment. Effective validation acts as a safeguard against the misuse of untested outputs.

Frontline officers need practical, scenario-based training that emphasizes the appropriate application of AI tools. They should be taught to view AI as a lead generator rather than an absolute authority. Understanding the risks of “hallucinations,” where systems produce plausible but erroneous conclusions, is crucial. Officers must verify AI-assisted outputs against existing evidence to maintain the integrity of the justice process.

Ensuring internal readiness begins at the procurement stage. Historically, agencies have purchased technology in silos, leading to fragmented data management and inconsistent policies. Moving toward integrated platforms and interoperable systems can help agencies synthesize data from various sources, such as license plate readers and computer-aided dispatch, to create a comprehensive picture of crime patterns.
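As a rough illustration of what synthesizing sources can mean in practice, the sketch below pairs dispatch calls with license plate reads recorded in the same beat within a short time window. The record types, field names, and the 15-minute window are all assumptions made for the example and do not reflect any particular vendor's schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


# Hypothetical record shapes; real LPR and CAD exports will differ.
@dataclass(frozen=True)
class PlateRead:
    plate: str
    beat: str
    seen_at: datetime


@dataclass(frozen=True)
class DispatchCall:
    call_id: str
    beat: str
    received_at: datetime


def reads_near_calls(reads, calls, window=timedelta(minutes=15)):
    """Map each call ID to plates read in the same beat within the window."""
    matches = {}
    for call in calls:
        matches[call.call_id] = [
            read.plate
            for read in reads
            if read.beat == call.beat
            and abs(read.seen_at - call.received_at) <= window
        ]
    return matches
```

A match produced this way is only a lead for an analyst to validate, not a finding in itself.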

Establishing an AI governance committee is essential for reviewing and approving AI tools and associated policies. This committee should consist of operational commanders, IT and cybersecurity experts, legal representatives, and training personnel to ensure comprehensive oversight. Governance reviews should outline approved uses, enforce human review for significant decisions, and establish data management protocols to mitigate risks.
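One way a committee's decisions could be made enforceable is to record them as a simple policy that software checks before an AI output is acted on. The sketch below is a minimal illustration under that assumption; the `ToolPolicy` type, the use labels, and the `may_proceed` function are hypothetical names, not part of any existing product or standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolPolicy:
    """Hypothetical record of a governance committee's decision for one tool."""
    approved_uses: frozenset
    review_required: frozenset  # approved uses that also need human sign-off


def may_proceed(policy, use, human_reviewed):
    """Return True only if the use is approved and any required review is done."""
    if use not in policy.approved_uses:
        return False
    if use in policy.review_required and not human_reviewed:
        return False
    return True


# Example policy: both uses are approved, but lead generation needs a reviewer.
example_policy = ToolPolicy(
    approved_uses=frozenset({"report_transcription", "lead_generation"}),
    review_required=frozenset({"lead_generation"}),
)
```

Keeping the approved-use list separate from the review list lets the committee tighten oversight of a single use without withdrawing the tool entirely.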

A key aspect of governance is maintaining agency control over data. Contracts with vendors should be scrutinized to define data ownership and usage rights clearly. Agencies must be aware of how their data will be stored, secured, and potentially used after contract termination. A common pitfall arises when contracts allow for broad vendor reuse of agency data under vague clauses, leading to disputes that could damage public trust.

The integration of AI in policing presents both opportunities and challenges. Agencies that prioritize culture, invest in targeted training, and enforce robust governance structures are better positioned to leverage AI technologies. As policing increasingly embraces AI, the balance between technological advancement and the preservation of essential human elements will play a crucial role in shaping the future of law enforcement.

