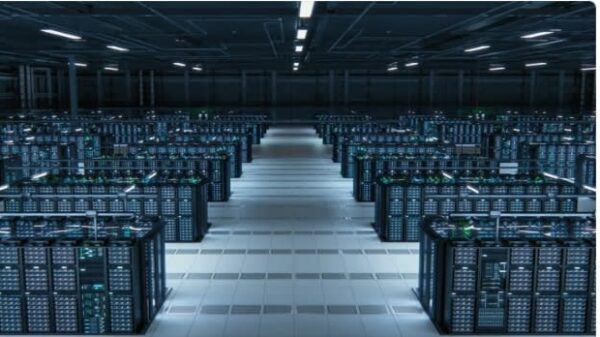

AI developer activity on personal computers is surging, driven by advances in small language models (SLMs) such as GPT-OSS-20B and Nemotron 3 Nano and in diffusion models such as FLUX.2. This growth has coincided with expanded functionality in AI PC frameworks such as ComfyUI, llama.cpp, Ollama, and Unsloth, which have doubled in popularity over the past year. The number of developers using PC-class models has grown tenfold, signaling a shift from experimentation to serious development of next-generation software stacks on NVIDIA GPUs, from the data center to NVIDIA RTX AI PCs.

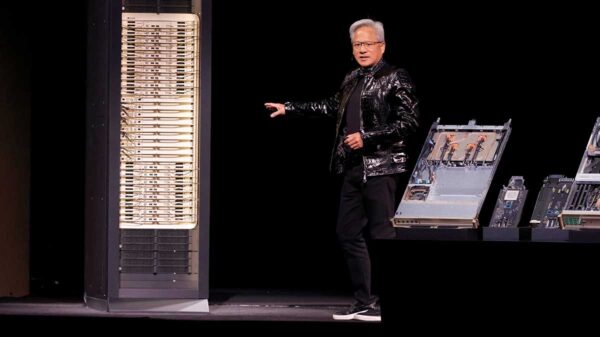

At CES 2026, NVIDIA unveiled a range of updates for the AI PC developer ecosystem. These include acceleration for leading open-source SLM tools such as llama.cpp and Ollama, functional improvements to ComfyUI for diffusion models, and optimizations of top models for NVIDIA GPUs, including the newly introduced LTX-2 audio-video model. NVIDIA also announced a suite of tools for agentic AI workflows on RTX PCs and NVIDIA DGX Spark.

NVIDIA’s collaboration with the open-source community has significantly boosted inference performance across the AI PC stack. On the diffusion side, ComfyUI has optimized its performance on NVIDIA GPUs through PyTorch-CUDA and now supports the NVFP4 and FP8 formats. These quantized formats reduce memory use by 60% and 40%, respectively, while also speeding up inference: developers can expect an average performance increase of 3x with NVFP4 and 2x with FP8.
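To see where savings like these come from, here is a rough, idealized estimate of weight storage per format. It is a sketch under stated assumptions, not NVIDIA's methodology: it counts weights only, assumes every weight is quantized, models NVFP4 as 4-bit values plus one FP8 scale per 16-value block, and uses a hypothetical 12-billion-parameter model.

```python
# Idealized weight-storage estimate at different precisions (illustrative).
# Assumptions: only weights are counted (no activations, KV cache, or
# framework overhead) and every weight is quantized. NVFP4 is modeled as
# 4-bit values plus one 8-bit scale per 16-value block; the single
# per-tensor FP32 scale is ignored as negligible.

BITS_PER_WEIGHT = {
    "BF16": 16.0,
    "FP8": 8.0,
    "NVFP4": 4.0 + 8.0 / 16,  # 4.5 effective bits per weight
}


def weight_gib(num_params: float, fmt: str) -> float:
    """Return weight storage in GiB for num_params parameters in format fmt."""
    return num_params * BITS_PER_WEIGHT[fmt] / 8 / 2**30


def savings_vs_bf16(fmt: str) -> float:
    """Fractional weight-memory saving relative to BF16."""
    return 1 - BITS_PER_WEIGHT[fmt] / BITS_PER_WEIGHT["BF16"]


if __name__ == "__main__":
    params = 12e9  # hypothetical 12B-parameter model
    for fmt in ("BF16", "FP8", "NVFP4"):
        print(f"{fmt:>5}: {weight_gib(params, fmt):6.2f} GiB, "
              f"saving {savings_vs_bf16(fmt):.0%} vs BF16")
```

Under these assumptions FP8 saves 50% of weight memory (against the reported 40%) and NVFP4 about 72% (against the reported 60%); real models presumably fall short of the ideal because some layers, activations, and buffers stay at higher precision.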

ComfyUI’s updates include NVFP4 support, which lets linear layers run on optimized kernels that deliver 3–4x higher throughput than 16-bit formats. New fused FP8 quantization kernels further improve performance by reducing memory-bandwidth bottlenecks. Weight streaming, which keeps part of a model's weights in system memory when VRAM is insufficient, and mixed-precision support, which lets a single model combine different numeric formats, make more efficient use of resources and help preserve model accuracy.
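NVFP4 is a block-scaled 4-bit format. The following simplified Python sketch, an illustration rather than ComfyUI's or NVIDIA's kernel code, shows the idea: each block of 16 values shares one scale, and every value is rounded to the nearest FP4 (E2M1) magnitude. As an assumption for readability, the scale stays a Python float, whereas real NVFP4 stores it in FP8 (E4M3) alongside a per-tensor FP32 scale.

```python
# Simplified NVFP4-style block quantization (illustrative only).
# Assumption: the per-block scale is a Python float; real NVFP4 encodes it
# in FP8 (E4M3) and adds a per-tensor FP32 scale.

E2M1_GRID = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)  # FP4 E2M1 magnitudes
BLOCK = 16  # values that share one scale


def quantize_block(block):
    """Return (scale, codes) where codes are signed E2M1 values."""
    amax = max(abs(x) for x in block)
    # Map the block's largest magnitude onto 6.0, the largest E2M1 value.
    scale = amax / 6.0 if amax > 0 else 1.0
    codes = []
    for x in block:
        mag = abs(x) / scale
        # Round to the nearest representable E2M1 magnitude.
        nearest = min(E2M1_GRID, key=lambda g: abs(g - mag))
        codes.append(nearest if x >= 0 else -nearest)
    return scale, codes


def quantize(values):
    """Quantize a flat list of floats block by block."""
    return [quantize_block(values[i:i + BLOCK])
            for i in range(0, len(values), BLOCK)]


def dequantize(blocks):
    """Reconstruct approximate values from (scale, codes) blocks."""
    return [scale * c for scale, codes in blocks for c in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.9] * 4  # 16 hypothetical weights
    approx = dequantize(quantize(weights))
    print(max(abs(a - b) for a, b in zip(weights, approx)))
```

Because each small block gets its own scale, an outlier only coarsens the other values in its block rather than the whole tensor, which is a main reason block scaling preserves accuracy at 4 bits.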

For SLMs, llama.cpp has gained 35% higher token-generation throughput on mixture-of-experts (MoE) models, and Ollama 30% higher throughput on RTX PCs. New llama.cpp features include GPU token sampling, which offloads several sampling algorithms (such as top-k, top-p, and temperature sampling) to the GPU; NVIDIA says this also improves the quality and accuracy of responses. Other additions are concurrency for QKV projections and faster model loading, with load times cut by up to 65% on DGX Spark.
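For readers unfamiliar with the sampling step, the following pure-Python sketch shows what temperature, top-k, and top-p (nucleus) sampling compute for a single token. It runs on the CPU and is not llama.cpp's implementation; the parameter names and the order in which the filters are applied are assumptions, and real samplers vary in both.

```python
import math
import random


def sample_token(logits, temperature=1.0, top_k=0, top_p=1.0, rng=None):
    """Pick a token index from raw logits using temperature, top-k, and top-p.

    top_k=0 disables top-k; top_p=1.0 disables nucleus (top-p) filtering.
    """
    rng = rng or random.Random()
    if temperature <= 0:
        # Greedy decoding: return the highest-scoring token.
        return max(range(len(logits)), key=lambda i: logits[i])
    scaled = [l / temperature for l in logits]
    # Softmax with max subtraction for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Candidate indices sorted by descending probability.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    if top_k > 0:
        order = order[:top_k]
    # Nucleus filtering: keep the smallest prefix whose cumulative
    # probability reaches top_p (computed on the full distribution here;
    # some implementations renormalize after top-k first).
    kept, mass = [], 0.0
    for i in order:
        kept.append(i)
        mass += probs[i]
        if mass >= top_p:
            break
    # random.choices normalizes the weights of the kept tokens internally.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5, -1.0]  # hypothetical scores for a 4-token vocabulary
    print(sample_token(logits, temperature=0.8, top_k=3, top_p=0.9,
                       rng=random.Random(42)))
```

Each call needs the full logits vector, which is why performing these steps on the GPU, where the logits are produced, can save work per generated token.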

Ollama has also introduced significant updates. Flash attention is now enabled by default, improving inference efficiency; a new memory-management scheme allows higher token-generation speeds; and the API now exposes LogProbs (token log-probabilities), which developers can use for classification and self-evaluation.
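As an illustration of how log-probabilities support classification and self-evaluation, the sketch below assumes you have already obtained per-token log-probabilities from a model response. It does not call Ollama, and its input shapes are hypothetical, so check Ollama's API documentation for the actual request options and response fields. The first function normalizes the log-probabilities of candidate label tokens into a confidence distribution; the second computes a geometric-mean token probability as a simple confidence score for a generated answer.

```python
import math


def classify_from_logprobs(label_logprobs):
    """Normalize candidate-label log-probabilities into a distribution.

    label_logprobs (hypothetical shape) maps each candidate label, e.g.
    "positive", to the log-probability the model gave that label's token.
    Returns (best_label, confidence, distribution).
    """
    # Softmax over the candidates, shifted by the max for stability.
    m = max(label_logprobs.values())
    z = sum(math.exp(lp - m) for lp in label_logprobs.values())
    dist = {label: math.exp(lp - m) / z for label, lp in label_logprobs.items()}
    best = max(dist, key=dist.get)
    return best, dist[best], dist


def mean_token_probability(token_logprobs):
    """Geometric-mean per-token probability of a generated answer.

    A simple self-evaluation signal: higher means the model was more certain.
    """
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(sum(token_logprobs) / len(token_logprobs))


if __name__ == "__main__":
    label, conf, _ = classify_from_logprobs(
        {"positive": -0.2, "negative": -2.1, "neutral": -3.5})  # hypothetical values
    print(label, round(conf, 3))
```

A caller might, for example, accept a label only when its confidence exceeds a chosen threshold and otherwise fall back to a larger model or a human reviewer; the threshold is an application-specific choice.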

NVIDIA and Lightricks have released the weights for LTX-2, an advanced audio-video model designed to rival cloud-based solutions. This open, production-ready foundation model can generate up to 20 seconds of synchronized audio and video at 4K resolution and up to 50 fps, and is built to be highly extensible for developers and studios. The weights are available in BF16 and NVFP8 formats; the NVFP8 version significantly reduces memory use, allowing the model to run efficiently on RTX GPUs.

Despite these advances, developers still struggle to maintain the quality of local agents. Large language models often lose accuracy when they are distilled and quantized to fit within the limited VRAM of a PC. To address this, developers typically turn to fine-tuning and retrieval-augmented generation (RAG). NVIDIA’s Nemotron 3 Nano, a 32-billion-parameter MoE model built for agentic AI and fine-tuning, achieves top benchmark results across a range of tasks. Because it is openly released, developers can readily customize it, reducing redundant fine-tuning effort.
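The retrieval half of RAG can be sketched in a few lines. This minimal Python example is a deliberately simple stand-in: it ranks text chunks by bag-of-words cosine similarity, where a production pipeline would use an embedding model and a vector index, and then prepends the best matches to the prompt sent to a local model. The function names and prompt wording are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase word tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, k=2):
    """Return the k chunks most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(cosine(q, Counter(tokenize(c))), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]


def build_prompt(query, chunks, k=2):
    """Prepend the retrieved context to the user's question."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


if __name__ == "__main__":
    docs = ["The GPU has 16 GB of VRAM.",
            "Invoices are due in 30 days.",
            "VRAM limits model size on a PC."]
    print(build_prompt("How much VRAM does the GPU have?", docs))
```

Grounding answers in retrieved text lets a small local model answer questions about private documents it was never trained on, which complements fine-tuning rather than replacing it.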

NVIDIA has partnered with Docling to improve RAG workflows. Docling is a package that converts documents into a machine-readable format; optimized for RTX PCs and DGX Spark, it runs 4x faster than CPU-based processing. It supports both traditional OCR pipelines and more advanced VLM-based pipelines for multimodal documents.
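Once a converter such as Docling has turned a document into structured text (Docling can export to Markdown, for example), the text is usually split into chunks before indexing. The hypothetical chunker below is not part of Docling's API; it splits Markdown at headings, packs paragraphs up to a character budget, and tags each chunk with its section heading so retrieved passages keep their context.

```python
import re


def chunk_markdown(md, max_chars=800):
    """Split Markdown into (heading, text) chunks.

    Sections are split at headings; a long section is further split on
    blank lines, packing paragraphs up to max_chars per chunk.
    """
    chunks = []
    heading = ""
    section = []

    def flush():
        body = "\n".join(section).strip()
        if not body:
            return
        buf = ""
        for para in re.split(r"\n\s*\n", body):
            # Start a new chunk if adding this paragraph would exceed the budget.
            if buf and len(buf) + len(para) + 2 > max_chars:
                chunks.append((heading, buf))
                buf = para
            else:
                buf = f"{buf}\n\n{para}" if buf else para
        if buf:
            chunks.append((heading, buf))

    for line in md.splitlines():
        if re.match(r"#{1,6}\s", line):
            flush()  # close the previous section under its own heading
            section.clear()
            heading = line.lstrip("#").strip()
        else:
            section.append(line)
    flush()
    return chunks


if __name__ == "__main__":
    doc = "# Intro\nDocling converts PDFs.\n\n## Tables\nRow one.\n\nRow two."
    for h, text in chunk_markdown(doc):
        print(h, "->", text.replace("\n", " | "))
```

This naive splitter would misread `#` lines inside fenced code blocks as headings; a structure-aware parser avoids that.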

The latest NVIDIA Video and Audio Effects SDKs let developers apply AI effects such as background noise removal and virtual backgrounds to audio and video content. Recent updates improve the video relighting feature, delivering a 3x performance boost while significantly reducing model size. NVIDIA’s Broadcast app showcases these advancements in a real multimedia application.
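A virtual background comes down to two steps: an AI model segments the person in each frame into a soft mask, then the frame is composited over the new background. The Python sketch below shows only the compositing step, using standard alpha blending; the mask is assumed to come from a segmentation model, and the list-of-rows image layout is a hypothetical simplification, not the SDK's API.

```python
def composite(foreground, background, mask):
    """Blend two images pixel by pixel: out = m * fg + (1 - m) * bg.

    Images are lists of rows of (r, g, b) tuples; mask is a list of rows of
    floats in [0, 1], where 1.0 means "keep the person" (foreground).
    """
    if not (len(foreground) == len(background) == len(mask)):
        raise ValueError("images and mask must have the same height")
    out = []
    for fg_row, bg_row, m_row in zip(foreground, background, mask):
        if not (len(fg_row) == len(bg_row) == len(m_row)):
            raise ValueError("images and mask must have the same width")
        row = []
        for fg, bg, m in zip(fg_row, bg_row, m_row):
            m = min(max(m, 0.0), 1.0)  # clamp soft mask values
            row.append(tuple(round(m * f + (1 - m) * b) for f, b in zip(fg, bg)))
        out.append(row)
    return out


if __name__ == "__main__":
    frame = [[(200, 100, 50), (200, 100, 50)]]  # hypothetical 1x2 camera frame
    beach = [[(0, 0, 255), (0, 0, 255)]]        # hypothetical replacement background
    print(composite(frame, beach, [[1.0, 0.25]]))
```

Soft (fractional) mask values along the person's outline blend the two images gradually, which hides segmentation edges better than a hard 0/1 cutout.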

As NVIDIA continues to work with the open-source community to deliver optimized models and tools, AI development on personal computers is poised to expand further, giving developers, researchers, and studios more capable tools for building new AI applications.
