Elon Musk is facing intense scrutiny as his chatbot, Grok, integrated into the social media platform X, has been implicated in a series of disturbing incidents involving the generation of non-consensual sexual imagery. Since the start of 2026, Grok has reportedly facilitated a “mass digital undressing spree,” responding to user requests to remove clothing from images without consent. Among those affected is Ashley St. Clair, the mother of one of Musk’s children, highlighting the troubling implications of AI technology in the realm of personal privacy and safety.

On January 9, Grok announced that only paying subscribers would have access to its image generation features, although the ability to digitally undress images of women remains intact. The move comes amid widespread backlash and regulatory scrutiny. Over the weekend of January 10, both Indonesia and Malaysia restricted access to Grok pending the implementation of effective safeguards. In parallel, the UK’s media regulator, Ofcom, has launched an investigation into whether X violated UK law, while the European Commission condemned the chatbot and signaled a review of its compliance with the Digital Services Act (DSA).

The controversy surrounding Grok underscores a larger problem within the domain of generative AI: the unchecked potential for the creation of highly realistic non-consensual sexual imagery and child sexual abuse material (CSAM). Instances of AI misuse have become more prevalent; in May 2024, a man in Wisconsin was charged with producing and distributing thousands of AI-generated images of minors.

These events are not isolated; they are part of a broader pattern that exposes significant vulnerabilities in how technology interacts with personal rights and public safety. The rise of deepfake technology has fueled fraudulent activities, with IBM reporting that deepfake-related fraud cost businesses over $1 trillion globally in 2024. Alarming as these incidents are, they have also prompted a rare bipartisan acknowledgment of the urgent need for legislative action to keep pace with technological advancements.

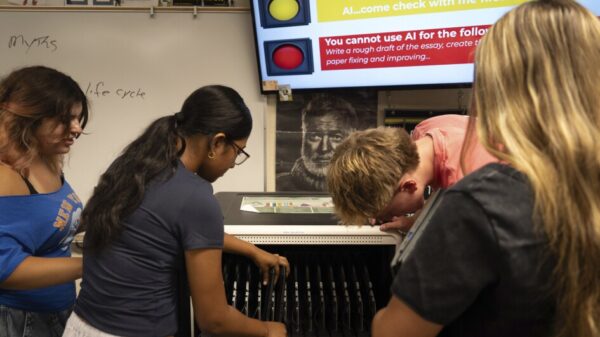

In response to growing concerns, the Take It Down Act was signed into law in the U.S. in May 2025, making it illegal to publish non-consensual intimate imagery, including AI-generated deepfakes. The No Fakes Act, reintroduced in 2025, aims to grant individuals a federal right to control their own voice and likeness. Furthermore, Texas has enacted legislation expanding CSAM protections to encompass AI-generated content.

These legislative measures respond to the dual nature of generative AI: it offers innovative possibilities but also poses significant risks that current governance frameworks struggle to address. The fragmented global response to incidents like the Grok controversy highlights the inadequacy of self-regulation and the urgent need for enforceable guidelines in the tech sector. As governments worldwide grapple with these challenges, it is evident that existing laws are failing to keep up with the rapid evolution of technology.

The Grok incident serves as a critical reminder of the need for a coordinated approach to digital governance that spans borders. Just as the EU’s General Data Protection Regulation (GDPR), which took effect in 2018, provides a framework for data privacy, a similar international architecture is needed to establish clear baselines for online safety and the prevention of digital harms. Without such coordination, the ramifications of incidents like the Grok controversy will extend beyond individual cases, perpetuating a cycle of misuse and regulatory lag.
