AI’s Ethical Dilemmas in High-Stakes Environments

As artificial intelligence (AI) becomes increasingly integrated into humanitarian, security, and disaster-response operations, the complexities surrounding moral decision-making become more pronounced. A recent analysis delves into how probabilistic AI systems handle uncertainty in high-risk situations, highlighting the potential for hidden biases and the vital role of human oversight. The study emphasizes that while AI can enhance operational efficiency, its deployment must be approached with caution, particularly in life-critical contexts.

Current drone systems, whether used for humanitarian demining, disaster mapping, or security surveillance, primarily function as monitoring tools. They collect extensive sensor data—including visual, thermal, and radar inputs—which is then interpreted by human operators tasked with decision-making. The value of these systems lies in their ability to extend human perception while minimizing direct risk to operators; however, risk assessment and judgment remain predominantly human responsibilities.

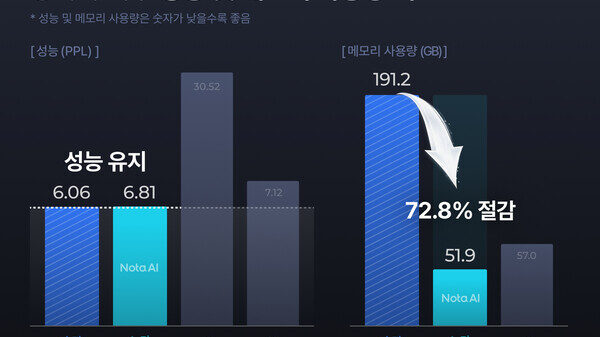

In high-risk environments, AI systems operate on probabilistic principles, lacking a definitive understanding of safety. For instance, a drone equipped with a ground-penetrating radar, thermal imager, or synthetic aperture radar does not yield binary results but generates probability estimates. A former minefield may be classified as 98.2% likely to be free of unexploded ordnance (UXO), a statistic derived from sensor fusion and historical data. This introduces an ethical conundrum: who decides whether a confidence level of 99% is adequate, or if 99.9% is necessary? In scenarios like humanitarian demining, these distinctions hold operational significance: if the estimates are well calibrated, a 99% confidence level implies that, on average, one in every hundred “cleared” zones could still harbor hidden dangers, while 99.9% lowers that to one in a thousand.
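The arithmetic behind that question can be made concrete with a minimal sketch. It assumes, purely for illustration, that the reported confidence is well calibrated and that zones are classified independently of one another; neither assumption comes from the analysis itself, and the function names are hypothetical.

```python
def expected_hazardous_zones(confidence: float, zones: int) -> float:
    """Expected number of 'cleared' zones that still contain a hazard.

    Assumes the confidence value is a calibrated per-zone probability.
    """
    return (1.0 - confidence) * zones


def prob_at_least_one_hazard(confidence: float, zones: int) -> float:
    """Probability that at least one of `zones` cleared zones is hazardous.

    Assumes each zone's classification is independent (an illustrative
    simplification; real sensor errors are often correlated).
    """
    return 1.0 - confidence ** zones


if __name__ == "__main__":
    for confidence in (0.99, 0.999):
        expected = expected_hazardous_zones(confidence, 100)
        at_least_one = prob_at_least_one_hazard(confidence, 100)
        print(f"confidence={confidence}: expected hazardous zones per 100 = "
              f"{expected:.2f}, P(at least one) = {at_least_one:.3f}")
```

Under these assumptions, clearing 100 zones at 99% confidence leaves roughly a 63% chance that at least one still holds a hazard, while 99.9% confidence brings that down to roughly 10%. The gap between the two thresholds is therefore far larger in practice than the 0.9-point difference suggests.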

The line between passive monitoring and autonomous action is increasingly blurred. Recent incidents involving unauthorized drone incursions over European airspace, which resulted in airport closures and flight diversions, illustrate the potential consequences of misinterpreting sensor data. In future scenarios, AI could be responsible for assessing whether a drone poses a threat, making real-time risk assessments that directly influence operational decisions.

This shift raises critical questions about accountability. AI systems in high-stakes environments face unique challenges, particularly when tasked with replicating human decision-making processes where outcomes can be irreversible. Experienced operators rely on a blend of tacit knowledge, pattern recognition, and situational ethics, making it difficult to encode these variables into AI systems. Operational success in demining or air traffic control often hinges on intricate human judgments that cannot be distilled into simple algorithms.

Furthermore, the potential for bias in training data poses significant risks. AI systems trained on historical minefield data may falter in new contexts, particularly when faced with unconventional or improvised devices. This bias can lead to overconfidence in familiar settings while overlooking the complexities of unfamiliar environments. The challenge lies in auditing these biases while ensuring that AI outputs are interpreted through a human lens, especially when a system’s confidence might obscure underlying uncertainties.

Integrating human oversight into AI decision-making processes is crucial. Studies show that people tend to judge errors made by AI more harshly than comparable human mistakes and lose trust in the system more quickly, a phenomenon known as algorithm aversion. This raises vital ethical questions: should operators be fully “in the loop,” approving every action the AI proposes, or is it acceptable for them to be merely “on the loop,” monitoring decisions and intervening only when something looks wrong? In life-critical contexts, including demining and air traffic control, maintaining a human presence in the decision-making process is essential for accountability.

The disparity between life-critical systems and those utilized for security or economic interests further complicates the ethical landscape. In scenarios such as demining, a single erroneous decision can result in severe harm or loss of life, necessitating near-zero acceptable error rates. Conversely, misclassifying a harmless drone as a threat usually incurs financial losses rather than human casualties, which changes how risks are weighed and makes room for more aggressive operational strategies.

Applications of AI in agriculture illustrate yet another ethical landscape, one where the stakes are markedly lower. AI systems employed in precision agriculture optimize resource use and enhance crop yield, and their errors carry relatively minor consequences. A misapplied chemical or a missed weed patch rarely leads to irreversible harm, allowing for a broader margin of error compared to humanitarian or aviation applications. The path from training data to deployment in agriculture is generally clear, contrasting sharply with the complexities faced in life-critical domains.

As AI continues to evolve and permeate various sectors, including those where human lives hang in the balance, it is imperative to embed ethical principles from the outset. Transparency, explainability, and human oversight must guide the development of these systems to prevent misuse or unintended harm. Without these safeguards, even the most advanced AI technologies risk undermining trust and producing errors with far-reaching consequences.

In summary, the integration of AI into high-stakes domains presents profound challenges and accountability questions. As technological capabilities advance, maintaining a balance between efficiency and ethical responsibility remains crucial, ensuring that life-saving innovations do not come at the cost of human oversight.
