Cybersecurity leaders must brace for a future where cybercriminals can harness the power of artificial intelligence (AI) to automate cyberattacks at an unprecedented scale, according to Heather Adkins, Vice President of Security Engineering at Google. Speaking on the Google Cloud Security podcast, Adkins emphasized that while the full realization of this threat may still be years away, cybercriminals are already beginning to employ AI to enhance various aspects of their operations.

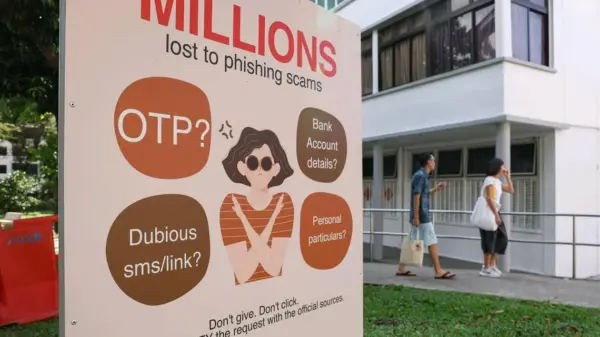

Adkins pointed out that even today, malicious actors are leveraging AI for seemingly mundane tasks, such as grammar and spell-checking in phishing schemes. “It’s just a matter of time before somebody puts all of these things together, end-to-end,” she said. The concern lies not only in the incremental improvements but in the potential for a comprehensive toolkit that could enable attackers to launch sophisticated cyber operations with minimal human oversight.

As AI technologies continue to evolve, the implications for cybersecurity are profound. Adkins elaborated on a scenario in which an individual could prompt a model designed for hacking to target a specific organization and receive a complete attack strategy within a week. This “slow ramp” of AI adoption in criminal activity could manifest over the next six to 18 months, posing significant challenges for cybersecurity professionals.

The Google Threat Intelligence Group (GTIG) has noted increasing experimentation with AI among attackers, with malware families already using large language models (LLMs) to generate commands aimed at stealing sensitive data. Sandra Joyce, a GTIG Vice President, highlighted that nation-states such as China, Iran, and North Korea are actively exploiting AI tools across various phases of their cyber operations, from initial reconnaissance to crafting phishing messages and executing data theft commands.

The potential for AI to democratize cyber threats is alarming to industry experts. Anton Chuvakin, a security advisor in Google’s Office of the CISO, articulated a growing concern that the most significant threat may not come from advanced persistent threats (APTs) but from a new “Metasploit moment”—a reference to the time when exploit frameworks became readily accessible to attackers. “I worry about the democratization of threats,” he stated, emphasizing the risks associated with powerful AI tools falling into the wrong hands.

Adkins provided a stark vision of a worst-case scenario involving an AI-enabled attack that could resemble the infamous Morris worm, which autonomously spread through networks, or the Conficker worm, which caused panic without causing significant damage. She noted that the nature of future attacks will largely depend on the motivations of those who assemble these AI capabilities.

While LLMs today still struggle with basic reasoning—such as differentiating right from wrong or adapting to new problem-solving paths—experts recognize that significant advancements could soon empower attackers. When criminals can efficiently direct AI tools to compromise organizations, defenders will need to redefine success metrics in the post-AI landscape.

Adkins suggested that future cybersecurity strategies might focus less on preventing breaches and more on minimizing the duration and impact of any successful attacks. In a cloud environment, she recommended that AI-enabled defenses should be capable of shutting down instances upon detecting malicious activity, although implementing such systems requires careful consideration to avoid operational disruptions.

“We’re going to have to put these intelligent reasoning systems behind real-time decision-making,” she said, emphasizing the need for a flexible approach that allows for options beyond a simple on/off switch. As organizations prepare for a landscape increasingly shaped by AI, they must innovate to remain resilient against rapidly evolving threats. The challenge lies not only in enhancing defenses but also in understanding how to outmaneuver attackers who may find themselves equally challenged by the complexities of AI.
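To make the idea of "options beyond a simple on/off switch" concrete, the following is a minimal sketch of a graduated response policy. It is not drawn from Adkins' remarks or from any Google product: the `Detection` fields, the `Action` tiers, and the threshold values are all hypothetical, chosen only to illustrate how a defense could weigh detection confidence against the risk of operational disruption.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Hypothetical response tiers, from least to most disruptive."""
    MONITOR = "monitor"    # keep running, collect more evidence
    ISOLATE = "isolate"    # cut network access but leave the instance up
    SHUTDOWN = "shutdown"  # stop the instance entirely


@dataclass(frozen=True)
class Detection:
    """Assumed shape of a detection event; not a real API."""
    instance_id: str
    confidence: float        # detector score in [0, 1]
    business_critical: bool  # would a shutdown disrupt operations?


def choose_action(
    detection: Detection,
    isolate_at: float = 0.5,   # illustrative threshold, not a recommendation
    shutdown_at: float = 0.9,  # illustrative threshold, not a recommendation
) -> Action:
    """Pick a response tier instead of a binary on/off decision."""
    if not 0.0 <= detection.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")

    if detection.confidence >= shutdown_at:
        # For critical workloads, prefer isolation to limit disruption.
        if detection.business_critical:
            return Action.ISOLATE
        return Action.SHUTDOWN

    if detection.confidence >= isolate_at:
        return Action.ISOLATE

    return Action.MONITOR
```

In this sketch, a high-confidence detection on a non-critical instance leads to shutdown, while the same detection on a business-critical instance leads to isolation, reflecting the caution about operational disruption described above.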
